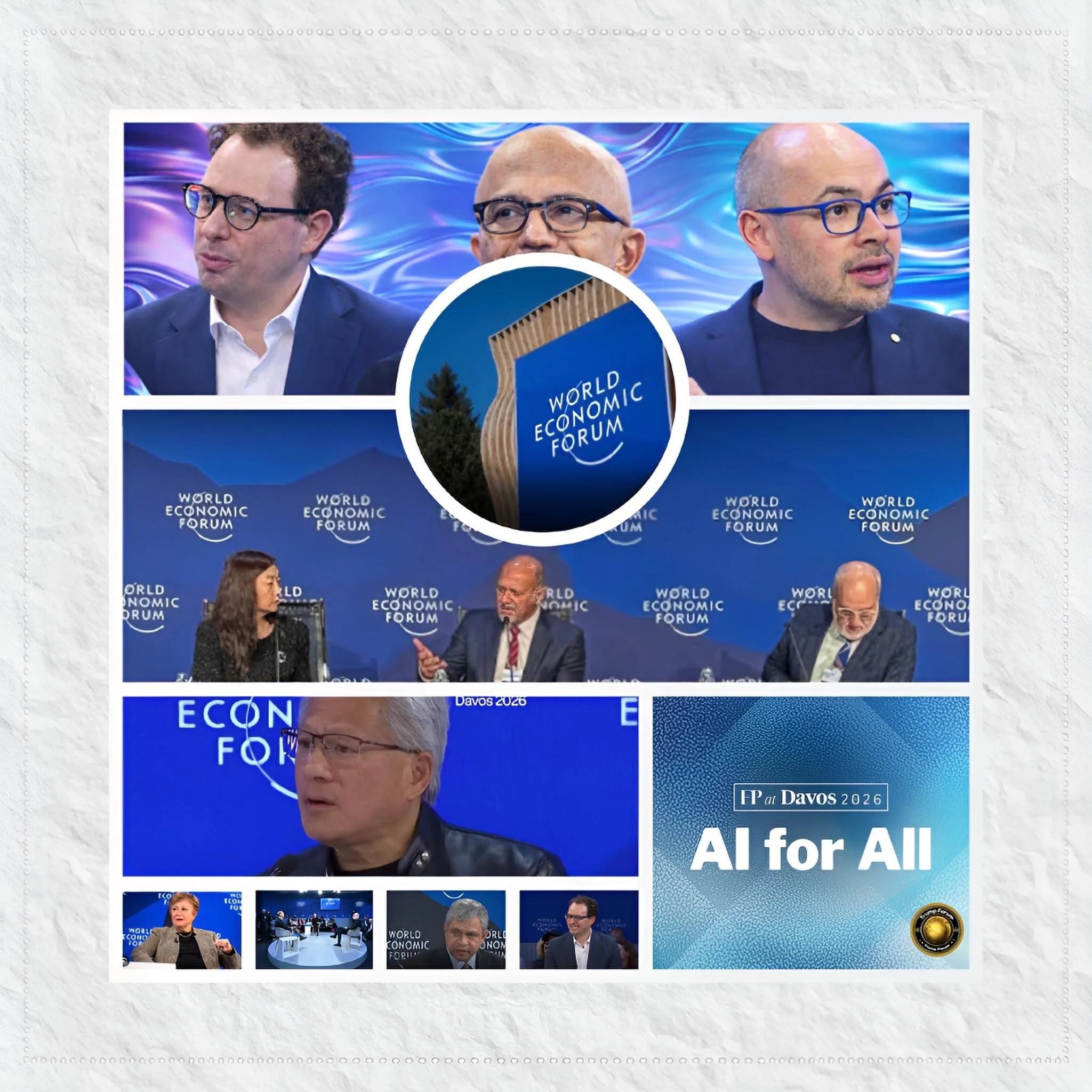

Top AI Leaders at Davos 2026: What They Said and Why It Matters

Executive Summary

Davos 2026 brought together major figures in AI, including Jensen Huang of NVIDIA, Satya Nadella of Microsoft, and Elon Musk, to discuss where AI is heading. They shared views on how AI is changing jobs, why it needs much more energy, and what safety risks it raises. This article explains, in simple words with examples, who spoke, how the conversation got here, the key new ideas, the concerns raised, how these issues connect, what should happen next, and what it all means.

Introduction

Every year, leaders meet in Davos, Switzerland, for the World Economic Forum. In 2026, AI was the dominant topic. Tech executives, researchers, and policymakers discussed how AI is changing the world. For example, sessions like "Ethics in AI" had experts debating whether AI can be trusted. The meeting showed that AI is no longer just a technology story; it now shapes daily life, work, and rivalries between nations.

Think of Davos as a town hall meeting for world leaders, held in the mountains. Instead of local problems, they discuss major global issues. This year, everyone wanted to talk about AI because it is moving so fast and affecting everything from your job to competition and conflict between countries.

History and Current Status

Discussions of AI at Davos started small. Back in 2018, they were about basic machine learning, like computers recognizing photos. By 2023, tools like ChatGPT (released in late 2022) had sparked widespread excitement. Now, in 2026, AI is everywhere. NVIDIA's chips power large data centers. Microsoft's tools help office workers write emails faster. For example, a doctor can use AI to spot diseases in X-rays more quickly.

Think of AI's growth like the growth of smartphones. In 2005, few people had smartphones. By 2010, many did. By 2015, they were everywhere. AI today is roughly where smartphones were around 2015: growing fast and becoming part of everyday life.

Leaders like Huang described AI as a massive construction project, similar to building new power plants. When electricity was new, countries had to build power grids everywhere; AI now needs a similar buildout of data centers. Microsoft's Nadella said companies see work get done about 30% faster when they use AI, meaning each person can get through noticeably more work in the same amount of time.

Key Developments

Big news came from the top speakers. Elon Musk told Larry Fink that by 2030, AI will be more intelligent than all humans combined. He also suggested building AI data centers in space, powered by solar energy. Think of it this way: today, data centers like Google's draw huge amounts of electricity from power plants on the ground. In space there are no clouds, and in the right orbit solar panels can sit in sunlight almost all the time, which could power computers up there.

Demis Hassabis from Google DeepMind talked about AI solving scientific problems, such as developing new medicines faster. In the past, scientists could spend years working out the shape of a single protein. Now AI can predict many of these shapes in hours. For example, designing a drug often starts with knowing the shape of the protein it targets, and AI makes that first step much faster.

Dario Amodei from Anthropic said we are getting close to extremely capable AI but need rules to govern it. Consider a knife: it is useful for cooking but dangerous in the wrong hands. Amodei argued that AI needs similar safeguards.

Yann LeCun explained that current AI still does not understand the physical world well. A child sees an apple fall and learns about gravity. Today's AI systems read enormous amounts of text but don't truly understand how the world works. LeCun wants AI to learn more the way children do, by observing the world.

Yoshua Bengio and Yuval Noah Harari asked whether more computing power is enough or whether we need fundamentally better designs. Think of it this way: building more cars doesn't make any single car faster; for that you need a better engine. The same may be true of AI. More computers might not produce smarter AI; we might need different designs.

Andrew Ng pushed for open-source AI, meaning freely shared code and models, so countries like India can take part without having to start from scratch. Right now, many of the most capable AI tools are controlled by a few large companies such as Google. Ng argues that sharing these tools helps poorer countries catch up, so everyone benefits from AI, not just wealthy nations.

Latest Facts and Concerns

Among the facts shared: Jensen Huang said AI data centers together use as much power as some small countries. A single data center for AI training can use about 100 megawatts, roughly the electricity needed by a city of 100,000 people.

Satya Nadella said companies see work get done about 30% faster with AI, for example salespeople closing deals more quickly. Imagine a salesperson who usually visits 10 clients a week. With AI helping them prepare, they could see about 13 clients a week (30% more) and do better work with each one.

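Demis Hassabis gave an example of AI designing proteins for new drugs. Scientists trained AI on large numbers of known protein structures, and it learned the patterns that link a protein's building blocks to its shape. Now researchers are starting to ask AI to design proteins for specific jobs, such as targeting a particular disease. Traditional drug development usually takes years and can cost billions of dollars; AI could shorten the early design stage to weeks.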

There were worries too. Max Tegmark and Meredith Whittaker warned that AI could undermine people's ability to make their own informed choices, for example through fake videos designed to sway elections. Imagine someone creates a video of your country's president saying things they never said. It's fake, made by computers, but people believe it. That is dangerous for elections.

Rachel Botsman raised concerns about trust. Think of it this way: you trust a doctor partly because they trained for years. But how do you trust an AI doctor you have never met, and what happens if it makes mistakes? People tend to fear what they don't understand.

The IMF's Kristalina Georgieva said about 40% of jobs might be affected, much as cashier jobs changed when self-checkout machines arrived. But new jobs also appear. Fifty years ago, "web developer" didn't exist; today it employs millions of people. Still, losing a job you're good at is hard, even if new jobs appear elsewhere.

Cause-and-Effect Analysis

Things connect in chains. More powerful chips, such as NVIDIA's, make AI faster, which leads to smarter chatbots. But running that AI takes huge amounts of energy and money, so only a few rich countries and companies can easily afford it. That is why Saudi leaders called for sharing AI worldwide, including giving tools to poorer countries. Here's the chain: Better chips → Faster AI → More energy needed → Risk that only the rich benefit → Sharing needed → Fairness.

A lack of shared rules creates risks. The US and China are racing to build the best AI, and each is building its own separate systems without coordinating with the other, much like the walls that divided the world during the Cold War. If these AI systems end up with different values, they could come into conflict, like two opposing armies.

Job changes happen because AI can now do thinking work, not just physical work. A lawyer used to write every contract from scratch; now AI can produce a first draft. One effect is new jobs such as "AI trainer" (someone who helps teach and test AI systems). Another is that some people fall behind without retraining, like many factory workers in the 1990s. Because the new jobs are often not in the same places as the old ones, some workers are left worse off.

Future Steps

What to do next: create global rules, such as AI safety treaties, so that companies like Anthropic build systems to shared safety standards. Think of airlines: common international rules let planes from different countries fly safely in the same skies.

Share technology openly. As Andrew Ng argued, if countries like India contribute to open-source AI, a few big companies cannot own everything. Smartphones offer a parallel: Apple made the iPhone, but Google released Android as open-source software, and suddenly many companies could make phones. That competition helped everyone.

Train workers. Schools need to teach how to use AI alongside reading. When cars arrived, people learned to drive; now people need to learn how to work with AI.

Solve the energy problem, whether through Musk's idea of space-based data centers or more solar power on Earth. Countries need solar and wind power to run AI computers. Think of it like switching from coal to wind: it's cleaner and more sustainable in the long run.

Conclusion

Davos 2026 showed that AI leaders agree massive changes are coming, but those changes can be guided well. With careful steps, AI could help everyone, for example through better health care and less poverty. Leaders like Huang and Nadella were hopeful, provided we act now.

The key point: AI is as powerful as electricity. Electricity changed everything—jobs, homes, hospitals. Some people feared it. But civilization managed it. We can manage AI the same way—by planning, sharing, protecting workers, and staying safe. Davos 2026 showed world leaders taking this seriously.