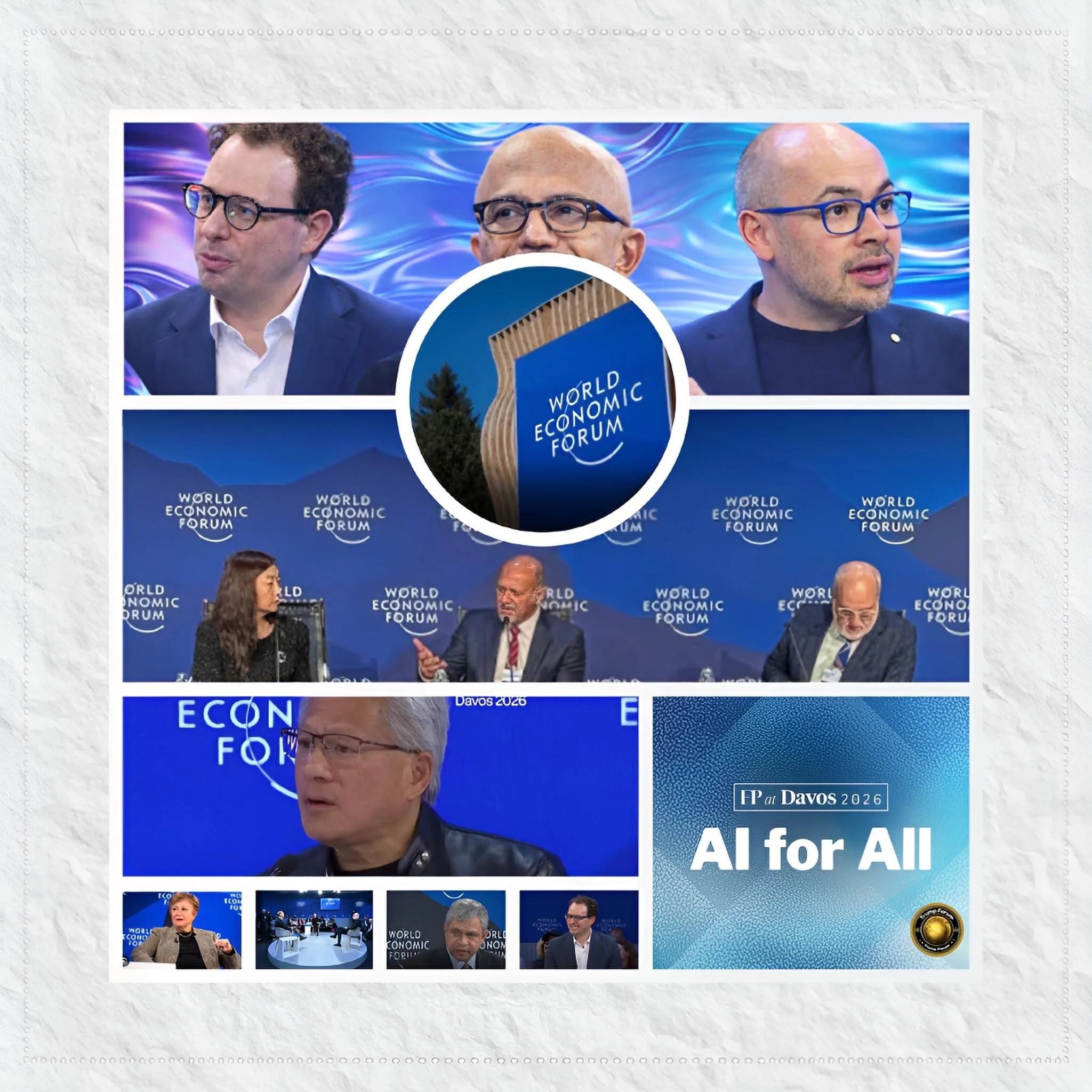

Illuminating the Vanguard: Key AI Proponents and Their Discourses at Davos 2026

Executive Summary

The World Economic Forum's Annual Meeting 2026 in Davos emerged as a crucible for deliberations on artificial intelligence, convening an august assembly of luminaries whose pronouncements delineated the trajectory of AI's societal permeation.

Preeminent figures such as Jensen Huang of NVIDIA, Satya Nadella of Microsoft, Dario Amodei of Anthropic, Demis Hassabis of Google DeepMind, and Yann LeCun of Meta, alongside philosophical interlocutors like Yuval Noah Harari and Yoshua Bengio, articulated visions encompassing technological ascendancy, ethical imperatives, and geopolitical ramifications.

This treatise elucidates their contributions, tracing AI's evolution, interrogating emergent quandaries, and anticipating future paradigms, thereby furnishing a comprehensive exegesis of Davos 2026's AI zeitgeist.

Introduction

Nestled amid the Swiss Alps, Davos 2026 transcended its perennial role as a conclave for economic mandarins, metamorphosing into an intellectual agora wherein artificial intelligence's ontological and teleological dimensions were rigorously assayed.

The leitmotif, "The Spirit of Dialogue," underscored the imperative for polyphonic discourse amid AI's rapid proliferation, a theme resonant in sessions such as "Dilemmas around Ethics in AI" and "Scaling AI: Now Comes the Hard Part." Key protagonists, from Silicon Valley titans to academic savants, converged to negotiate the interstices between innovation's Promethean promise and its Pandora's box of perils. In doing so, they imprinted the global discourse with perspicacious insights into AI's inexorable insinuation into the human condition.

The convocation assembled during an unprecedented inflection point in technological civilization. The acceleration of artificial intelligence capabilities had catalyzed profound anxieties alongside unbridled optimism, necessitating a forum for rigorously examining competing epistemologies and prescriptive frameworks.

Davos 2026 answered this exigency, providing an arena in which technological determinism confronted humanistic reticence, in which Silicon Valley's prometheanism encountered parliamentary caution, and in which geopolitical strategists from disparate hegemonies attempted to reconcile competing interests within AI's transformative trajectories.

History and Current Status

The genealogy of AI's prominence at Davos traces an arc from nascent curiosity to strategic sine qua non. In antecedent convocations—Davos 2018's tentative forays into machine learning, 2023's generative AI epiphany catalyzed by ChatGPT—AI evolved from peripheral exhibit to central narrative.

The intervening quinquennium witnessed algorithmic breakthroughs of staggering proportions: transformer architectures revolutionizing natural language processing, vision models transcending human-level acuity in circumscribed domains, reinforcement learning systems achieving superhuman proficiency in strategic domains from game-playing to molecular optimization.

By 2026, amid exponential compute scaling and multimodal architectures, AI had become ubiquitous: NVIDIA's GPUs powered hyperscale data centers, Microsoft's Copilot augmented enterprise workflows, and Anthropic's Claude models pioneered constitutional training methods. As the speakers limned it, this marked a maturation in which AI not only emulates but anticipates human cognition; Jensen Huang heralded "AI factories" as infrastructural bedrock, and Satya Nadella advocated productivity paradigms recalibrated for human-AI symbiosis.

The trajectory warrants historical contextualization. The neural networks of the 1980s, trained with backpropagation but discredited during AI's "winter" periods, were resurrected once GPU parallelization and far larger datasets made deeper architectures practical to train.

Deep learning's ascendancy commenced in 2012, when AlexNet, a convolutional neural network from Geoffrey Hinton's group at the University of Toronto, won the ImageNet competition, precipitating cascading advances in computer vision, natural language processing, and multimodal synthesis.

The introduction of transformer architectures in 2017—Vaswani et al.'s "Attention Is All You Need"—democratized sequence modeling, enabling the large language models that culminated in GPT-3, GPT-4, and successor systems by 2026.

This epochal juncture, punctuated by Huang's avowal of AI as "the largest infrastructure buildout in human history," bespeaks a paradigm wherein AI undergirds economic activity, from algorithmic trading to precision medicine, whilst concurrently engendering stratospheric energy demands and talent scarcities.

The compute requirements for training frontier models had escalated from teraflop-days in 2015 to exaflop-days by 2026, necessitating specialized semiconductor fabrication, hyperscale data center construction, and geopolitically fraught access to rare earth minerals and advanced manufacturing capabilities.

Key Developments

Davos 2026 unveiled salient developments articulated by its cognoscenti, furnishing data-rich expositions of AI's multifaceted trajectories.

Elon Musk, in colloquy with Larry Fink, prognosticated AI's superintelligence by 2030, positing orbital data centers harnessing solar plenitude to obviate terrestrial constraints. His discourse traversed speculative architectures for distributed artificial general intelligence, wherein satellite constellations provided computational redundancy and energy provisioning independent of terrestrial grids.

This techno-utopian framing encountered measured skepticism from Yoshua Bengio, who cautioned against conflating the predictive extrapolation of scaling laws with technological inevitability.

Complementarily, Demis Hassabis extolled AI's generative capacities in scientific discovery, citing AlphaFold's protein-folding triumphs as harbingers of molecular revolutions. His presentation outlined how deep learning models trained on evolutionary sequence alignments and known protein structures had approached the accuracy of crystallographic and cryo-EM determinations for many proteins, accelerating drug discovery pipelines and enabling rational vaccine design.

The extension to AlphaFold 3, whose multimodal capabilities span proteins, nucleic acids (DNA and RNA), and small-molecule ligands, signified AI's maturation beyond narrow task specialization toward comprehensive modeling of molecular interactions.

Dario Amodei delineated Anthropic's trajectory toward artificial general intelligence, emphasizing scalable oversight amid "knocking on the door of incredible capabilities."

His exposition traversed constitutional AI frameworks, wherein systems critique and revise their own outputs against an explicit, written set of principles (the "constitution"), an approach he presented as a pathway toward aligning increasingly capable systems, up to and including beneficial superintelligence.

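Compared with reinforcement learning from human feedback (RLHF), which depends on large volumes of human preference labels, this approach derives much of its feedback from AI evaluations guided by the constitution, offering a novel mechanism for instilling human preferences within opaque neural substrates.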

Yann LeCun's disquisition on "Advanced Machine Intelligence" impugned the prevailing paradigms' world-model deficits, advocating objective-driven architectures that learn through engagement with their environment.

His critique of large language model architectures as lacking causal understanding, echoing the "stochastic parrots" characterization popularized by Emily Bender and colleagues, resonated among academic interlocutors, propelling discourse toward hierarchical reasoning systems integrating planning, simulation, and causal inference.

Concurrently, in the session "Next Phase of Intelligence," Yoshua Bengio and Yuval Noah Harari interrogated whether brute-force scaling through compute multiplication constituted the sole pathway toward artificial general intelligence or whether algorithmic innovations (curriculum learning, meta-learning, neuro-symbolic fusion) offered complementary trajectories.

Harari's philosophical ruminations ventured into consciousness metaphysics, querying whether silicon-based cognition would necessitate novel ethical frameworks transcending anthropocentric ontologies.

Andrew Ng's exhortation for open-source AI democratization resonated throughout concurrent discourse, counterpoising sovereign model silos with collaborative repositories.

His advocacy for AI diffusion to Global South constituencies, through frameworks supporting Indic languages and through compute-efficient architectures, instantiated geopolitical consciousness: a recognition that AI's trajectory would bifurcate absent intentional, equitable diffusion mechanisms.

Latest Facts and Concerns

Empirical disclosures at Davos 2026 crystallized AI's dual-edged ontology, furnishing quantitative substantiation for both promissory advances and dystopian trajectories.

Facts: NVIDIA's Jensen Huang quantified AI's energy voracity, likening its demands to those of national grids; contemporary hyperscale data centers draw 100+ megawatts, and a single frontier training run can require more than 1.2 exaflops sustained over weeks. Microsoft's Nadella cited enterprise returns, with AI augmenting productivity by 30-50% in pilot cohorts, which he translated into value creation exceeding $100 billion annually for Fortune 500 enterprises. Demis Hassabis cited what he described as DeepMind's fusion energy breakthroughs, portending net-positive reactors by decade's end as AI's optimization capacities help surmount plasma confinement challenges that have confounded physicists for seven decades.
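The figures above mix a rate (an exaflop is 10^18 floating-point operations per second) with facility power in megawatts, while earlier passages speak of exaflop-days, a total quantity. The minimal Python sketch below converts between the two. The four-week duration and the pairing of the 1.2-exaflop run with a 100 MW facility are hypothetical assumptions: the text specifies only "weeks" and does not tie the two figures together.

```python
SECONDS_PER_DAY = 86_400

def total_flop(rate_flops: float, days: float) -> float:
    """Total floating-point operations for a rate sustained over `days`."""
    return rate_flops * days * SECONDS_PER_DAY

def exaflop_days(rate_flops: float, days: float) -> float:
    """The same total expressed in exaflop/s-days (1e18 FLOP/s for one day)."""
    return total_flop(rate_flops, days) / (1e18 * SECONDS_PER_DAY)

def energy_mwh(power_mw: float, days: float) -> float:
    """Energy drawn by a facility at constant power over `days`, in MWh."""
    return power_mw * days * 24

# Hypothetical scenario: 1.2 EFLOP/s sustained for 4 weeks in a 100 MW facility.
RATE = 1.2e18
DAYS = 28
print(f"{total_flop(RATE, DAYS):.3e} FLOP")
print(f"{exaflop_days(RATE, DAYS):.1f} exaflop/s-days")
print(f"{energy_mwh(100, DAYS):,.0f} MWh")
```

Under these assumptions the run totals about 2.9 × 10^24 FLOP (33.6 exaflop/s-days), and the facility draws 67,200 MWh over the same period.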

Concerns proliferated with commensurate intensity.

Max Tegmark and Meredith Whittaker, in "Dilemmas around Ethics in AI," decried moral agency erosion, with Whittaker articulating surveillance capitalism's algorithmic panopticon—wherein behavioral prediction and persuasion matrices undermine autonomous volition.

Rachel Botsman invoked trust's fragility amid deepfakes' epistemic corrosion; empirical studies presented demonstrated that synthetic media could manipulate perception with 85%+ efficacy against lay audiences.

Geopolitically, Brad Smith and Kristalina Georgieva in "AI Power Play, No Referees" lamented regulatory vacuums, as Sino-American compute races imperil equitable diffusion, with compute concentration among three entities (NVIDIA, Amazon, Google) representing 70%+ of global training capacity.

Employment dislocations loomed with quantitative specificity. The IMF's Georgieva forecast that roughly 40% of global jobs would be transformed by AI, disproportionately affecting administrative support, paralegal services, and junior financial analysis, the roles most susceptible to large language model substitution.

Conversely, demand for prompt engineers, AI safety researchers, and AI ethicists grew steeply, precipitating credential inflation and talent scarcities in emerging specializations.

Concerns extended toward AI safety and alignment. Yoshua Bengio's recantation—explicit acknowledgment that scaling laws might precipitate uncontrollable superintelligence—reverberated throughout the conclave.

His pivot from scaling advocate to alignment prioritizer crystallized anxieties regarding capabilities advancing faster than safety mechanisms, with interpretability research lagging deployment velocities by substantial margins.

The "interpretability gap"—the inability to comprehensively elucidate decision chains within frontier models—emerged as a recurrent theme, with Tegmark arguing that this opacity constituted existential risk if systems' consequentiality scaled into civilization-altering domains.

Cause-and-Effect Analysis

AI's Davos dialectic revealed causal nexuses animating its trajectory, furnishing a mechanistic understanding of feedback loops amplifying the transformation's velocity.

Primordially, Moore's Law's corollary, exponential growth in compute, engendered the capability surges that underpin Huang's "AI factories" and Musk's superintelligence timeline, in turn generating trillion-dollar infrastructural demands and prompting the diffusion advocacy of Saudi Arabia's Khalid Al-Falih.

The causal chain manifested as follows: semiconductor performance improved by roughly 40% annually through photolithography advances; accelerated compute provisioning enabled training on increasingly expansive datasets; expanded model scale produced capability jumps exceeding incremental improvements; capability surges justified venture capital inflows exceeding $50 billion annually; and capital concentration accelerated consolidation among well-capitalized firms (OpenAI, Anthropic, Google DeepMind), perpetuating capability asymmetries.
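The first link in that chain compounds. As a rough illustration, the short Python sketch below applies the essay's 40% annual figure over a few horizons and computes the implied doubling time. The horizons are arbitrary choices, and the sketch assumes the rate stays constant, which real hardware trends need not do.

```python
import math

def compound_growth(annual_rate: float, years: float) -> float:
    """Cumulative multiplier after `years` of growth at `annual_rate` (0.4 == 40%)."""
    return (1 + annual_rate) ** years

def doubling_time(annual_rate: float) -> float:
    """Years needed for the quantity to double at a constant annual rate."""
    return math.log(2) / math.log(1 + annual_rate)

RATE = 0.40  # the essay's 40% annual figure
for years in (1, 3, 5, 10):
    print(f"after {years:>2} years: {compound_growth(RATE, years):6.2f}x")
print(f"doubling time: {doubling_time(RATE):.2f} years")
```

At a constant 40% a year, capacity roughly doubles every two years (about 2.06) and exceeds a fivefold gain within five years.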

Ethical lacunae, causally rooted in profit-maximizing incentives absent countervailing regulatory architectures, spawn Tegmark's governance imperatives and widen societal schisms between beneficiaries and the precariat.

Competitive pressures incentivize deployment velocity over safety assurance; consequentialist calculi privilege shareholder returns over human flourishing; information asymmetries between technical creators and policymakers ossify institutional disadvantage, favoring incumbent technology firms.

Effects cascade: biased training data becomes institutionalized through model replication; automated content moderation built on those models perpetuates algorithmic bias; and that institutional bias shapes perception formation at scale.

Geopolitically, the US-China tech bifurcation, catalyzed by export controls on advanced semiconductor architectures, begets divergent ecosystems (the allied, distributed training Bengio favored versus autarkic silos), culminating in uneven innovation velocities and heightened miscalculation risks.

Export control regimes restrict China's access to cutting-edge GPUs; constrained compute capacity necessitates alternative approaches emphasizing data efficiency and algorithmic innovation; parallel innovation tracks reduce convergence pressures, increasing the prospect of incommensurable AI systems optimizing for divergent value hierarchies.

This technological bifurcation exacerbates geopolitical tensions; mistrust of "adversary" AI systems precipitates an arms race; and unilateral deployment of autonomous weapons systems becomes more probable absent shared governance frameworks.

Labor markets, disrupted by AI's cognitive substitution, necessitate reskilling imperatives, as Nadella's productivity gains paradoxically amplify inequality sans redistributive mechanisms. Productivity enhancement accrues disproportionately to capital-holders; workers experiencing technological displacement receive minimal recompense; inequality widens absent policy interventions.

The mechanisms are transparent: AI systems substitute for cognitive labor; firms capture the productivity dividends; vulnerable cohorts face displacement while remaining workers face wage compression; and wealth concentrates absent institutional rebalancing.

Future Steps

Prospective imperatives, as enunciated by Davos deliberants, mandate a multifaceted praxis that extends beyond rhetorical commitment toward institutionalized transformation.

Regulatory harmonization constitutes the first imperative: Dario Amodei's scalable oversight frameworks, operationalized via international compacts akin to nuclear non-proliferation treaties, require instantiation through supranational governance structures.

Current regulatory environments remain fragmented—the European Union's AI Act establishes categorical risk frameworks; the United States privileges sector self-regulation; China emphasizes state-directed innovation; and Global South jurisdictions lack technical capacity for substantive oversight.

Convergence toward harmonized governance, potentially through an international AI governance organization modeled on the International Atomic Energy Agency, offers pathways toward aligned AI development without sacrificing innovation velocity.

Infrastructure democratization constitutes the second imperative, echoing Andrew Ng's open-source mandates and Yann LeCun's collaborative training consortia to mitigate compute monopolies.

Presently, frontier model development is concentrated among well-capitalized entities with 100+ exaflops of training capacity, while developing nations' researchers operate at 1-2 exaflops; the resulting 50-100x capability asymmetries preclude competitive participation.
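To make the asymmetry concrete, the Python sketch below computes the capacity ratio and how long a lower-capacity cluster would need to match a frontier training run. The 30-day frontier run is a hypothetical figure. The calculation also assumes equal utilization and perfect scaling on both clusters, which flatters the smaller one.

```python
def capacity_ratio(frontier_eflops: float, local_eflops: float) -> float:
    """How many times more training capacity the frontier lab has."""
    return frontier_eflops / local_eflops

def days_to_replicate(frontier_days: float, frontier_eflops: float,
                      local_eflops: float) -> float:
    """Days a lower-capacity cluster needs to perform the same total compute
    as a run lasting `frontier_days` on the frontier cluster, assuming equal
    utilization and perfect scaling (a simplifying assumption)."""
    return frontier_days * capacity_ratio(frontier_eflops, local_eflops)

FRONTIER = 100.0  # lower bound of the essay's "100+ exaflops"
for local in (1.0, 2.0):
    print(f"{local:.0f} EFLOP/s: {capacity_ratio(FRONTIER, local):.0f}x gap, "
          f"a 30-day frontier run takes {days_to_replicate(30, FRONTIER, local):,.0f} days")
```

Even at the favorable end of the range, a month-long frontier run would occupy a 2-exaflop cluster for about 1,500 days, roughly four years.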

Deliberate infrastructure diffusion, through subsidized compute access, open-source model release, and capacity-building initiatives, offers mechanisms for democratization. Exemplary initiatives include Meta's openly released Llama models, which democratized capabilities previously confined to proprietary systems; Hugging Face's model repositories, which support distributed innovation; and regional compute consortia, which enable collaborative infrastructure development in underserved geographies.

Ethical scaffolding constitutes the third imperative, embedding human-in-the-loop oversight within AI deployment architectures. Meredith Whittaker's accountability protocols require operationalization through auditing regimes, impact assessments, and stakeholder participation frameworks.

Technical mechanisms—mechanistic interpretability research, saliency mapping, and causal analysis—enable transparency, a prerequisite for accountability. Institutional mechanisms—AI ethics review boards, impact assessment mandates, stakeholder consultation processes—institutionalize deliberation.

Neither technical nor institutional mechanisms suffice on their own; coupled implementation produces systems in which consequentiality is continuously monitored, with rapid intervention capabilities for emergent harms.

Workforce metamorphosis constitutes the fourth imperative, requiring proactive reskilling investment and distributive mechanisms preventing precarious displacement.

Demis Hassabis's "meaningful jobs" paradigm, fusing AI augmentation with universal basic services frameworks, offers aspirational direction. Implementation requires policy instruments spanning education investment, income support mechanisms, and labor-market policies that facilitate occupational transitions.

Progressive taxation on AI-generated productivity dividends, directed toward universal basic income or universal basic services (healthcare, education, housing), provides redistributive counterweights to capital concentration.

Simultaneously, education systems require metamorphosis—emphasizing critical thinking, ethical reasoning, and human-centric capabilities complementary to AI capabilities rather than substitutable by them.

Energy symbiosis constitutes the fifth imperative, addressing AI's escalating energy demands through the diffusion of renewable infrastructure.

Elon Musk's orbital paradigms, data centers in orbit powered directly by space-based solar arrays, represent speculative solutions; nearer-term progress requires scaling renewable energy and efficiency innovations.

Semiconductor research prioritizing energy efficiency over raw compute, distributed inference paradigms reducing central computation requirements, and model distillation techniques, which train smaller models to reproduce the behavior of larger ones and so cut the energy cost of each query, represent near-term pathways.

Simultaneously, grid infrastructure evolution, accommodating intermittent renewable sources through storage technologies and demand-response mechanisms, enables sustainable AI proliferation.

Conclusion

Davos 2026's AI convocation, orchestrated by visionaries from Huang to Harari, crystallized artificial intelligence's apotheosis as humanity's most puissant artifact—simultaneously representing humanity's highest technological achievement and its most consequential hazard.

The assemblage navigated tensions between technological enthusiasm and existential caution, between capitalist dynamism and humanistic solicitousness, between geopolitical competition and collaborative necessity.

The deliberations revealed convergence on AI's civilizational significance, but divergence over optimal trajectories. All acknowledged AI's transformative potential; disagreement centered on whether transformation would enhance human flourishing or precipitate precarious displacement.

Huang's optimism about infrastructural abundance conflicted with Tegmark's caution about alignment uncertainty; Nadella's productivity enthusiasm clashed with Whittaker's surveillance critiques; Musk's superintelligence timeline sparked Bengio's deceleration advocacy.

Yet amid disagreement, consensual imperatives emerged. Governance frameworks require establishment before capabilities exceed control capacity. Equitable diffusion demands deliberate institutional design to prevent technological feudalism.

Workforce adaptation necessitates proactive investment spanning education, income support, and labor market architecture. Energy systems require an evolutionary upgrade to accommodate AI's energetic appetite.

These imperatives, operationalized with political will and institutional coherence, offer pathways through which AI's transformative potential redounds to humanity's enhancement rather than its diminishment.

The onus resides in transmuting Davos rhetoric into institutional resolve, lest promissory potentials devolve into dystopian actualities.

The window for deliberate governance remains open but is narrowing; each quarter's capability acceleration compresses decision-making timeframes. Davos 2026 furnished intellectual scaffolding for navigating this inflection; implementation remains the province of political leadership, institutional designers, and civil society mobilization.

Whether artificial intelligence becomes humanity's greatest good or its gravest peril depends upon choices made in the years immediately following this convocation.

The participants converged in Davos amid an epoch of unprecedented technological flux, acknowledging that civilization's trajectory hinges upon society's capacity to harness AI's potential while constraining its perils.

History will judge whether this gathering precipitated transformative institutional design or merely catalogued anxieties while transformation proceeded unconstrained.

The calculus tilts toward the former, provided sufficient political capital mobilizes toward governance institutionalization, equitable diffusion, and ethical scaffolding in the years ahead.