Understanding Nvidia's Feynman Chip: How a 2028 Innovation Could Change AI Forever

Summary

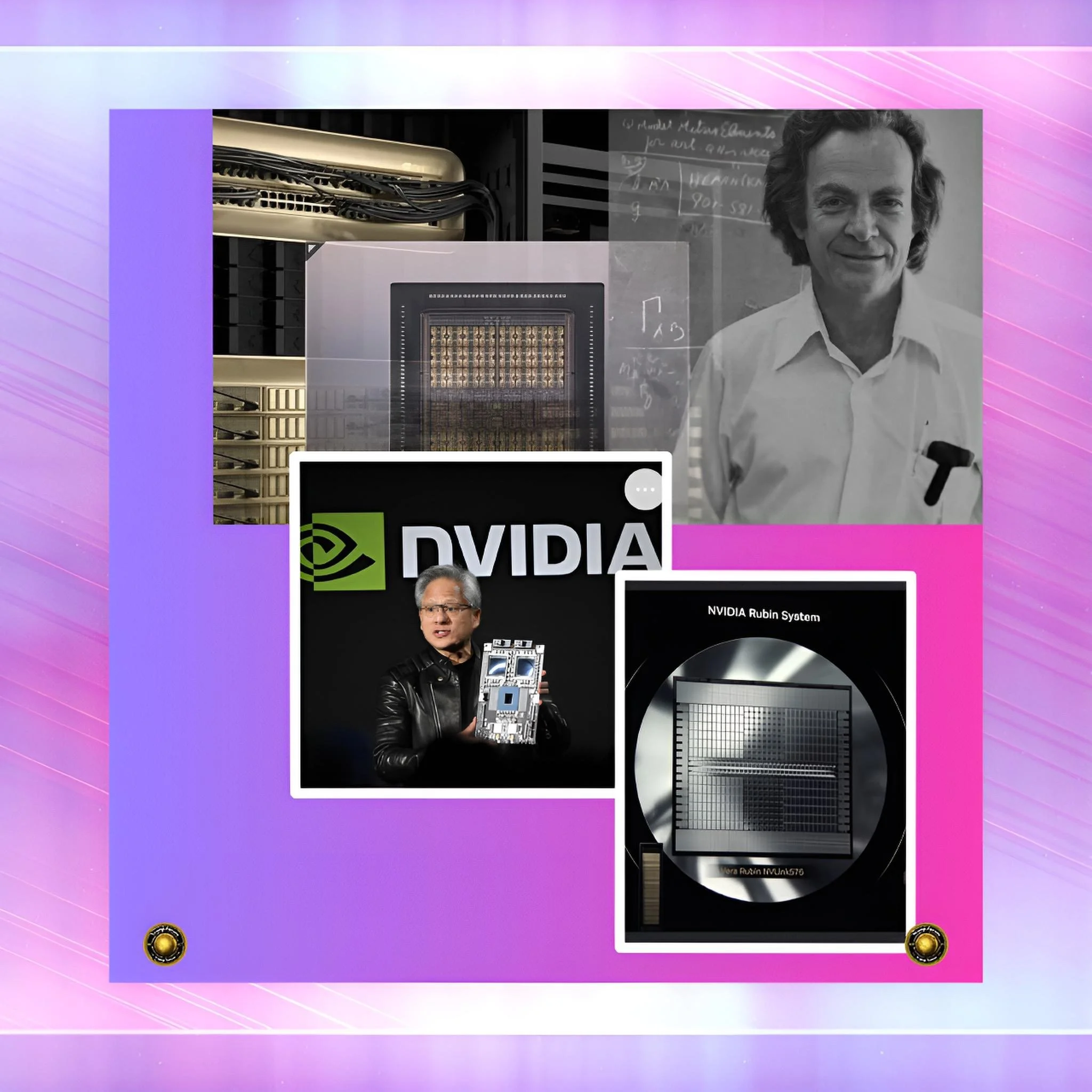

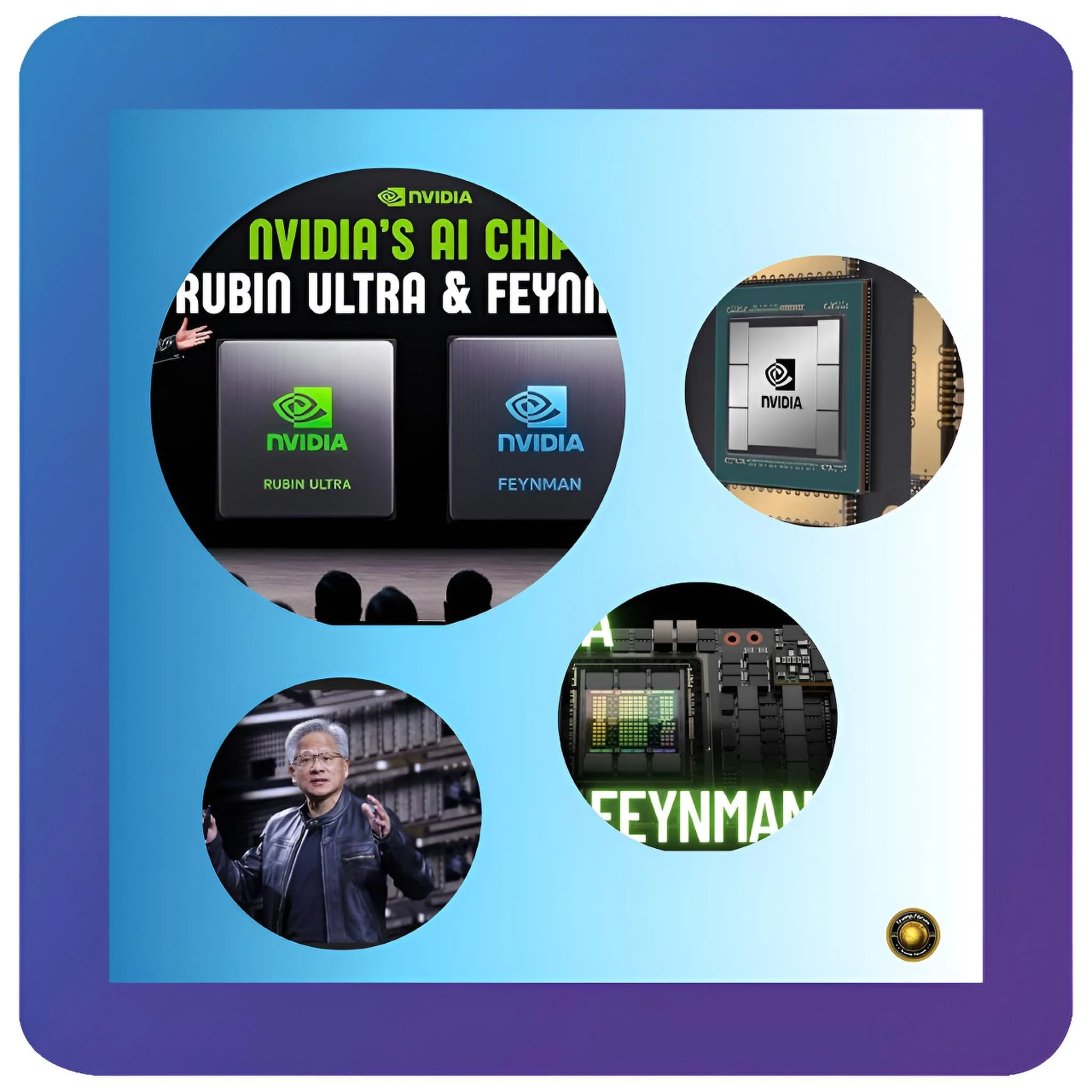

What Is Nvidia Doing with Feynman?

NVIDIA has announced a new computer chip design called Feynman that will arrive in 2028.

To understand why this is important, imagine you own a sports car with a potent engine.

That engine can theoretically reach tremendous speeds. But there is one problem: the fuel tank is tiny, and the fuel pump is slow. So even with that powerful engine, your car is limited not by engine power but by how quickly fuel can reach the engine.

That is precisely what is happening in the world of artificial intelligence computing right now.

NVIDIA's graphics processors are powerful at performing calculations, but they are limited by how quickly they can fetch data from memory.

The Feynman chip solves this problem by essentially building a better fuel-delivery system.

The Memory Problem in Simple Terms

Think about doing your job at a desk. Imagine your desk is tiny, but you constantly have to reach for a filing cabinet across the room to get documents. You can read documents very fast, but you waste a lot of time walking back and forth to the filing cabinet.

Your productivity is limited not by your ability to read but by how far away the filing cabinet is. This is the memory wall problem in computer chips.

Current AI computer chips like Nvidia's Blackwell can do trillions of calculations per second. But getting the information needed for those calculations takes a long time relative to how fast the chip can operate.

It is like your brain can process information incredibly fast, but your eyes can only see so much at once. The bottleneck is getting the information to the processor, not processing the data once it arrives.

This problem is especially problematic for AI inference, the process of using an already-trained AI model to answer questions or generate text.

When you use ChatGPT to write an email, that is inference. Your question goes to a computer with an AI model already stored in memory.

That computer has to repeatedly load pieces of the model from storage into the processor to answer your question.

The bigger the AI model, the longer this takes. For huge models with a trillion parameters (pieces of information), this can take a very long time.

What Makes Feynman Different

Feynman solves the memory wall problem by restructuring how the chip stores and accesses information.

Here is an analogy. Imagine you have a chef who prepares meals. Instead of storing all ingredients in a cold storage room down the hallway, the chef keeps frequently used ingredients in small containers on the counter.

Yes, there is less capacity on the counter than in cold storage, but the chef can work much faster because the frequently used ingredients are immediately available.

Feynman does something similar with computer memory.

It uses a technology called three-dimensional stacking to store frequently used data in ultra-fast memory directly on the chip itself, right next to the processors.

The rest of the model stays in the larger but slower external memory, like ingredients in the cold storage room.

When the chip needs data, it first checks the fast on-chip memory. If the data is there, it gets it instantly. If the data is not there, it goes to the slower external memory.

Because AI models repeatedly use specific data, this approach is incredibly efficient.

The Technology Behind Feynman

To make three-dimensional stacking practical, Feynman uses a manufacturing process called TSMC's A16.

This process node is made by Taiwan Semiconductor Manufacturing Company, the world's most advanced chip manufacturer.

What makes A16 special is something called backside power delivery.

Think of a multi-story building with electrical wiring. Usually, all the wiring runs up the walls and through the floors, taking up valuable space. Backside power delivery is like running all the electricity through a separate system behind the walls instead of inside the building. This frees up space inside the building for other uses.

For Feynman, moving power delivery to the back of the chip frees up enormous space on the front, where the actual computing happens.

This space allows engineers to build the three-dimensional memory stack directly on top of the processors. Without backside power delivery, this would be physically impossible because power lines would take up too much space.

Another significant change is that Feynman will use ultra-low precision arithmetic. Modern computers typically use 32 bits to represent a number, providing very high accuracy.

Feynman can use four-bit numbers for much of its work. This is like using a rougher map—you lose some detail but still get where you are going, and it is much faster and uses less energy.

Researchers have shown that AI models perform well with this lower precision, especially for inference.

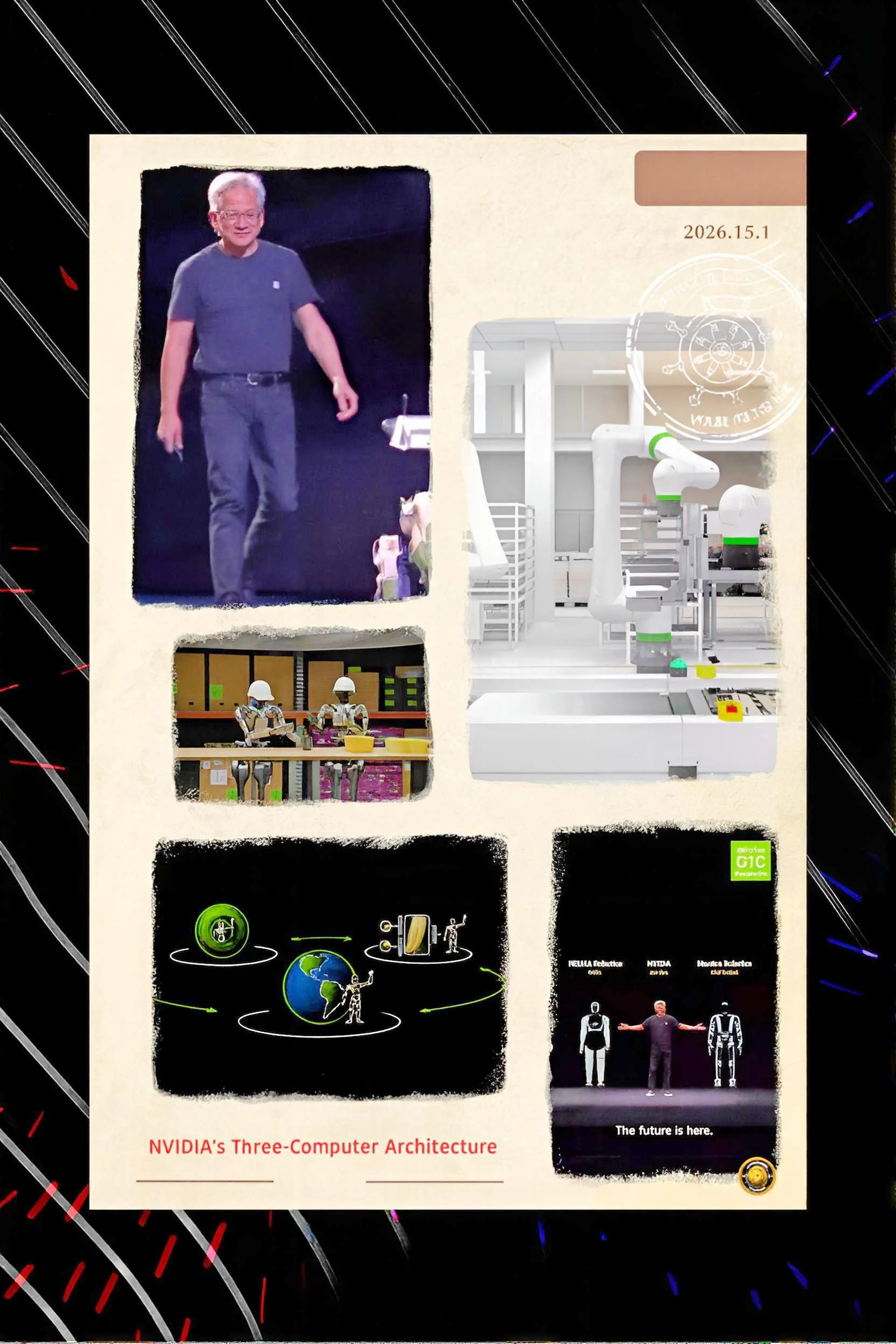

Why This Matters for AI Development

The efficiency improvements in Feynman are genuinely revolutionary.

Current systems lose as much as $5 billion annually on AI inference because running large models at scale is too expensive.

OpenAI, probably the most successful AI company in the world, loses money on inference.

Google, Amazon, and Microsoft all struggle with inference costs. Feynman could change this equation by reducing the cost of running an AI model by 10 times or more.

This cost reduction could enable entirely new classes of AI applications. Right now, only the largest companies and wealthiest users can afford to run AI for everyday tasks. If the cost drops by ten times, suddenly thousands of applications become economically viable.

Hospitals could use AI more extensively for diagnosis. Schools could use personalized AI tutoring. Small businesses could deploy AI customer service. The democratization of AI could happen not because of brilliant new algorithms but because Feynman made it affordable.

Competition and What Comes Next

AMD, Intel, and other companies are working on competing chips.

AMD's MI355X is actually quite competitive in inference efficiency. But Feynman arrives at a crucial moment: it uses the absolute newest manufacturing technology (TSMC A16) before most competitors. This gives Nvidia a one- to two-year performance advantage. By the time AMD and Intel produce similar designs, Nvidia will likely already be working on the next generation.

This pattern repeats across generations of chips. NVIDIA has dominated AI chips since 2016, and Feynman is likely to extend that dominance through 2030 and beyond. The company makes so much money from AI chips that it can invest heavily in research and development, making it very hard for competitors to catch up.

The Infrastructure Limits

One important caveat: Feynman's efficiency does not solve all problems. A full rack of Feynman systems might use 1.2 megawatts of electricity.

The United States needs tens of gigawatts of new data center power by 2028 to handle all the AI infrastructure being built. But the electrical grid is not expanding fast enough, and permitting for new power plants takes years. Water for cooling is also becoming scarce in some regions.

So even though Feynman is incredibly efficient, the actual deployment of these chips may be limited by infrastructure—power availability, cooling, and water—not by the chips themselves.

Conclusion

The Chip That Could Make AI Affordable: What Nvidia's Feynman Means for Your Future

This is an unusual situation. For the first time in the history of technology, the constraint on AI advancement might not be what engineers can invent but what the electrical grid can support.