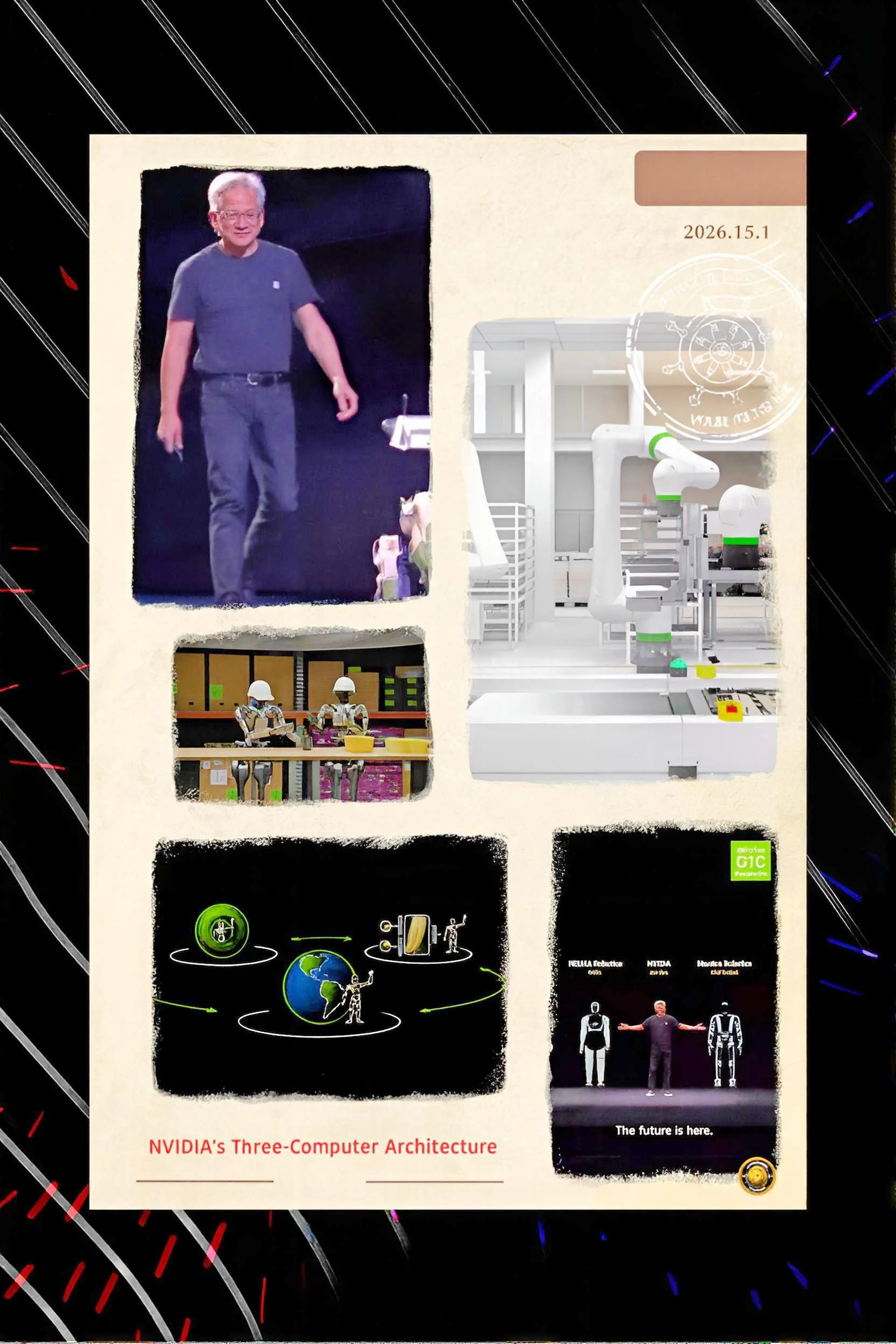

Understanding NVIDIA's Three-Computer Architecture for Physical AI Systems

Executive Summary

The convergence of artificial intelligence, robotics, and digital simulation technologies has emerged as a defining paradigm for the development of autonomous systems capable of perceiving, reasoning, and acting within complex physical environments.

NVIDIA has architected a comprehensive three-computer solution that fundamentally restructures the lifecycle of robotic system development, spanning training, simulation, and real-world inference.

This examination explores the technical architecture, theoretical foundations, contemporary implementations, and implications of this integrated ecosystem, which comprises advanced training supercomputers operating on the Blackwell architecture, sophisticated simulation environments built on the Omniverse platform with Cosmos world foundation models, and edge-deployed inference accelerators embodied in the Jetson AGX Thor processor.

Through detailed analysis of foundational technologies including the Isaac GR00T vision-language-action models, the Isaac Lab GPU-accelerated simulation framework, and the Cosmos Transfer synthetic data generation pipeline, this article establishes how NVIDIA's integrated approach addresses fundamental challenges in embodied artificial intelligence while simultaneously illuminating persistent constraints and future trajectories for the robotics industry.

Introduction

The emergence of physical artificial intelligence represents a significant evolutionary step in computing capabilities, extending artificial intelligence beyond digital domain constraints into the material world where robots must interact with dynamic, unpredictable environments.

Traditional approaches to robotic system development have relied upon extensive hand-coding, rigid programming paradigms, and prohibitively expensive real-world experimentation. This methodology constrains scalability, limits adaptability, and restricts the sophistication of tasks that autonomous systems can execute.

The fundamental challenge confronting contemporary robotics research involves bridging the gap between model training conducted in controlled laboratory environments and reliable performance in unstructured real-world operational contexts where sensor noise, environmental variability, and unexpected contingencies continuously emerge.

NVIDIA's proposed solution introduces a fundamentally different conceptualization of robotic system development architecture. Rather than treating training, simulation, and deployment as sequential, isolated phases, this integrated approach recognizes these phases as interdependent components of a unified computational pipeline.

The three-computer framework establishes specialized hardware and software stacks optimized for distinct operational phases, yet maintains seamless data flow and methodological consistency across the entire development lifecycle.

This architectural approach aligns with emerging consensus within the robotics research community regarding the necessity of large-scale simulation, synthetic data generation, and distributed training for advancing robotic autonomy beyond narrow, task-specific implementations toward generalized capabilities applicable across diverse embodiments and environmental contexts.

The significance of this architectural innovation extends beyond incremental performance improvements. The economic implications are substantial: according to NVIDIA's assessments, the addressable market for physical AI robotics spans approximately fifty trillion dollars when encompassing industrial automation, autonomous vehicles, and humanoid systems across global manufacturing infrastructure.

The technical barriers limiting commercialization of advanced robotic systems have historically centered upon three interconnected constraints: insufficient training datasets, limited simulation fidelity, and computational bottlenecks during real-world inference.

NVIDIA's three-computer solution directly addresses each constraint through specialized hardware components and integrated software ecosystems specifically engineered for the unique demands of physical AI development.

History and Current Status

The intellectual foundations underlying NVIDIA's three-computer architecture emerge from decades of computational research spanning multiple domains.

The fundamental insight driving this architectural approach derives from advances in deep learning, particularly the discovery that transformer-based architectures and vision-language models can be trained on large-scale diverse datasets to acquire capabilities that generalize across task contexts.

This principle, validated through successes in natural language processing and computer vision, suggested that similar methodologies could potentially extend to embodied artificial intelligence when combined with sufficient simulation infrastructure and synthetic data generation capabilities.

NVIDIA's entry into robotics development accelerated significantly following the March 2024 announcement of Project GR00T, designated formally as Generalist Robot 00 Technology.

This initiative represented a strategic commitment to developing foundation models specifically architected for humanoid robot systems, moving beyond the company's previous emphasis on providing hardware acceleration for robotics research conducted by external institutions.

The GR00T project introduced both the vision-language-action models that form the cognitive foundation of robotic autonomy and, concurrently, the supporting ecosystem of simulation and training infrastructure necessary to create such models at scale.

The recent trajectory demonstrates rapid technological maturation. The GR00T N1 foundation model, released in March 2025, has evolved through incremental updates including the N1.5 variant, which demonstrated substantially improved environmental adaptation and object recognition capabilities.

The most recent N1.6 iteration, released at the Consumer Electronics Show in January 2026, incorporated advanced capabilities for whole-body control, enabling humanoid robots to execute simultaneous manipulation and locomotion tasks with substantially improved dexterity and environmental responsiveness.

The progression from the Project GR00T announcement to N1.6 spans approximately two years of development and architectural refinement, reflecting the intensive engineering effort required to advance foundation models from research prototypes to production-capable systems.

The supporting infrastructure evolved in parallel. The Isaac Sim simulation platform reached version 5.0 in 2025, incorporating substantially improved physics simulation accuracy, photorealistic rendering capabilities, and integration with the newly released Cosmos world foundation models.

Isaac Lab, originally released in mid-2024 as the successor to Isaac Gym, achieved version 1.2 by November 2024, establishing itself as the de facto standard framework for GPU-accelerated robot learning and policy training within the broader research ecosystem.

The orchestration of these components—foundation models, simulation platforms, and training frameworks—into a coherent integrated system represents the culmination of NVIDIA's strategic repositioning from hardware vendor to comprehensive robotics development platform provider.

Key Developments

The architectural foundation of NVIDIA's three-computer solution comprises three specialized subsystems, each addressing distinct phases of the robotic development lifecycle.

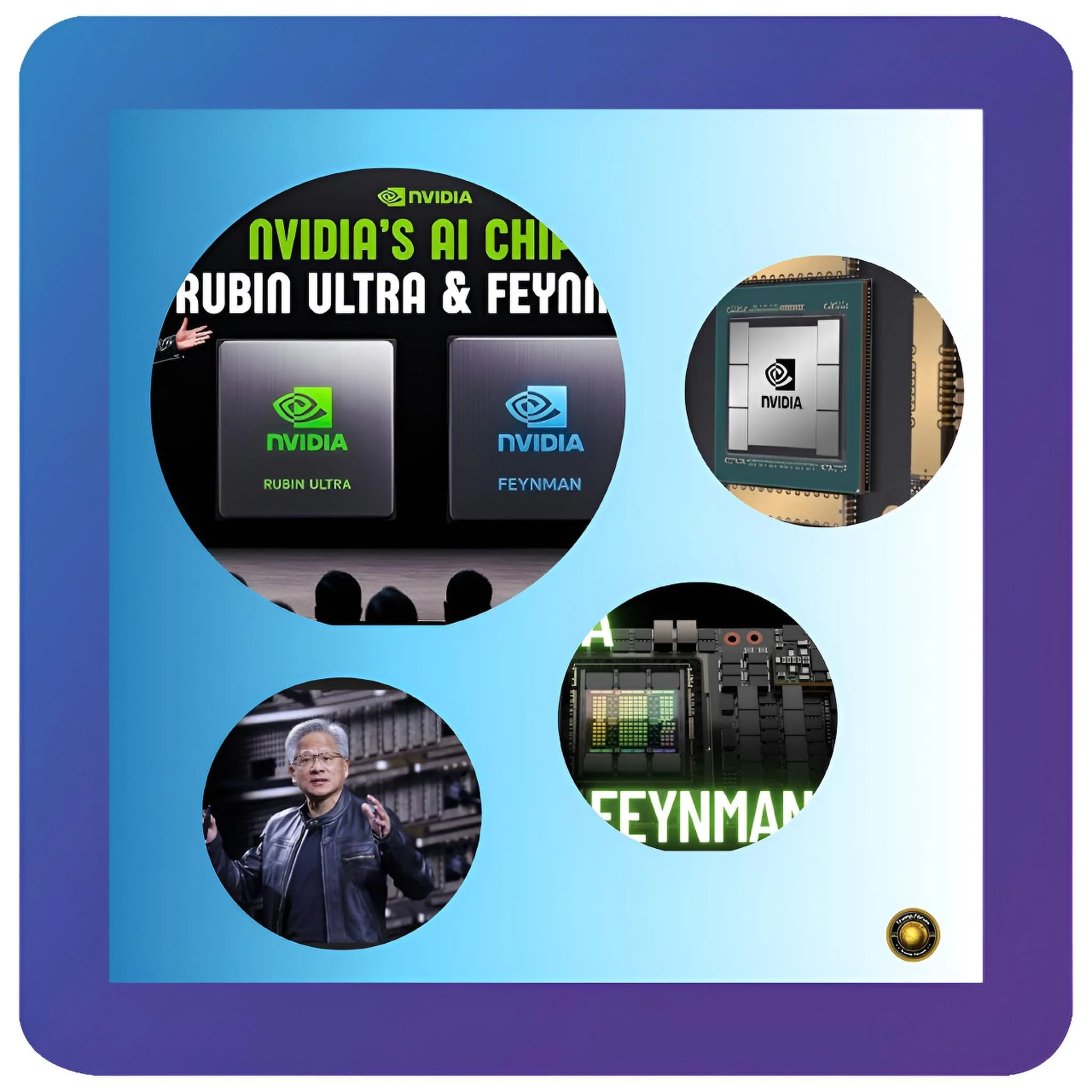

The training supercomputer represents the first component, exemplified by the NVIDIA DGX B200 system, which integrates eight Blackwell-architecture graphics processors delivering a combined one hundred forty-four petaflops of FP4 performance.

The DGX B200 incorporates one thousand four hundred forty gigabytes of total graphics memory with sixty-four terabytes per second of aggregate memory bandwidth, specifications that enable training of very large foundation models with many billions of parameters.

The architectural significance of the Blackwell generation extends beyond raw performance metrics to encompass innovations in precision scaling and tensor computation, particularly through introduction of FP4 floating-point operations that enable computational density previously unachievable while maintaining numerical precision sufficient for high-quality model outputs.
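As a rough check on what these figures imply, the sketch below divides the quoted system totals across the eight processors and estimates how many parameters would fit in memory if only the weights were stored. The bytes-per-parameter table and the helper names are illustrative assumptions rather than NVIDIA specifications, and the estimate deliberately ignores activations, gradients, and optimizer state.

```python
# System-level figures quoted in the text for one DGX B200.
NUM_GPUS = 8
TOTAL_FP4_PETAFLOPS = 144
TOTAL_MEMORY_GB = 1440
TOTAL_BANDWIDTH_TBPS = 64

# Assumed storage cost per parameter at common precisions, in bytes.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def per_gpu(total: float, num_gpus: int = NUM_GPUS) -> float:
    """Divide a system-level figure evenly across the GPUs."""
    return total / num_gpus


def max_params_billions(memory_gb: float, precision: str) -> float:
    """Upper bound on parameters (in billions) whose weights alone fit in memory.

    Takes 1 GB as 1e9 bytes. Real training needs far more memory per
    parameter, so treat this as a ceiling, not a capacity estimate.
    """
    return memory_gb / BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    print("memory per GPU (GB):", per_gpu(TOTAL_MEMORY_GB))
    print("FP4 petaflops per GPU:", per_gpu(TOTAL_FP4_PETAFLOPS))
    print("bandwidth per GPU (TB/s):", per_gpu(TOTAL_BANDWIDTH_TBPS))
    for precision in BYTES_PER_PARAM:
        print(precision, max_params_billions(TOTAL_MEMORY_GB, precision))
```

Under these assumptions each processor contributes 180 GB of memory and 18 petaflops of FP4 throughput, and halving the bytes per parameter doubles the weights-only ceiling, which is the density argument made for FP4 above.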

The training supercomputers execute the resource-intensive phase of foundation model development, wherein models must ingest enormous datasets comprising internet-scale video content, captured robotic demonstrations, and synthetically generated trajectories.

The GR00T N1 development process exemplifies the scale of this training phase: using the Isaac GR00T blueprint for synthetic data generation, NVIDIA researchers generated seven hundred eighty thousand synthetic motion trajectories in eleven hours, a volume equivalent to approximately nine continuous months of human demonstration data, and combined this synthetic data with real robot data to achieve a forty percent performance improvement relative to models trained exclusively on real-world data.

This dramatic efficiency gain illustrates the multiplicative benefit of combining synthetic data generation at scale with appropriately architected foundation models.
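The throughput implied by these figures can be worked out directly. The sketch below is back-of-the-envelope arithmetic only; it assumes a "continuous month" means thirty 24-hour days, which is an interpretation and not something the source states.

```python
# Figures quoted in the text.
TRAJECTORIES = 780_000
GENERATION_HOURS = 11
HUMAN_MONTHS = 9

# Assumption: one continuous month is 30 days of 24 hours.
HOURS_PER_MONTH = 30 * 24


def trajectories_per_hour() -> float:
    """Synthetic trajectories produced per wall-clock hour."""
    return TRAJECTORIES / GENERATION_HOURS


def human_equivalent_hours() -> float:
    """Hours of human demonstration the synthetic set is said to match."""
    return HUMAN_MONTHS * HOURS_PER_MONTH


def speedup_over_human_collection() -> float:
    """Ratio of human-equivalent hours to generation hours."""
    return human_equivalent_hours() / GENERATION_HOURS


def mean_trajectory_seconds() -> float:
    """Average trajectory length implied by the two totals."""
    return human_equivalent_hours() * 3600 / TRAJECTORIES


if __name__ == "__main__":
    print(f"{trajectories_per_hour():,.0f} trajectories per hour")
    print(f"{speedup_over_human_collection():.0f}x faster than continuous collection")
    print(f"{mean_trajectory_seconds():.1f} s per trajectory on average")
```

With the thirty-day assumption this works out to roughly 71,000 trajectories per hour, a speedup of a little under 600 times, and an average trajectory of about thirty seconds.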

The second component of the three-computer architecture comprises the simulation infrastructure, principally embodied in NVIDIA Omniverse with integrated Cosmos world foundation models running on RTX PRO Blackwell servers.

The Omniverse platform provides a collaborative simulation environment for constructing photorealistic digital twins of real-world environments, incorporating physics-accurate simulation through NVIDIA PhysX, sensor simulation including camera, lidar, and ultrasonic sensor models, and rendering of physically accurate light transport.

The architectural innovation enabling simulation-based training involves the integration of GPU-accelerated physics simulation with photorealistic rendering, enabling simultaneous high-speed parallelized simulation of thousands of robotic agents undergoing policy training while maintaining visual and physical fidelity comparable to real-world conditions.

The Cosmos ecosystem represents a recent major advancement in simulation capabilities, introducing world foundation models that address long-standing limitations in synthetic data generation and scene construction.

Cosmos Transfer 2.5, released in late 2025, accepts structured control inputs such as depth maps, segmentation masks, pose estimation data, and trajectory specifications, and generates corresponding photorealistic video sequences.

This capability fundamentally transforms the cost structure of robotic training by eliminating the necessity for painstakingly handcrafted or recorded training environments; instead, developers can rapidly generate diverse, environmentally varied training scenarios programmatically.

The distilled variant of Cosmos Transfer accomplishes the full synthetic data generation process in a single neural network inference pass, reducing what previously required seventy sequential processing steps to one, thereby enabling real-time interactive training workflows.

The third and final component comprises the edge-deployed inference hardware, centered upon the NVIDIA Jetson AGX Thor architecture specifically engineered for real-time robotic operation.

The Jetson AGX Thor integrates an NVIDIA Blackwell graphics processor with one hundred twenty-eight gigabytes of unified memory—a specification exceeding most contemporary discrete graphics cards by more than five-fold—within a power envelope of one hundred thirty watts.

This power constraint shaped the memory subsystem design: the module uses a unified LPDDR5x memory architecture rather than the separate memory pools of conventional discrete graphics cards, so the CPU and GPU access the same memory with latency profiles suitable for real-time robotic control applications.

The Jetson AGX Thor delivers two thousand seventy FP4 teraflops of artificial intelligence computational capacity, representing a seven point five-fold performance increase relative to the previous generation Jetson AGX Orin, while simultaneously achieving three point five-fold improvement in energy efficiency.
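The ratios quoted for Jetson AGX Thor can be cross-checked against one another. The sketch below derives the predecessor figures that the stated multipliers imply; these are consequences of the numbers in the text, not values taken from the Jetson AGX Orin datasheet, and the two generations may quote throughput at different precisions.

```python
# Figures quoted in the text for Jetson AGX Thor.
THOR_FP4_TFLOPS = 2070
THOR_POWER_W = 130
COMPUTE_GAIN = 7.5      # versus Jetson AGX Orin
EFFICIENCY_GAIN = 3.5   # versus Jetson AGX Orin


def thor_tflops_per_watt() -> float:
    """Peak throughput per watt at the full power envelope."""
    return THOR_FP4_TFLOPS / THOR_POWER_W


def implied_orin_tflops() -> float:
    """Predecessor throughput implied by the compute ratio."""
    return THOR_FP4_TFLOPS / COMPUTE_GAIN


def implied_orin_power_w() -> float:
    """Predecessor power implied by combining both ratios."""
    orin_efficiency = thor_tflops_per_watt() / EFFICIENCY_GAIN
    return implied_orin_tflops() / orin_efficiency


if __name__ == "__main__":
    print(f"Thor: {thor_tflops_per_watt():.1f} TFLOPS per watt")
    print(f"Implied Orin throughput: {implied_orin_tflops():.0f}")
    print(f"Implied Orin power: {implied_orin_power_w():.1f} W")
```

The two multipliers are mutually consistent only if the previous generation drew a little over sixty watts, which is a useful sanity check when comparing vendor ratios.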

Latest Facts and Concerns

The integration of NVIDIA's three-computer architecture into real-world robotic systems has progressed substantially beyond academic research demonstrations into commercial deployment phases.

Humanoid robots including the Boston Dynamics Atlas system, the 1X Neo Gamma, the Apptronik Apollo, and multiple Chinese-manufactured systems from Fourier Intelligence and XPENG have all adopted Jetson AGX Thor as the onboard compute foundation for real-time inference.

These deployments span diverse applications including industrial materials handling, autonomous facility maintenance, autonomous driving research, and advanced manufacturing contexts.

Hexagon's newly introduced AEON humanoid robot, developed explicitly in partnership with NVIDIA, represents a particularly illustrative case study; this system performs autonomous inspection, component scanning, and asset management across automotive, aerospace, manufacturing, warehousing, and logistics facilities, operating with autonomous perception and planning capabilities derived directly from the NVIDIA Isaac GR00T foundation models.

The Foxconn manufacturing facility currently under construction in Houston, Texas represents the most ambitious scaling effort for NVIDIA-based robotic systems to date. This facility will employ multiple autonomous humanoid robots for manufacturing NVIDIA's own graphics processors and accelerator systems.

The architectural approach for this deployment embraces full vertical integration, with facility design and operational workflows initially prototyped in simulation using NVIDIA Omniverse and the Mega industrial digital twin blueprint, then iteratively refined through reinforcement learning conducted within Isaac Lab before deployment to physical hardware.

This methodology demonstrates the maturity of the simulation-to-reality transfer process, though with important caveats regarding domain constraints and failure modes.

Contemporary concerns regarding the three-computer architecture cluster around several specific dimensions. The first concern involves the notorious sim-to-real transfer gap, a persistent challenge in robotic learning wherein policies trained in simulation frequently exhibit substantial performance degradation when executed on real hardware due to unmodeled or incompletely modeled physical dynamics.

Sources of this gap include inaccurate actuator dynamics, imperfect sensor models, inadequately simulated contact mechanics and friction properties, and differential response to environmental disturbances.

While NVIDIA's high-fidelity rendering and physics simulation substantially attenuate this gap relative to lower-fidelity alternatives, the gap remains a material constraint on practical deployment.

Domain randomization—deliberately introducing variation in simulated physical parameters across training episodes—partially mitigates this concern but introduces a secondary problem: if randomization ranges encompass values excessively distant from actual hardware characteristics, learned policies may exhibit brittle performance in real-world deployment.
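A minimal sketch of domain randomization follows, assuming a uniform distribution around hypothetical nominal values. The parameter names, the nominal numbers, and the `spread` argument are all illustrative; they do not correspond to Isaac Lab's configuration API. The `spread` value is the knob discussed above: the wider it is, the further sampled episodes can drift from the real hardware.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicsParams:
    friction: float    # coefficient of friction (dimensionless)
    mass_kg: float     # payload mass
    motor_gain: float  # actuator strength scale factor


# Hypothetical nominal values, as if measured on the real robot.
NOMINAL = PhysicsParams(friction=0.8, mass_kg=2.0, motor_gain=1.0)


def sample_params(rng: random.Random, spread: float) -> PhysicsParams:
    """Draw one episode's parameters uniformly within +/- spread of nominal."""
    if not 0.0 <= spread < 1.0:
        raise ValueError("spread must be in [0, 1)")

    def jitter(value: float) -> float:
        return value * rng.uniform(1.0 - spread, 1.0 + spread)

    return PhysicsParams(
        friction=jitter(NOMINAL.friction),
        mass_kg=jitter(NOMINAL.mass_kg),
        motor_gain=jitter(NOMINAL.motor_gain),
    )


def max_relative_deviation(params: PhysicsParams) -> float:
    """Largest fractional distance of any parameter from its nominal value."""
    return max(
        abs(params.friction / NOMINAL.friction - 1.0),
        abs(params.mass_kg / NOMINAL.mass_kg - 1.0),
        abs(params.motor_gain / NOMINAL.motor_gain - 1.0),
    )


if __name__ == "__main__":
    rng = random.Random(0)
    # Each training episode gets its own freshly sampled physics.
    episodes = [sample_params(rng, spread=0.2) for _ in range(5)]
    for params in episodes:
        print(params, round(max_relative_deviation(params), 3))
```

Seeding the generator keeps the randomized curriculum reproducible, which helps when comparing policies trained under different spreads.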

A second significant concern involves the economic accessibility and democratization constraints inherent in the three-computer architecture.

The DGX B200 training supercomputer carries capital costs approaching five hundred thousand dollars for a single unit, placing this infrastructure within reach only of large corporations, research institutions with substantial funding, or well-capitalized startups.

While NVIDIA offers DGX Cloud as an alternative enabling access through subscription-based pricing, this arrangement shifts the economic burden toward high operational costs and perpetual reliance upon NVIDIA's cloud infrastructure.
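The buy-versus-rent trade-off described here reduces to a break-even calculation. The sketch below uses the purchase figure from the text; the monthly cloud price and the monthly running cost of owned hardware are hypothetical placeholders, not published NVIDIA prices.

```python
import math
from typing import Optional

# Figure quoted in the text for one DGX B200.
PURCHASE_COST_USD = 500_000


def break_even_months(
    purchase_cost: float,
    monthly_cloud_cost: float,
    monthly_owned_cost: float = 0.0,
) -> Optional[int]:
    """Months after which owning becomes cheaper than renting.

    Returns None when renting never costs more per month than running
    owned hardware, in which case ownership never pays off.
    """
    monthly_saving = monthly_cloud_cost - monthly_owned_cost
    if monthly_saving <= 0:
        return None
    return math.ceil(purchase_cost / monthly_saving)


if __name__ == "__main__":
    # Hypothetical: 40,000 USD per month rented, 5,000 USD per month
    # for power, cooling, and staff when owned.
    print(break_even_months(PURCHASE_COST_USD, 40_000, 5_000))
```

The calculation ignores depreciation, utilization, and financing costs, so it should be read as a first-order comparison only.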

The robotics developer community numbers approximately two million engineers, only a small fraction of whom have access to cutting-edge training infrastructure.

This concentration of resources risks creating a technological moat around advanced robotic capabilities, potentially constraining innovation and competitive dynamics in the robotics industry.

A third concern centers upon the dependency of the entire ecosystem upon data quality and diversity. The effectiveness of foundation models including GR00T derives fundamentally from training upon large, heterogeneous datasets incorporating real robot trajectories, human demonstrations, and synthetically generated scenarios.

The composition and quality of these datasets fundamentally determine the generalization capabilities of resulting models. While Cosmos Transfer enables synthetic data generation at unprecedented scale, the fidelity of synthetic data remains constrained by underlying simulation accuracy and the diversity of scenarios that developers choose to generate.

In domains involving complex contact dynamics, deformable objects, or fluid interactions, photorealistic rendering may exceed the fidelity of physical simulation, creating systematic biases in training data that degrade performance in real-world contexts.

Cause-and-Effect Analysis

The three-computer architecture produces observable effects across multiple dimensions of robotic system development economics, capabilities, and innovation trajectory. Causally, the availability of high-fidelity simulation infrastructure enables substantial reduction in the per-unit cost of generating training data.

Conventional data collection for robotic systems involves labor-intensive teleoperation, multiple trials, equipment wear, environmental disruption, and safety concerns.

A single hour of robotic demonstrations conducted in real-world contexts might require six to eight hours of human operator time when accounting for setup, equipment failures, safety protocols, and recovery procedures.

In contrast, simulation-based data generation can produce equivalent volumes of appropriately diverse scenarios in near-real-time, subject only to computational constraints.

This economic advantage amplifies significantly when combined with synthetic data generation through Cosmos Transfer, which can multiply diverse visual and environmental variations from a single simulated scenario, further reducing the effective per-unit cost of training data acquisition.
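The cost argument can be made concrete with two small helpers. The six-to-eight-hour overhead range comes from the text; the base cost and the number of visual variations per scenario in the usage example are hypothetical inputs chosen only to show the shape of the calculation.

```python
from typing import Tuple

# Operator hours needed per hour of usable real-world demonstration,
# using the range quoted in the text.
OPERATOR_HOURS_PER_DEMO_HOUR = (6, 8)


def operator_hours(demo_hours: float) -> Tuple[float, float]:
    """Low and high estimates of operator time for a demonstration target."""
    low, high = OPERATOR_HOURS_PER_DEMO_HOUR
    return demo_hours * low, demo_hours * high


def cost_per_sample(base_cost: float, variations_per_scenario: int) -> float:
    """Effective cost of one training sample after augmentation.

    Each simulated scenario is assumed to be expanded into several
    visually distinct variations at negligible extra cost.
    """
    if variations_per_scenario < 1:
        raise ValueError("need at least one variation per scenario")
    return base_cost / variations_per_scenario


if __name__ == "__main__":
    print(operator_hours(100))        # operator time for 100 demo hours
    print(cost_per_sample(10.0, 20))  # hypothetical cost and multiplier
```

The second helper assumes augmentation is effectively free, which overstates the saving when generating each variation consumes meaningful compute.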

The availability of abundant high-quality training data causally enables larger, more sophisticated foundation models with improved generalization properties. The GR00T family of models demonstrates this causal relationship: initial GR00T N1 models, trained on more limited datasets, exhibited performance constrained to approximately seventy percent success rates on standardized manipulation tasks.

Subsequent iterations, trained on progressively larger and more diverse datasets augmented through synthetic data generation, achieved success rates exceeding ninety percent on equivalent evaluation benchmarks.

The causal mechanism underlying this improvement involves increased exposure to task variations during training, enabling learned policies to develop more robust feature representations and decision boundaries that generalize more reliably across distribution shifts between training and test contexts.

The causal linkage between simulation infrastructure and innovation velocity manifests through reduced iteration cycles for robotic system development. In traditional robotics workflows, algorithm refinement required sequential cycles of real-world testing, error analysis, parameter adjustment, and re-evaluation.

Iteration cycles spanning days or weeks constrained the pace of algorithmic innovation. In contrast, Isaac Lab workflows run thousands of simulation episodes in parallel, with complete policy training cycles executing in hours rather than weeks.

This acceleration of iteration velocity directly translates to accelerated algorithmic advancement, enabling researchers to explore substantially larger design spaces and conduct more comprehensive ablation studies within fixed time constraints.
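The idea of stepping many environments in lock-step can be illustrated with a toy example. The sketch below is plain CPU Python with a hypothetical one-dimensional point-mass environment and a hand-written PD controller; it is not Isaac Lab's API and does no GPU work. It only shows the batched interface, in which one `step` call advances every environment at once.

```python
import random
from typing import List


class BatchedPointMassEnv:
    """Many independent 1-D unit point masses advanced in lock-step."""

    def __init__(self, num_envs: int, dt: float = 0.02, seed: int = 0):
        rng = random.Random(seed)
        self.dt = dt
        # Each environment starts at a different random position, at rest.
        self.pos = [rng.uniform(-1.0, 1.0) for _ in range(num_envs)]
        self.vel = [0.0] * num_envs

    def step(self, forces: List[float]) -> None:
        """Apply one force per environment using semi-implicit Euler."""
        for i, force in enumerate(forces):
            self.vel[i] += force * self.dt  # unit mass, so accel == force
            self.pos[i] += self.vel[i] * self.dt


def pd_policy(env: BatchedPointMassEnv, kp: float = 4.0, kd: float = 3.0) -> List[float]:
    """One action per environment, driving each mass toward the origin."""
    return [-kp * p - kd * v for p, v in zip(env.pos, env.vel)]


def rollout(num_envs: int, steps: int) -> float:
    """Run every environment for `steps` ticks; return the worst final error."""
    env = BatchedPointMassEnv(num_envs)
    for _ in range(steps):
        env.step(pd_policy(env))
    return max(abs(p) for p in env.pos)


if __name__ == "__main__":
    # 64 environments for 500 steps is 32,000 transitions from one loop.
    print(rollout(num_envs=64, steps=500))
```

On a GPU simulator the inner loop over environments becomes a single parallel kernel, which is where the wall-clock savings described above come from.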

The integration of inference-optimized hardware in the form of Jetson AGX Thor causally enables real-time multimodal perception and decision-making at the edge.

Previous generation edge compute solutions, constrained to lower computational densities, required simplification of perceptual models or offloading of computationally intensive reasoning tasks to external servers, introducing network latency and operational complexity.

The Jetson AGX Thor's computational capacity and unified memory architecture enable simultaneous execution of vision-language models, motion planning algorithms, and low-level control loops within deterministic latency constraints suitable for real-time robotic operation.

This architectural capability causally drives feasibility of increasingly autonomous robotic systems capable of handling real-time contingencies without external computational support.
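Running perception, planning, and control together on one device amounts to a multi-rate scheduling problem. The sketch below counts task activations on a one-millisecond tick and computes processor utilization; the task names, periods, and execution costs are hypothetical values chosen for illustration, not measurements from Jetson AGX Thor.

```python
from typing import Dict


def run_schedule(duration_ms: int, periods_ms: Dict[str, int]) -> Dict[str, int]:
    """Count how often each periodic task fires over a time window.

    A task with period p fires on every tick that is a multiple of p.
    """
    counts = {name: 0 for name in periods_ms}
    for tick in range(duration_ms):
        for name, period in periods_ms.items():
            if tick % period == 0:
                counts[name] += 1
    return counts


def utilization(periods_ms: Dict[str, int], cost_ms: Dict[str, float]) -> float:
    """Fraction of one processor consumed if tasks run back to back."""
    return sum(cost_ms[name] / periods_ms[name] for name in periods_ms)


if __name__ == "__main__":
    # Hypothetical rates: 1 kHz control, 50 Hz planning, 5 Hz vision-language.
    periods = {"control": 1, "planner": 20, "vlm": 200}
    costs = {"control": 0.1, "planner": 5.0, "vlm": 100.0}
    print(run_schedule(1000, periods))
    print(utilization(periods, costs))
```

A utilization below one is necessary but not sufficient for meeting deadlines on a single core; in practice the slow model would run on the GPU while the fast control loop is pinned to a CPU core so that it is never blocked.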

The availability of the complete ecosystem from training through deployment causally reduces technological barriers and capital requirements for organizations entering robotics development. Rather than requiring internal expertise spanning simulation infrastructure development, distributed training frameworks, edge optimization, and real-time inference optimization, organizations can leverage NVIDIA's integrated ecosystem, substantially reducing development timelines and capital investment required for initial prototype development.

This democratization effect, while constrained by capital costs of hardware infrastructure, has nonetheless catalyzed entry of robotics startups and established enterprises into application domains previously constrained by technical barriers.

Future Steps

The technological trajectory of NVIDIA's robotics ecosystem indicates multiple vectors of advancement under active development. The immediate near-term development priorities center upon expanding the breadth and sophistication of foundation models specifically tailored for distinct embodiments and task domains. While GR00T models focus upon humanoid morphologies, emerging challenges involve developing equivalent foundation models for quadrupedal locomotion, aerial platforms, manipulator arms, and hybrid configurations combining multiple morphologies.

The differentiation between morphologies involves not merely training distinct models but developing meta-learning approaches enabling rapid adaptation of foundation models to novel embodiments with minimal additional training.

A second development vector involves expanding the fidelity and scope of simulation infrastructure, particularly for domains involving complex dynamics inadequately represented in current physics engines.

Deformable objects, fluid dynamics, soft robotics, and contact-rich interactions with significant mechanical compliance remain challenging to simulate accurately.

NVIDIA's announced development of the Newton physics engine, conducted in collaboration with Google DeepMind and Disney Research, specifically targets these limitations, incorporating differentiable physics simulation enabling gradient-based optimization of robotic policies.

This development could narrow the sim-to-real transfer gap by enabling physics-informed optimization approaches, rather than relying exclusively upon domain randomization to bridge it.
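To show what gradient-based optimization through a simulator means, the sketch below differentiates a toy simulation with hand-rolled forward-mode automatic differentiation (dual numbers) and uses the gradient to tune a single control parameter. The one-dimensional dynamics, the drag coefficient, and all function names are illustrative assumptions; this is not the Newton engine or its API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A value together with its derivative with respect to one input."""
    val: float
    grad: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.val + other.val, self.grad + other.grad)

    __radd__ = __add__

    def __sub__(self, other):
        other = _lift(other)
        return Dual(self.val - other.val, self.grad - other.grad)

    def __mul__(self, other):
        other = _lift(other)
        # Product rule.
        return Dual(self.val * other.val,
                    self.val * other.grad + self.grad * other.val)

    __rmul__ = __mul__


def _lift(x) -> Dual:
    """Treat plain numbers as constants with zero derivative."""
    return x if isinstance(x, Dual) else Dual(float(x))


def simulate(thrust: Dual, steps: int = 100, dt: float = 0.01, drag: float = 0.5) -> Dual:
    """Final position of a unit mass pushed by constant thrust with linear drag."""
    pos, vel = Dual(0.0), Dual(0.0)
    for _ in range(steps):
        accel = thrust - drag * vel
        vel = vel + accel * dt
        pos = pos + vel * dt
    return pos


def optimise_thrust(target: float, iterations: int = 50, lr: float = 1.0) -> float:
    """Gradient descent on squared position error, using simulator gradients."""
    thrust = 0.0
    for _ in range(iterations):
        final = simulate(Dual(thrust, 1.0))  # seed d(thrust)/d(thrust) = 1
        error = final.val - target
        thrust -= lr * 2.0 * error * final.grad  # chain rule on error squared
    return thrust


if __name__ == "__main__":
    best = optimise_thrust(target=1.0)
    print(best, simulate(Dual(best)).val)
```

Because the derivative flows through every integration step, the optimizer needs no random sampling over the parameter, which is the contrast with domain randomization drawn above. Production systems typically use reverse-mode differentiation to handle many parameters efficiently.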

A third development trajectory involves expanding international collaboration and ecosystem integration.

The announcement of Europe's first industrial AI cloud infrastructure, developed through a partnership between Deutsche Telekom and NVIDIA, indicates plans to provide geographically distributed access to training and simulation infrastructure, potentially enabling regional clusters for robotics development across distinct geographic markets. Integration with Hugging Face's LeRobot framework establishes bidirectional coupling between NVIDIA's specialized robotics infrastructure and broader open-source machine learning communities, potentially accelerating innovation through knowledge exchange across domain boundaries.

The NVIDIA Rubin platform, announced at the Consumer Electronics Show 2026, introduces architectural innovations promising ten-fold reduction in inference token costs relative to the current Blackwell generation.

While Rubin development focuses primarily upon generative AI applications rather than specialized robotics workloads, the fundamental architectural innovations in compute efficiency and memory bandwidth directly apply to robotics inference scenarios, promising next-generation edge compute solutions enabling even more sophisticated autonomous behaviors within constrained power budgets.

Longer-term development vectors involve addressing the fundamental constraints limiting current approaches. The scaling laws governing foundation models suggest that model capabilities scale predictably with training compute, dataset size, and model parameters according to well-characterized mathematical relationships.

However, these scaling laws may not extend indefinitely; theoretical analyses suggest potential saturation effects beyond certain scales, potentially necessitating fundamentally novel approaches to advancing robotic autonomy.
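Scaling relationships of this kind are commonly modelled as a power law in compute plus an irreducible floor. The sketch below uses that functional form with hypothetical constants (the floor, coefficient, and exponent are placeholders, not fitted values for any robotics model) to show why returns diminish: with a small exponent, halving the reducible part of the loss takes an enormous increase in compute.

```python
def loss(compute: float, irreducible: float = 1.7,
         coeff: float = 10.0, exponent: float = 0.05) -> float:
    """Power-law loss: a floor plus a term that shrinks with compute.

    All default constants are hypothetical and chosen for illustration.
    """
    return irreducible + coeff * compute ** (-exponent)


def compute_multiplier_to_halve_excess(exponent: float) -> float:
    """Factor by which compute must grow to halve the loss above the floor.

    Solves (k * C) ** (-exponent) == 0.5 * C ** (-exponent) for k.
    """
    if exponent <= 0:
        raise ValueError("exponent must be positive")
    return 2.0 ** (1.0 / exponent)


if __name__ == "__main__":
    for c in (1e3, 1e6, 1e9):
        print(f"compute={c:.0e} loss={loss(c):.3f}")
    print(compute_multiplier_to_halve_excess(0.05))
```

The floor term is one simple way to represent the saturation effects mentioned above: once the power-law term is small relative to the floor, additional compute yields almost no measurable improvement.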

NVIDIA's research investments in embodied artificial intelligence, reasoning-focused models such as Cosmos Reason, and the integration of language understanding with perception and action planning represent early explorations of these advanced methodologies.

Conclusion

NVIDIA's three-computer architecture represents a substantial advancement in the infrastructure underlying physical artificial intelligence development, addressing fundamental constraints that have historically limited scalability and economic viability of robotic system development.

The integrated approach spanning specialized training supercomputers, sophisticated simulation environments, and edge-optimized inference platforms establishes an ecosystem enabling organizations to develop increasingly capable autonomous systems while managing capital and operational complexity.

The contemporary commercial deployments across humanoid robotics, industrial automation, and autonomous vehicle contexts validate the fundamental technical approach while simultaneously illuminating persistent challenges requiring continued research attention.

The most significant limitation of current approaches involves incomplete closure of the sim-to-real transfer gap, a constraint that remains material for mission-critical applications requiring guarantees of performance bounds.

While domain randomization, photorealistic rendering, and physics-informed optimization substantially ameliorate this limitation, the gap remains nonzero and must be actively managed through careful system design, conservative performance bounds, and ongoing validation protocols.

Organizations deploying NVIDIA-based robotic systems must recognize the probabilistic nature of learned behaviors and implement appropriate safeguards and monitoring mechanisms.

The concentration of training infrastructure and associated capital requirements creates a technological moat potentially constraining innovation and competitive dynamics in the robotics industry. While subscription-based cloud access partially addresses this concern, the fundamental economics of training large foundation models remain accessible only to organizations with substantial financial resources.

Future development of smaller, more efficient models targeted toward specific task domains might partially address this constraint, though the trade-off between model capability and efficiency remains an open research question.

The trajectory toward increasingly autonomous, general-purpose robotic systems appears robust and technically sound, with NVIDIA's ecosystem providing substantial infrastructure support for this progression. The economic opportunity represented by robotics applications spanning manufacturing, logistics, healthcare, and exploration domains justifies continued intensive investment in advancing the underlying technologies.

The convergence of simulation technology, synthetic data generation capabilities, foundation models, and specialized edge hardware creates a genuine inflection point in robotic system capabilities, with implications extending across industrial, commercial, and research domains globally.