The Engineering Intelligence Behind Next-Generation Autonomous Machines

Summary

Autonomous systems capable of complex manipulation, navigation, and environmental reasoning require computational infrastructure far beyond what conventional computing provides.

The robotics landscape is being transformed by architectural innovations that let machines acquire generalizable capabilities rather than skills tied to narrow task specifications.

NVIDIA's integrated technological ecosystem establishes computational foundations permitting rapid development, rigorous validation, and scalable deployment of robotic systems across heterogeneous industrial and commercial applications.

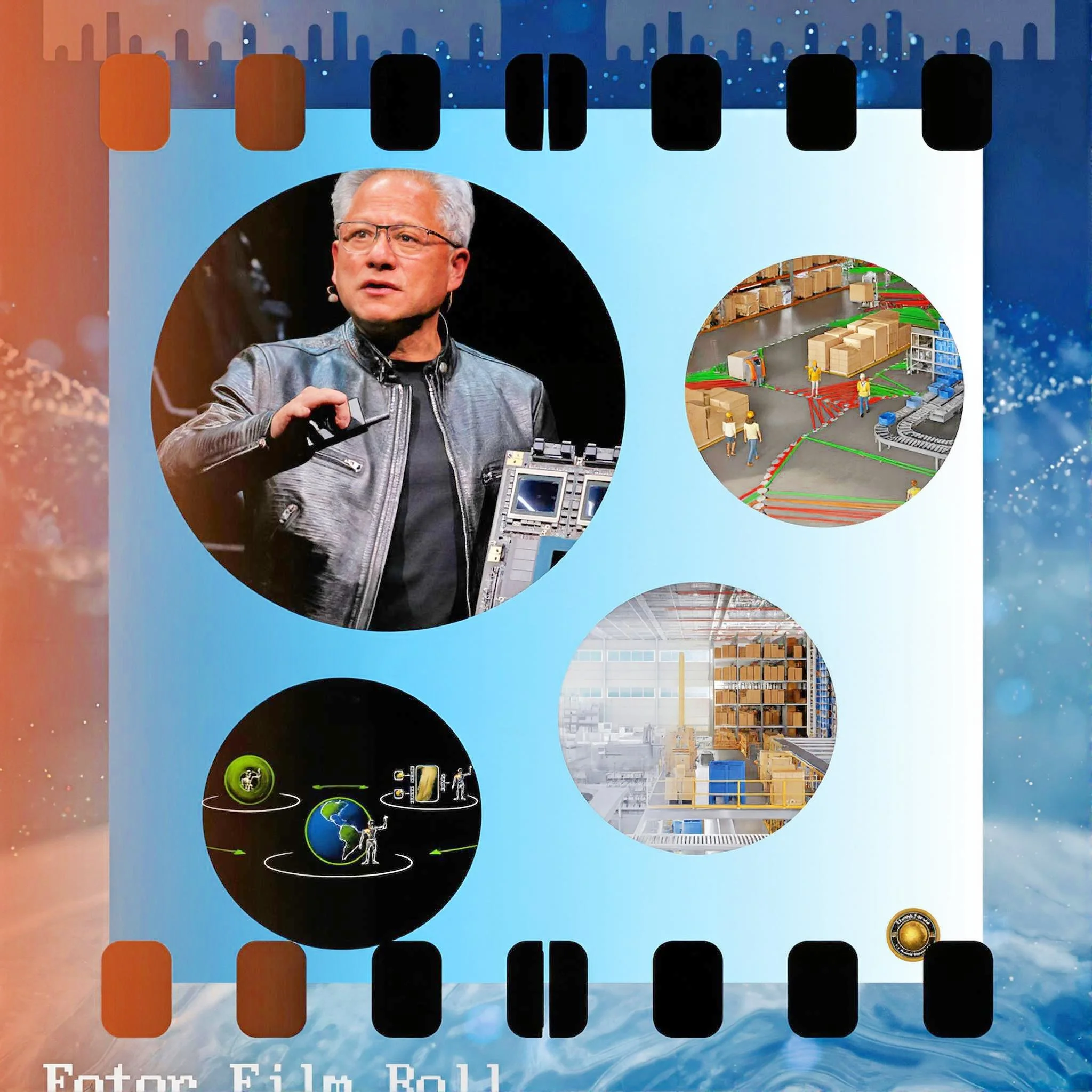

The fundamental innovation distinguishing contemporary approaches from preceding methodologies involves reconceptualization of the robotic development pipeline. Rather than sequential isolation of training, simulation, and deployment phases, integrated frameworks establish unified workflows maintaining methodological consistency and enabling seamless data interchange throughout development lifecycles.

This architectural coherence compounds efficiency gains across phases: because each phase consumes the previous phase's outputs directly, development accelerates while the capital required to build and deploy systems falls.

Training infrastructure represents the computational bedrock enabling development of sophisticated foundation models applicable across robotic embodiments.

The NVIDIA DGX B200 consolidates compute that previously required multiple discrete systems, integrating eight Blackwell-architecture GPUs in a single system designed for efficient multi-GPU training of very large models.

The system delivers 144 petaflops of peak FP4 performance and 1,440 GB of aggregate GPU memory with 64 TB/s of memory bandwidth. These specifications allow a single system to train models with billions of parameters, a workload previously associated with cloud-scale hyperscaler deployments.

The significance of these specifications extends beyond incremental performance gains. The fifth-generation Blackwell Tensor Cores use micro-tensor scaling, which assigns shared scale factors to small blocks of values, to make four-bit floating-point (FP4) arithmetic usable without unacceptable loss of model accuracy.
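
Micro-tensor scaling is a form of fine-grained block scaling. A minimal Python sketch of the general idea follows: values are quantized to the 4-bit E2M1 float grid, and each block of values shares one power-of-two scale, loosely following the OCP Microscaling (MX) pattern. The block size of 32, the scale rule, and all function names are illustrative assumptions, not NVIDIA's Blackwell implementation.

```python
import math

# Non-negative magnitudes representable by a 4-bit E2M1 float
# (1 sign bit, 2 exponent bits, 1 mantissa bit).
FP4_E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = FP4_E2M1_GRID[-1]


def quantize_block(block):
    """Quantize one block to FP4 codes that share a power-of-two scale."""
    amax = max(abs(x) for x in block)
    if amax == 0.0:
        return 1.0, [0.0] * len(block)
    # Smallest power of two that maps the block's largest magnitude into
    # the FP4 range, so no value in the block overflows.
    scale = 2.0 ** math.ceil(math.log2(amax / FP4_MAX))
    codes = []
    for x in block:
        magnitude = min(abs(x) / scale, FP4_MAX)
        # Round to the nearest representable FP4 magnitude, keep the sign.
        nearest = min(FP4_E2M1_GRID, key=lambda g: abs(g - magnitude))
        codes.append(math.copysign(nearest, x))
    return scale, codes


def quantize(values, block_size=32):
    """Split a flat list of values into blocks and quantize each block."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


def dequantize(blocks):
    """Reconstruct approximate values as code * shared block scale."""
    return [scale * code for scale, codes in blocks for code in codes]


weights = [0.1, -0.37, 2.5, 12.0]
restored = dequantize(quantize(weights))
print(restored)
```

Because each block has its own scale, a large outlier only coarsens the values in its own block. Here 0.1 and -0.37 share a block with 12.0 and round to zero, which is exactly the error that finer-grained scaling is meant to contain.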

Relative to FP8, halving the bits per value roughly doubles the model size that fits in a given memory budget and roughly doubles peak arithmetic throughput, with substantial effects on training efficiency and model sophistication. For organizations deploying such infrastructure, this translates to faster training, lower operating costs, and the ability to experiment with larger architectures within existing capital budgets.
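
The memory side of the FP4 claim is simple arithmetic. The sketch below counts how many parameters fit in the DGX B200's 1,440 GB at different precisions. It counts weights only (training also needs gradients, optimizer state, and activations, so trainable model sizes are much smaller), and the optional 8-bit scale per 32-value block is an assumed MX-style overhead.

```python
def bytes_per_param(bits, block_size=None, scale_bits=8):
    """Storage per parameter, optionally amortizing one shared scale per block."""
    scale_overhead_bits = scale_bits / block_size if block_size else 0.0
    return (bits + scale_overhead_bits) / 8.0


def max_params_billions(memory_gb, bits, block_size=None):
    """Weights-only capacity, in billions of parameters.

    1 GB = 1e9 bytes, so GB divided by bytes-per-parameter gives billions."""
    return memory_gb / bytes_per_param(bits, block_size)


DGX_B200_MEMORY_GB = 1440

for label, bits, block in [
    ("FP16", 16, None),
    ("FP8", 8, None),
    ("FP4", 4, None),
    ("FP4 + 8-bit scale per 32", 4, 32),
]:
    capacity = max_params_billions(DGX_B200_MEMORY_GB, bits, block)
    print(f"{label:>26}: {capacity:,.0f} billion parameters")
```

Going from FP8 to FP4 doubles the weights-only capacity from 1,440 to 2,880 billion parameters; the assumed per-block scales trim that to roughly 2,711 billion.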

Foundation models including the Isaac GR00T family exemplify the capabilities enabled through such computational infrastructure. These vision-language-action models combine multiple neural network subsystems enabling simultaneous processing of visual input, natural language instructions, and motor control outputs.

The GR00T architecture incorporates a dual-system cognitive structure inspired by principles of human cognition: a rapid reflexive system enabling quick reactive responses to immediate environmental stimuli, and a deliberative system supporting complex reasoning for multi-step task planning and adaptation to novel scenarios.

According to NVIDIA, this cognitive architecture lets humanoid robots running GR00T models outperform alternative approaches across diverse manipulation and locomotion tasks.
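
The division of labor between the two systems can be sketched as a dual-rate control loop: a slow deliberative step refreshes the plan occasionally, while a fast reflexive step reacts on every tick using the latest plan. This is a toy illustration under stated assumptions; the lookup-table "planner", the proportional controller, and the rates are hypothetical stand-ins, not GR00T's learned models or interfaces.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    subgoal: str
    target: float  # desired 1-D position handed to the fast controller


# Hypothetical lookup table standing in for a slow vision-language reasoner.
SUBGOALS = {"pick up the cup": ("reach cup", 1.0)}


def deliberative_system(instruction, observation):
    """Slow 'System 2' stand-in: maps an instruction to a subgoal.

    A real model would also reason over the observation; this toy ignores it."""
    subgoal, target = SUBGOALS[instruction]
    return Plan(subgoal, target)


def reflexive_system(observation, plan, gain=0.5):
    """Fast 'System 1' stand-in: proportional control toward the plan's target."""
    return gain * (plan.target - observation)


def run_episode(instruction, steps=20, planner_period=10):
    """Run the fast loop every tick and re-plan every `planner_period` ticks."""
    position, plan, planner_calls = 0.0, None, 0
    for step in range(steps):
        if step % planner_period == 0:
            plan = deliberative_system(instruction, position)
            planner_calls += 1
        position += reflexive_system(position, plan)
    return position, planner_calls


final_position, calls = run_episode("pick up the cup")
print(f"final position {final_position:.4f} after {calls} planner calls")
```

The expensive planner runs only twice in 20 ticks, while the cheap controller closes the loop every tick; the same split lets a large reasoning model and a fast action model coexist within one control budget.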

Developing such models requires training datasets of unprecedented scale and diversity. Collecting data through real-world robotic demonstrations quickly runs into practical limits: human teleoperators can produce perhaps thousands of trajectories over months of effort, sensor wear gradually degrades data quality, and real-world experimentation introduces safety concerns and operating costs.

The NVIDIA Cosmos ecosystem addresses this constraint through algorithmic generation of photorealistic synthetic training data.

Cosmos Transfer models ingest structured representations, including depth maps, segmentation masks, pose estimation data, and trajectory specifications, and generate corresponding photorealistic video sequences suitable for training perception models.

Generating this synthetic data integrates several neural network components: conditional generative models for image synthesis, physics-aware deformation models for object motion, and samplers that preserve diversity and avoid mode collapse, in which a generator repeatedly produces a narrow set of outputs.

The efficiency gains from synthetic data generation are striking in quantitative terms. NVIDIA reports generating 780,000 synthetic trajectories, equivalent to roughly nine months of continuous human demonstration, in 11 hours using its Isaac GR00T blueprint for synthetic manipulation motion generation.

Combined with a smaller quantity of real-world data, this synthetic data improved model performance by 40 percent compared with training on real data alone.
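
The reported generation figures imply the throughput computed below. The sketch assumes that "nine months" means nine 30-day months of round-the-clock demonstration; that interpretation is an assumption, not a figure from the source.

```python
SYNTHETIC_TRAJECTORIES = 780_000
GENERATION_HOURS = 11
# Assumption: nine 30-day months of continuous, 24-hour demonstration.
HUMAN_EQUIVALENT_HOURS = 9 * 30 * 24

trajectories_per_hour = SYNTHETIC_TRAJECTORIES / GENERATION_HOURS
speedup = HUMAN_EQUIVALENT_HOURS / GENERATION_HOURS

print(f"{trajectories_per_hour:,.0f} trajectories per hour")
print(f"about {speedup:.0f}x faster than real-time demonstration")
```

Under that assumption, generation ran roughly 590 times faster than real-time human demonstration.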

This efficiency represents not merely engineering optimization but fundamental reconfiguration of feasible training methodologies: whereas previous approaches treated real-world data as the primary training resource supplemented sparingly by simulation, contemporary approaches appropriately weight synthetic data contributions, recognizing their methodological advantages in controllability and scalability.
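
One simple way to control how much each source contributes is to draw every training example from the synthetic pool with a fixed probability, independent of pool sizes. The generator below is a hypothetical sketch of that idea; the 80 percent synthetic share and all names are illustrative assumptions, not NVIDIA's training recipe.

```python
import random


def mixed_batches(real, synthetic, synthetic_fraction, batch_size, seed=0):
    """Yield endless batches in which each sample comes from `synthetic`
    with probability `synthetic_fraction`, otherwise from `real`."""
    rng = random.Random(seed)
    while True:
        batch = []
        for _ in range(batch_size):
            pool = synthetic if rng.random() < synthetic_fraction else real
            batch.append(rng.choice(pool))
        yield batch


# Hypothetical datasets: a small real set and a much larger synthetic set.
real_data = [("real", i) for i in range(100)]
synthetic_data = [("synthetic", i) for i in range(10_000)]

batches = mixed_batches(real_data, synthetic_data,
                        synthetic_fraction=0.8, batch_size=64)
first_batch = next(batches)
share = sum(source == "synthetic" for source, _ in first_batch) / len(first_batch)
print(f"synthetic share in first batch: {share:.2f}")
```

Because the mix is set by `synthetic_fraction` rather than by dataset sizes, the 100-to-1 imbalance between the pools does not drown out the real trajectories; each real example is simply revisited more often.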

Simulation, the second computational phase, enables rigorous policy validation and domain randomization experiments before real-world deployment. NVIDIA Omniverse provides collaborative environments for building photorealistic digital twins with physics-accurate object interactions, sensor simulation, and configurable environmental parameters.

GPU-accelerated physics simulation through NVIDIA PhysX allows thousands of robotic agents to be simulated in parallel during policy learning, rather than running episodes one after another as on traditional systems.
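
The speedup comes from stepping many environments with a single batched call instead of one episode at a time. The toy class below sketches that interface for 1-D point masses stored as flat arrays; it is an assumption-laden illustration, not the PhysX or Isaac Lab API, and its Python loop stands in for what would be one data-parallel GPU kernel.

```python
class BatchedPointMassSim:
    """Toy stand-in for GPU-batched physics: N independent 1-D point masses
    stored as flat arrays (structure of arrays) and advanced by one call."""

    def __init__(self, masses, dt=0.01):
        self.masses = list(masses)
        self.dt = dt
        self.positions = [0.0] * len(self.masses)
        self.velocities = [0.0] * len(self.masses)

    def step(self, forces):
        """Advance every environment one timestep (semi-implicit Euler).

        On a GPU this loop body would run as one data-parallel kernel with a
        thread per environment; plain Python stands in for it here."""
        for i, (force, mass) in enumerate(zip(forces, self.masses)):
            self.velocities[i] += force / mass * self.dt
            self.positions[i] += self.velocities[i] * self.dt
        return self.positions


num_envs = 4096
# Each environment gets a slightly different mass, as in a randomized batch.
sim = BatchedPointMassSim(masses=[1.0 + 0.001 * i for i in range(num_envs)])
for _ in range(100):
    sim.step([1.0] * num_envs)  # one action (force) per environment per step
print(f"{num_envs} environments stepped 100 times")
```

Keeping state in flat per-field arrays is what makes the batched step map naturally onto GPU threads: every environment runs the same update on its own slot.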

Together, realistic rendering, accurate physics, and faithful sensor simulation narrow the difference between simulated and real inputs, so policies trained in simulation transfer substantially better to real-world settings.

The persistent sim-to-real gap, the performance degradation observed when policies learned in simulation are transferred to physical hardware, remains one of the most significant constraints on deployment.

Sources of this gap include imperfect actuator models, sensor noise incompletely represented in simulation, contact dynamics involving friction and deformation inadequately captured by rigid-body physics, and environmental disturbances unmodeled in the training environment.

NVIDIA's approach incorporates domain randomization, deliberately introducing parameter variation across training episodes so learned policies develop robustness to modeling inaccuracies.

However, randomization ranges must balance breadth, which supplies enough variation to improve real-world transfer, against fidelity to the actual hardware's capabilities; striking this balance requires domain expertise and iterative experimentation.
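
A minimal sketch of this balance: each episode draws physics parameters uniformly around nominal values, then clips them to limits the real hardware can plausibly exhibit. The parameter names, nominal values, widths, and limits are all hypothetical; in practice they would come from system identification and iterative experimentation.

```python
import random

# Hypothetical nominal parameters for one robot.
NOMINAL = {"link_mass_kg": 2.0, "friction": 0.8, "motor_latency_s": 0.010}
# Breadth: half-width of the sampling range as a fraction of nominal.
RELATIVE_WIDTH = {"link_mass_kg": 0.2, "friction": 0.5, "motor_latency_s": 1.0}
# Specificity: hard limits the physical hardware can plausibly exhibit.
HARD_LIMITS = {
    "link_mass_kg": (1.8, 2.3),
    "friction": (0.3, 1.2),
    "motor_latency_s": (0.0, 0.02),
}


def sample_episode_params(rng):
    """Draw one episode's physics parameters: uniform within +/- width of
    nominal, then clipped to the hardware-plausible limits."""
    params = {}
    for name, nominal in NOMINAL.items():
        half = RELATIVE_WIDTH[name] * nominal
        value = rng.uniform(nominal - half, nominal + half)
        lo, hi = HARD_LIMITS[name]
        params[name] = min(max(value, lo), hi)
    return params


rng = random.Random(42)
episodes = [sample_episode_params(rng) for _ in range(1000)]
print(episodes[0])
```

Clipping keeps every sample physically plausible but piles probability onto the limits (here about a quarter of the mass samples land exactly on the 1.8 kg lower limit); narrowing the sampling range instead avoids that artifact at the cost of breadth.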

The deployment phase leverages the Jetson AGX Thor, an edge-deployed accelerator specifically engineered for real-time robotic inference.

A key architectural choice behind this combination of high performance and low power consumption is the memory subsystem: unified LPDDR5X memory shared by the CPU and GPU, rather than the discrete graphics memory of conventional GPU architectures.

This approach gives up peak memory bandwidth relative to datacenter-class solutions, but because the CPU and GPU read the same physical memory, no copies between separate memory pools are needed, which substantially lowers latency for the small-batch inference typical of real-time robotic control.
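
A back-of-the-envelope latency model shows why avoiding copies matters at small batch sizes. The function below adds an optional host-to-device copy to a fixed compute time; the frame sizes, link bandwidth, and compute time are hypothetical values chosen for illustration, not Jetson AGX Thor measurements, and the model ignores any overlap of copies with compute.

```python
def inference_latency_ms(input_mb, compute_ms, copy_bandwidth_gb_s=None):
    """Per-request latency = optional input copy + compute.

    copy_bandwidth_gb_s=None models unified memory: the GPU reads the
    buffer the CPU wrote in place, so no copy is needed. Otherwise the
    input crosses a link first; 1 MB at 1 GB/s takes 1 ms."""
    copy_ms = 0.0 if copy_bandwidth_gb_s is None else input_mb / copy_bandwidth_gb_s
    return copy_ms + compute_ms


# Hypothetical workload: four 1080p RGB camera frames per control step.
frames_mb = 4 * 1920 * 1080 * 3 / 1e6
compute_ms = 5.0  # hypothetical model compute time at batch size 1

unified = inference_latency_ms(frames_mb, compute_ms)
discrete = inference_latency_ms(frames_mb, compute_ms, copy_bandwidth_gb_s=25.0)
print(f"unified: {unified:.2f} ms, discrete with copy: {discrete:.2f} ms")
```

With these assumed numbers the copy adds about 1 ms, a small share of a large batch's runtime but a meaningful fraction of a tight per-step control budget.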

Its 2,070 teraflops of FP4 compute allow vision-language models, motion planning algorithms, and low-level control loops to run concurrently within the deterministic latency bounds required for stable robotic operation.
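
Whether a given mix of workloads fits its latency constraints can be checked, in a deliberately simplified form, with the classic earliest-deadline-first (EDF) utilization test: independent, preemptible periodic tasks whose deadlines equal their periods are schedulable on one processor exactly when total utilization does not exceed 1. The task periods and execution times below are hypothetical, and a real deployment spreads work across CPU cores and the GPU, so this single-resource model is only a first-order budget check.

```python
from dataclasses import dataclass


@dataclass
class PeriodicTask:
    name: str
    period_ms: float  # how often the task must run (also its deadline)
    wcet_ms: float    # worst-case execution time per run


def utilization(tasks):
    """Fraction of the processor the task set consumes."""
    return sum(t.wcet_ms / t.period_ms for t in tasks)


def fits_edf(tasks):
    """EDF test for independent, preemptible periodic tasks with implicit
    deadlines on one processor: schedulable iff utilization <= 1."""
    return utilization(tasks) <= 1.0


# Hypothetical workloads sharing one compute budget.
tasks = [
    PeriodicTask("low-level joint control", period_ms=1.0, wcet_ms=0.2),
    PeriodicTask("motion planning", period_ms=20.0, wcet_ms=6.0),
    PeriodicTask("vision-language model", period_ms=200.0, wcet_ms=60.0),
]
print(f"utilization = {utilization(tasks):.2f}, schedulable: {fits_edf(tasks)}")
```

The hypothetical set uses 80 percent of the budget; adding another task that needs 3 ms every 10 ms would push utilization to 1.1 and break the guarantee.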

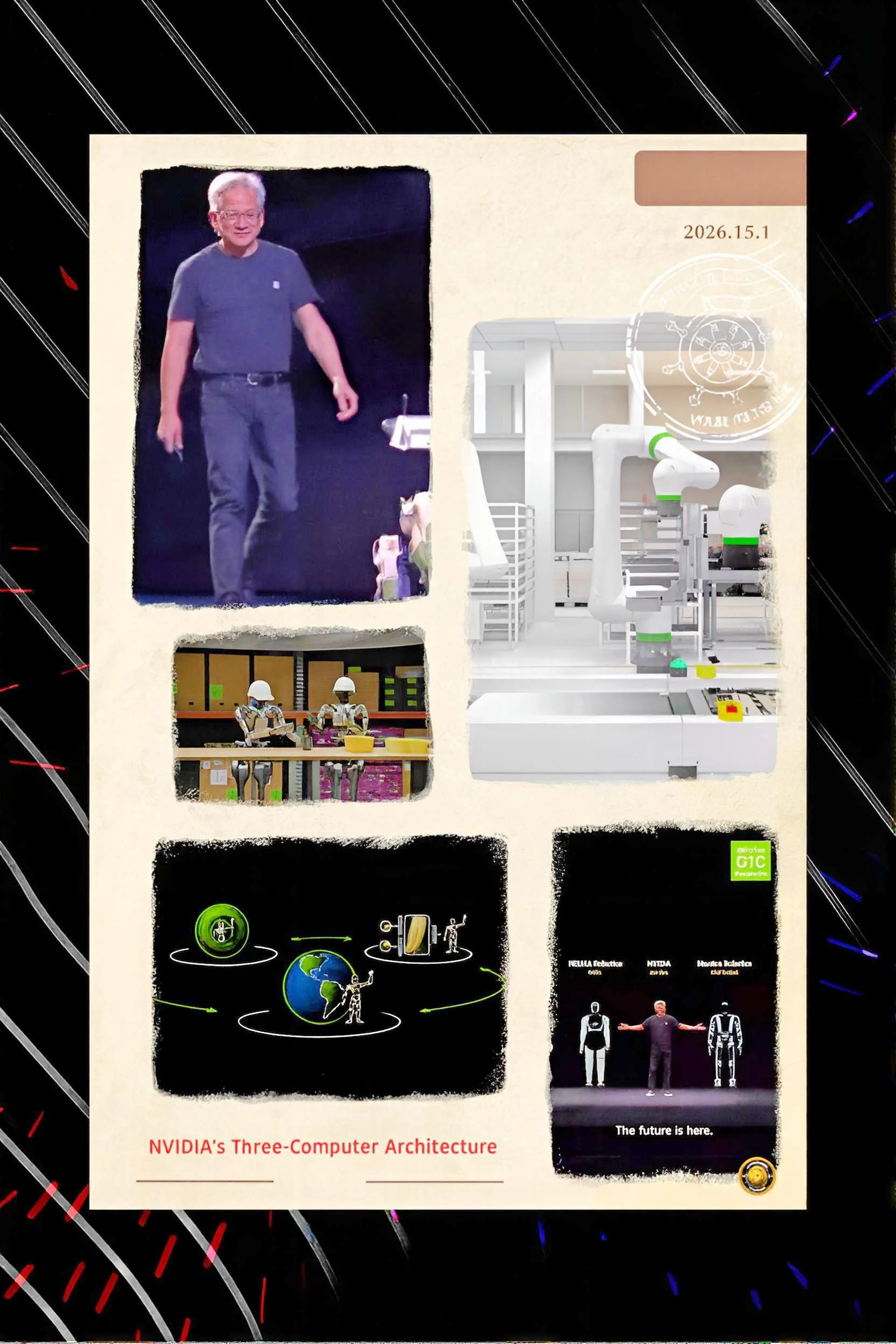

Industrial deployment patterns demonstrate the maturation of the integrated ecosystem. Humanoid robots from Boston Dynamics, 1X Technologies, Apptronik, and Fourier Intelligence have adopted Jetson AGX Thor as their primary onboard compute platform, enabling autonomous operation in manufacturing facilities, logistics warehouses, and research environments.

Hexagon's AEON humanoid robot is a particularly sophisticated deployment, autonomously inspecting components and assets in automotive, aerospace, and manufacturing settings.

The robot's autonomous operation depends upon integration of all three computational phases: foundation model development leveraging training supercomputers, policy validation within simulated industrial environments, and real-time inference executed on edge hardware.

Looking forward, technological development focuses upon expanding foundation model sophistication and breadth. Current GR00T models specialize in humanoid morphologies; emerging priorities involve developing equivalent foundation models for quadrupedal locomotion, aerial platforms, and specialized manipulators.

The NVIDIA Newton physics engine, developed collaboratively with Google DeepMind and Disney Research, targets improved simulation fidelity for complex dynamics including deformable objects and contact-rich scenarios, potentially enabling more sophisticated domain randomization approaches.

International expansion through infrastructure partnerships with Deutsche Telekom and others enables geographic distribution of training and simulation capabilities, supporting regional robotics ecosystems across distinct markets.

The trajectory toward increasingly capable autonomous systems appears well-established, sustained by substantial capital investment, growing ecosystem partnerships, and demonstrated commercial viability.

NVIDIA's three-computer architecture provides fundamental infrastructure enabling this progression while simultaneously establishing technological moats potentially constraining competitive dynamics.

Organizations leveraging these capabilities require careful attention to simulation fidelity, domain randomization effectiveness, and validation protocols before deployment into safety-critical applications.

The convergence of training supercomputers, sophisticated simulation, and specialized edge hardware creates a genuine inflection point in autonomous system capabilities, with implications across industrial and commercial domains worldwide.