The Embodiment of Intelligence: How Physical AI Is Reshaping the Material Economy

Summary

The contemporary discourse surrounding artificial intelligence has been dominated by large language models and generative systems that operate exclusively within digital domains. Yet a more consequential transformation is underway, one that extends cognitive capabilities into the physical realm through the integration of sophisticated algorithms with mechanized systems.

Physical AI, or embodied intelligence, represents this paradigm shift: machines acquire the capacity not merely to analyze and predict, but to perceive, manipulate, and navigate three-dimensional space autonomously.

This development transcends incremental improvement in robotics; it constitutes a fundamental reconfiguration of how intelligence interfaces with material reality, promising to recalibrate industrial productivity, urban infrastructure, and human-machine collaboration across the global economy.

At its architectural core, Physical AI synthesizes multiple technological strata into a cohesive operational framework. Perception layers process heterogeneous sensor inputs—camera arrays, LiDAR point clouds, inertial measurement units, and acoustic signals—to construct real-time environmental models that capture geometric relationships, object identities, and dynamic states.

These representations feed into simulation engines that model physical dynamics, predicting how actions will propagate through the world according to the laws of mechanics. Decision-making algorithms evaluate these simulated futures against optimization objectives, selecting the action sequences that best accomplish the task while respecting safety constraints and resource limits.

Control systems then execute these decisions through actuators, motors, and manipulators, closing the loop between cognition and physical effect. This architecture distinguishes Physical AI from conventional automation, which follows pre-programmed routines, by enabling adaptive responses to unstructured conditions and novel situations.
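The perceive, simulate, decide, and act loop described above can be made concrete with a deliberately small sketch. It is not drawn from any particular robotics stack: the one-dimensional cart, the `WALL` obstacle, the three-value action set, the cost weights, and every function name are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List

DT = 0.1                              # control period in seconds (assumed)
WALL = 10.0                           # hypothetical obstacle position on a 1-D track
CANDIDATE_ACCELS = (-1.0, 0.0, 1.0)   # discrete action set in m/s^2 (assumed)


@dataclass
class State:
    position: float  # metres
    velocity: float  # metres per second


def perceive(true_state: State) -> State:
    """Perception: a noiseless pass-through standing in for real sensor processing."""
    return State(true_state.position, true_state.velocity)


def simulate(state: State, accel: float, steps: int = 10) -> List[State]:
    """Simulation: roll simple kinematics forward and return the predicted trajectory."""
    pos, vel = state.position, state.velocity
    trajectory = []
    for _ in range(steps):
        vel += accel * DT
        pos += vel * DT
        trajectory.append(State(pos, vel))
    return trajectory


def decide(state: State, target: float) -> float:
    """Decision: pick the action whose predicted end state is closest to the
    target, skipping any action predicted to reach the wall."""
    # If every candidate is rejected, fall back to the strongest braking action.
    best_accel, best_cost = min(CANDIDATE_ACCELS), float("inf")
    for accel in CANDIDATE_ACCELS:
        trajectory = simulate(state, accel)
        if any(s.position >= WALL for s in trajectory):
            continue  # safety constraint violated in simulation
        end = trajectory[-1]
        cost = abs(end.position - target) + 0.1 * abs(end.velocity)
        if cost < best_cost:
            best_accel, best_cost = accel, cost
    return best_accel


def act(true_state: State, accel: float) -> State:
    """Control: apply the chosen acceleration for one control period."""
    return simulate(true_state, accel, steps=1)[0]


def run(target: float = 8.0, ticks: int = 200) -> State:
    """Close the loop: perceive, decide on simulated futures, then act."""
    state = State(0.0, 0.0)
    for _ in range(ticks):
        belief = perceive(state)
        accel = decide(belief, target)
        state = act(state, accel)
    return state
```

Calling `run()` steps the loop for 200 control periods. Real systems replace each function with far richer components, but the division of labor is the same: the decision is made on predicted futures, not on a fixed script.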

The economic implications of this technological convergence are already materializing in industrial contexts.

Manufacturing facilities are evolving from collections of isolated machines into integrated cyber-physical systems where digital twins orchestrate production flows. These virtual replicas, continuously synchronized with operational data, enable engineers to simulate process modifications, predict bottlenecks, and optimize configurations without disrupting physical operations.
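A toy example shows what simulating a process modification on a digital twin can look like. The sketch below assumes a strictly serial line in steady state with no buffers, so throughput is set by the slowest station; the `LineTwin` class, its methods, and the station names are hypothetical and do not correspond to any vendor's product.

```python
from typing import Dict


class LineTwin:
    """Virtual replica of a serial production line (illustrative model only)."""

    def __init__(self, cycle_times_s: Dict[str, float]):
        # Seconds needed per unit at each station; copied so edits stay local.
        self.cycle_times_s = dict(cycle_times_s)

    def sync(self, station: str, measured_cycle_time_s: float) -> None:
        """Update the twin from operational data reported by the physical line."""
        self.cycle_times_s[station] = measured_cycle_time_s

    def bottleneck(self) -> str:
        """The slowest station limits the whole line under the serial assumption."""
        return max(self.cycle_times_s, key=self.cycle_times_s.get)

    def throughput_per_hour(self) -> float:
        return 3600.0 / self.cycle_times_s[self.bottleneck()]

    def what_if(self, station: str, new_cycle_time_s: float) -> "LineTwin":
        """Evaluate a modification on a copy, leaving the live twin untouched."""
        candidate = LineTwin(self.cycle_times_s)
        candidate.sync(station, new_cycle_time_s)
        return candidate


twin = LineTwin({"weld": 40.0, "paint": 60.0, "inspect": 45.0})
faster_paint = twin.what_if("paint", 30.0)
```

Here `twin.bottleneck()` is `"paint"` at 60 units per hour. In the `faster_paint` scenario the bottleneck moves to `"inspect"` and throughput rises to 80 units per hour, which shows a constraint shifting without any change to the physical line.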

Some automotive manufacturers have deployed autonomous vehicle technology within factory environments, allowing newly assembled cars to drive themselves from production lines through testing protocols to finishing areas. This removes the need for staff to shuttle vehicles and makes throughput more predictable.

Warehousing operations have integrated AI-powered robotic arms with adaptive grasping capabilities that handle variable package dimensions and materials, reducing damage rates and increasing sorting speed. These implementations demonstrate that Physical AI delivers measurable returns not only by replacing human labor, but by enabling ways of operating that were previously economically infeasible.

The trajectory of adoption follows a predictable pattern of technological diffusion, though accelerated by contemporary computational abundance. Initial deployments concentrate in controlled environments where variability is constrained and safety risks are manageable—industrial floors, logistics hubs, and medical facilities with structured protocols. As systems accumulate operational experience and training datasets expand, capabilities generalize to more complex scenarios.

Edge computing architectures facilitate this progression by processing sensor data locally, which cuts latency to the millisecond scale that real-time control requires and keeps operational data on-site, preserving data sovereignty.
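To see why millisecond-scale latency matters, consider how far a mobile robot travels between sensing its surroundings and acting on the result. The helper below is a back-of-the-envelope sketch; the function name, the speed, and both latency figures are illustrative assumptions, not measurements.

```python
def distance_during_latency(speed_m_s: float, latency_ms: float) -> float:
    """Distance in metres covered while a control decision is still in flight."""
    return speed_m_s * latency_ms / 1000.0


# Hypothetical robot moving at 2 m/s under two assumed latencies.
remote_round_trip_m = distance_during_latency(2.0, 100.0)  # assumed remote round trip
local_inference_m = distance_during_latency(2.0, 5.0)      # assumed on-device inference
```

Under these assumptions the robot covers 0.2 m before a remotely computed command arrives, against 0.01 m with local inference.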

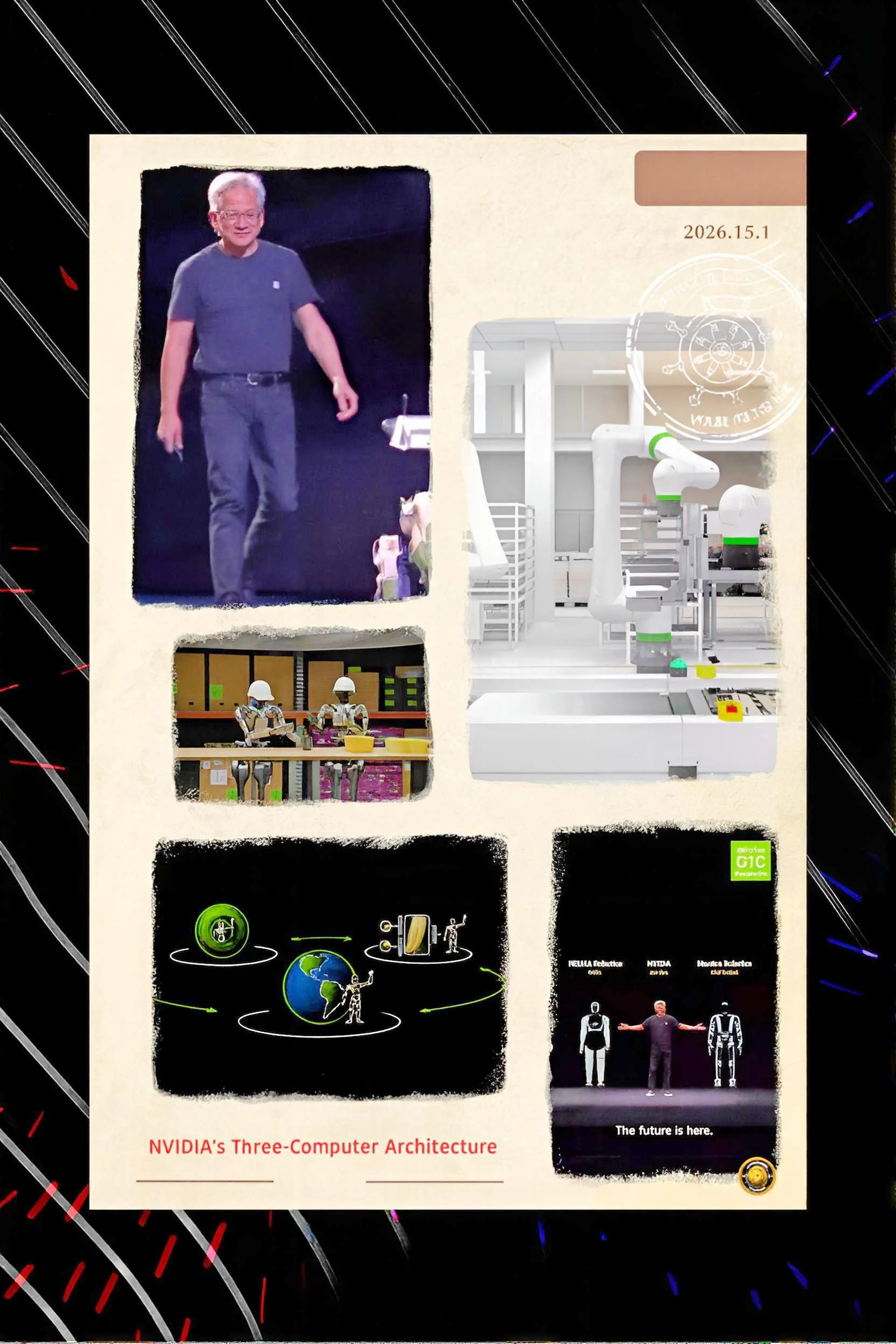

Simulation platforms like NVIDIA Omniverse generate synthetic training data at scale, allowing models to learn physical dynamics without costly real-world experimentation.

This combination of edge inference and simulation-driven training creates a feedback loop wherein deployed systems continuously improve through operational experience while new capabilities are validated in virtual environments before physical deployment.
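The idea of learning physical dynamics from synthetic data can be illustrated without any simulation platform. The sketch below does not use the Omniverse API: it randomizes two physical parameters and labels each sample with a closed-form result, the stopping distance of a sliding block under Coulomb friction, d = v² / (2μg). The parameter ranges and function names are assumptions made for the example.

```python
import random
from typing import List, Tuple

G = 9.81  # gravitational acceleration in m/s^2

Sample = Tuple[Tuple[float, float], float]  # ((speed, friction), stopping distance)


def stopping_distance(speed_m_s: float, friction: float) -> float:
    """Distance a sliding block travels before friction brings it to rest."""
    return speed_m_s ** 2 / (2.0 * friction * G)


def generate_samples(n: int, seed: int = 0) -> List[Sample]:
    """Draw randomized physical parameters and label them with simulated outcomes."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    samples = []
    for _ in range(n):
        speed = rng.uniform(0.5, 3.0)      # initial speed range (assumed)
        friction = rng.uniform(0.2, 0.9)   # friction coefficient range (assumed)
        samples.append(((speed, friction), stopping_distance(speed, friction)))
    return samples


dataset = generate_samples(1000)
```

Varying the parameters across their plausible ranges, often called domain randomization, is one common way to help a model trained on such data tolerate the mismatch between simulation and reality.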

Yet the path toward ubiquitous Physical AI confronts substantial challenges that extend beyond technical hurdles. Capital requirements present formidable barriers, as enterprises must invest in robotic hardware, sensor networks, and edge computing infrastructure—expenditures that dwarf software licensing costs and demand multi-year amortization cycles.

Data integration complexities arise from the heterogeneity of sensor streams and the need to fuse information across temporal and spatial scales, requiring sophisticated architectures that many organizations lack the expertise to implement.
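One of the simplest building blocks of sensor fusion is inverse-variance weighting, which combines two independent estimates of the same quantity so that the more precise sensor counts for more. The sketch below assumes independent, unbiased, Gaussian-like noise with known variances; the sensor readings are made-up values for illustration.

```python
from typing import Tuple


def fuse(x1: float, var1: float, x2: float, var2: float) -> Tuple[float, float]:
    """Fuse two independent measurements by inverse-variance weighting.

    Returns the fused estimate and its variance.
    """
    w1, w2 = 1.0 / var1, 1.0 / var2
    fused = (w1 * x1 + w2 * x2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var


# Hypothetical range readings for the same obstacle, in metres.
lidar_range, lidar_var = 2.00, 0.01    # precise sensor (assumed variance)
camera_range, camera_var = 2.30, 0.09  # noisier sensor (assumed variance)

estimate, variance = fuse(lidar_range, lidar_var, camera_range, camera_var)
```

With these numbers the fused estimate is about 2.03 m, close to the LiDAR reading, and the fused variance of about 0.009 is lower than that of either sensor alone. Production systems typically extend this idea with time alignment and filtering across successive measurements.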

Workforce transformation poses cultural difficulties as well as technical ones; employees must adapt to collaborative relationships with autonomous agents, developing supervisory skills and learning to interpret machine decisions without surrendering professional judgment.

Safety standards remain embryonic, particularly for mobile autonomous systems operating alongside humans. Current regulations, designed for stationary industrial equipment, inadequately address scenarios where robots weighing hundreds of pounds navigate shared spaces with unprotected workers. Accountability frameworks are similarly underdeveloped, leaving unresolved questions of liability when autonomous systems cause injury or property damage.

The psychological dimensions of human-robot interaction introduce additional complexity. Humans exhibit a tendency to anthropomorphize machines with humanoid forms, projecting expectations of emotional intelligence and conversational ability onto platforms designed primarily for mechanical tasks. This cognitive bias can reduce safety vigilance and create disappointment when robotic capabilities fail to match imagined potential.

Conversely, robots that operate with superhuman speed and precision may induce anxiety among workers who perceive their livelihoods as threatened. Designing effective human-machine collaboration requires attention not only to technical performance but to the socio-emotional context of deployment, ensuring that automation augments human capabilities rather than simply displacing labor.

Looking forward, the evolution of Physical AI will likely proceed along two complementary paths. In the near term, specialized robotic platforms with domain-specific process knowledge will dominate industrial applications, delivering greater reliability and precision than general-purpose humanoid forms. These systems will integrate tightly with digital twin infrastructure, creating AI-native factories where virtual models continuously optimize physical operations through closed-loop learning.

Over longer horizons, foundational world models trained through self-supervised learning of physical causality will reduce dependency on manually engineered training data, enabling more generalized autonomous capabilities.

This progression will necessitate parallel development of governance frameworks that address safety certification, liability allocation, and workforce transition support.

The organizations that thrive will be those that treat Physical AI not as a drop-in technology replacement but as an opportunity to fundamentally reimagine operational models, creating cyber-physical systems where human creativity and machine precision amplify each other.

The transformation extends beyond factory walls into urban infrastructure, healthcare delivery, and domestic environments. Autonomous drones inspect bridges and pipelines, reducing infrastructure maintenance costs while improving public safety.

Surgical robots assist with procedures requiring precision beyond human capability, while rehabilitation robots adapt therapy protocols to individual patient progress. Smart city systems optimize energy distribution, traffic flow, and waste management through distributed sensor networks and autonomous decision-making.

In each domain, Physical AI enables new capabilities rather than merely automating existing processes, suggesting that its ultimate impact will be measured not in jobs displaced but in previously unimaginable services made economically viable.

The critical insight is that Physical AI does not simply add intelligence to machines; it creates a new category of socio-technical system where the boundaries between digital and physical, human and machine, become permeable.

Success requires more than technical prowess—it demands organizational redesign, workforce development, and thoughtful governance. As these embodied intelligences proliferate, society must establish norms and institutions that ensure they serve human flourishing rather than simply optimizing narrow metrics of productivity.

The question is not whether Physical AI will transform the material economy, but whether we will shape that transformation toward equitable and sustainable ends.