The Great AI Divergence: How Safety Became OpenAI's Blind Spot and Anthropic's Competitive Weapon

Executive Summary

Divergent Philosophies, Convergent Markets: OpenAI and Anthropic's Competing Visions for Artificial Intelligence Advancement

The global artificial intelligence landscape has undergone a profound transformation since 2021, marked by the emergence of two fundamentally different organisational philosophies, embodied by OpenAI and Anthropic.

Whilst both entities aspire to develop artificial general intelligence, their approaches to achieving it differ substantially, diverging along four axes: pace of development, safety prioritisation, governance structure, and customer targeting.

OpenAI, founded in 2015, champions rapid innovation coupled with integrated safety mechanisms, pursuing mass-market adoption through consumer subscriptions and API access.

Conversely, Anthropic, established in 2021 by former OpenAI researchers including Dario and Daniela Amodei, prioritises rigorous safety research before scaling, targeting enterprise customers through carefully engineered governance structures and ethics-embedded model architectures.

This fundamental philosophical fork has produced not merely competitive differences in product capability but a distinct market segmentation, in which OpenAI commands the mass consumer base whilst Anthropic consolidates enterprise trust.

By January 2026, ChatGPT maintained 68 percent market share with 800 million weekly active users, whilst Claude captured approximately 2 to 3.5 percent market share with 30 million monthly active users.

However, Anthropic's revenue trajectory and enterprise concentration, with 80 percent of its annual recurring revenue sourced from approximately 300,000 business customers, suggest that prioritising safety and reliability over speed and scale can be commercially viable.

This FAF analysis examines the historical origins of this divergence, the contemporary competitive positioning of both organisations, and the implications for artificial intelligence governance, corporate structure, and the future trajectory of transformative AI systems.

Introduction

When Safety Champions and Speed Advocates Build Competing Futures

The trajectory of artificial intelligence development has historically oscillated between two competing imperatives: the acceleration of capability advancement and the mitigation of associated risks. This oscillation has manifested most dramatically in the strategic choices made by two preeminent organisations: OpenAI and Anthropic.

The decision by Dario Amodei, then Vice President of Research at OpenAI, to depart in December 2020 and establish Anthropic with 14 additional colleagues represents far more than a typical personnel transition within the technology sector. Rather, it constitutes a fundamental philosophical schism regarding the appropriate pace, safety prioritisation, and governance structures for developing increasingly powerful artificial intelligence systems.

The emergence of this schism has produced consequences extending beyond corporate competition into the domains of regulatory policy, enterprise adoption patterns, and societal expectations concerning algorithmic governance.

The stakes underlying this divergence are not merely commercial but existential in nature. The safe development of artificial general intelligence (systems exhibiting human-level reasoning capacity across diverse domains) depends on resolving fundamental questions concerning alignment, interpretability, and controllability.

Whether these challenges are best addressed through rapid iteration in production environments or through rigorous research before deployment has emerged as the central strategic question differentiating these organisations.

This analysis examines the philosophical foundations, contemporary manifestations, and future implications of this divergence.

Historical Origins and Foundational Philosophies

The Schism That Spawned Anthropic—Why a Safety Researcher Left OpenAI to Build a Different Kind of AI Company

The emergence of Anthropic was not a conventional start-up built to compete head-on with OpenAI's existing products. Rather, it originated from substantive disagreement concerning the appropriate trajectory of artificial intelligence development.

Dario Amodei, during his tenure as Vice President of Research at OpenAI, authored numerous memos articulating concerns that capabilities were scaling faster than safety research and alignment techniques. His contributions to OpenAI's research portfolio were substantial: he participated in the development of GPT-2 and GPT-3, helped pioneer Reinforcement Learning from Human Feedback (RLHF), and contributed to early alignment research on debate and iterated amplification, techniques designed to help humans supervise AI systems on tasks too complex to evaluate directly.

However, the influence Amodei had accumulated within OpenAI proved insufficient to redirect the organisation toward the safety-prioritising approach he advocated. By his own account, his frustration did not stem from opposition to commercialisation (he had participated directly in the commercial development of GPT-3) nor from objections to the Microsoft partnership. Rather, his departure reflected a conviction that building a new organisation around safety-first principles from inception would prove more productive than attempting reform from within a structure increasingly oriented toward rapid capability advancement and market penetration.

The founding structure of Anthropic reflected this safety-first philosophy with institutional rigour. Rather than adopting the capped-profit model OpenAI then employed, Anthropic structured itself as a Public Benefit Corporation (PBC), later supplemented by a Long-Term Benefit Trust (LTBT).

The PBC form obliges the board to balance shareholders' financial interests against a broader societal mission: ensuring that transformative artificial intelligence benefits humanity broadly rather than concentrating value among shareholders. The LTBT, an independent body of trustees, elects a growing share of the board's directors. This structural choice embedded ethical considerations into the organisational fabric itself, creating institutional mechanisms designed to resist prioritising short-term profitability over long-term safety.

OpenAI's founding philosophy, by contrast, centred on democratising access to artificial intelligence whilst maintaining sufficient safety guardrails to prevent misuse. Sam Altman, as chief executive, has illustrated this philosophy with an airplane analogy: like aircraft, artificial intelligence systems must be both capable and safe, with the two properties developed together rather than traded against each other. Safety, in this framework, is one component of a holistic system design rather than a prerequisite constraint on capability advancement.

This orientation produced a development philosophy characterised by iterative deployment, with safety mechanisms integrated throughout the product lifecycle rather than concentrated in pre-release phases.

Constitutional Artificial Intelligence and the Safety Architecture Divergence

Embedding Ethics Before Deployment—How Anthropic's Constitutional AI Challenges Industry Assumptions About Safety and Capability Trade-Offs

The most significant technical manifestation of these philosophical differences emerged through Anthropic's development of Constitutional Artificial Intelligence (CAI), a novel training methodology fundamentally distinct from conventional approaches employed within the industry.

Rather than relying exclusively upon Reinforcement Learning from Human Feedback (RLHF)—the approach popularised by OpenAI and adopted widely throughout the artificial intelligence sector—Constitutional AI embeds ethical principles directly into model training processes through synthetic data generation based upon explicit constitutional guidelines.

The constitutional framework draws ethical principles from diverse sources, including the Universal Declaration of Human Rights, Apple's Terms of Service, and Anthropic's own research into AI alignment.

These principles are translated into natural language rules specifying both permissible and impermissible content categories.

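The model then uses these rules to generate its own training data. In a supervised phase, it critiques and revises its responses against the principles, and the revised responses become fine-tuning examples. In a reinforcement learning phase, it judges which of two candidate responses better satisfies the principles, and these AI-generated preferences replace human harmlessness labels (a step Anthropic calls reinforcement learning from AI feedback, or RLAIF). Importantly, this approach trains safety behaviour without requiring humans to annotate harmful content, a significant efficiency improvement that also reduces human exposure to potentially disturbing training material.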
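The supervised critique-and-revision phase can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Anthropic's implementation: `Model` is any function from a prompt string to a completion, `toy_model` is a hypothetical stand-in that returns fixed tags so the data flow is visible, and the prompt wording is invented. The published method samples principles at random for each critique step rather than applying every principle in turn, as this sketch does.

```python
from typing import Callable, List, Tuple

# Assumed interface: a "model" is any function mapping a prompt to a completion.
Model = Callable[[str], str]


def critique_and_revise(model: Model, prompt: str, principles: List[str]) -> Tuple[str, str]:
    """Draft a response, then critique and rewrite it once per principle.

    Returns (initial draft, final revision).
    """
    initial = model(prompt)
    response = initial
    for principle in principles:
        # Ask the model to critique its own answer against one principle.
        critique = model(
            f"Critique this response using the principle: {principle}\n"
            f"Prompt: {prompt}\nResponse: {response}"
        )
        # Ask the model to rewrite the answer so that it addresses the critique.
        response = model(
            "Rewrite the response to address the critique.\n"
            f"Critique: {critique}\nResponse: {response}"
        )
    return initial, response


def build_sft_dataset(model: Model, prompts: List[str], principles: List[str]) -> List[Tuple[str, str]]:
    """Pair each prompt with its final revision; no human harm labels are used."""
    return [(p, critique_and_revise(model, p, principles)[1]) for p in prompts]


def toy_model(text: str) -> str:
    # Hypothetical stand-in for a language model, returning fixed tags.
    if text.startswith("Critique"):
        return "critique"
    if text.startswith("Rewrite"):
        return "revised"
    return "draft"
```

Calling `build_sft_dataset(toy_model, ["How do I pick a lock?"], ["Choose the less harmful response."])` yields `[("How do I pick a lock?", "revised")]`: only the final revision, paired with its prompt, is kept as fine-tuning data. In a full pipeline the reinforcement learning phase, driven by AI-generated preference labels, would follow.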

The empirical results of Constitutional AI training have produced what Anthropic terms a Pareto improvement: models trained via Constitutional RL demonstrate simultaneously greater helpfulness and greater harmlessness compared to models trained exclusively via RLHF. This apparent paradox—that adding safety constraints could enhance rather than diminish helpfulness—challenges the conventional industry assumption that safety and capability exist in zero-sum opposition.
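A Pareto improvement has a precise meaning: the new model must be at least as good on every objective and strictly better on at least one. The check below is a minimal sketch; the (helpfulness, harmlessness) scores are hypothetical placeholders chosen for illustration, not Anthropic's published results.

```python
from typing import Sequence


def pareto_improves(new: Sequence[float], old: Sequence[float]) -> bool:
    """True if `new` is at least as good as `old` on every objective
    and strictly better on at least one (higher scores are better)."""
    if len(new) != len(old):
        raise ValueError("score vectors must have the same length")
    at_least_as_good = all(n >= o for n, o in zip(new, old))
    strictly_better = any(n > o for n, o in zip(new, old))
    return at_least_as_good and strictly_better


# Hypothetical (helpfulness, harmlessness) scores, for illustration only.
rlhf_model = (0.70, 0.60)
cai_model = (0.72, 0.75)
trade_off = (0.80, 0.50)
print(pareto_improves(cai_model, rlhf_model))  # True
print(pareto_improves(trade_off, rlhf_model))  # False
```

The second case is the conventional trade-off the paragraph describes: more helpful but less harmless, and therefore not a Pareto improvement.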

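Constitutional Classifiers, a more recent extension of this framework, are input and output classifiers trained on synthetic data generated from a constitution that defines permitted and prohibited content. The output classifier scores a response token by token as it streams and can halt generation mid-response. In Anthropic's automated evaluations, they blocked more than 95 percent of adversarial jailbreak attempts.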
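The halt-mid-stream behaviour of a token-level output classifier can be sketched as follows. This assumes a classifier that scores the full text produced so far after each new token; `toy_harm_score` is a hypothetical keyword stub standing in for a trained model, and the threshold and refusal string are arbitrary illustrative choices, not Anthropic's.

```python
from typing import Callable, Iterable, List


def stream_with_guard(
    tokens: Iterable[str],
    harm_score: Callable[[str], float],
    threshold: float = 0.5,
    refusal: str = "[response withheld]",
) -> str:
    """Release tokens one at a time, re-scoring the accumulated text after
    each token and stopping as soon as the score reaches the threshold."""
    emitted: List[str] = []
    for token in tokens:
        candidate = "".join(emitted) + token
        if harm_score(candidate) >= threshold:
            # Halt mid-stream: the flagged token is never released.
            return "".join(emitted) + refusal
        emitted.append(token)
    return "".join(emitted)


def toy_harm_score(text: str) -> float:
    # Hypothetical stub for a trained classifier: flags a placeholder keyword.
    return 1.0 if "forbidden" in text.lower() else 0.0


print(stream_with_guard(["Water ", "boils at ", "100 C."], toy_harm_score))
# Water boils at 100 C.
print(stream_with_guard(["Safe text, ", "then FORBIDDEN", " content"], toy_harm_score))
# Safe text, [response withheld]
```

Scoring the accumulated prefix rather than each token in isolation lets the guard catch content that only becomes harmful across several tokens, at the cost of re-scoring the prefix at every step.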

OpenAI's approach to safety, whilst incorporating multiple mechanisms, emphasises deployment-time interventions and continuous monitoring rather than constitutional principles embedded in training.

This orientation reflects a distinct belief: that safety mechanisms must evolve alongside capability advancement through iterative interaction with real-world deployment scenarios.

The theoretical basis underlying this approach posits that anticipated risks prove less tractable than risks encountered through operational experience, rendering rapid iteration superior to pre-deployment comprehensive planning.

Current Competitive Positioning and Market Segmentation

Dominance by Division—ChatGPT Controls Consumers While Claude Captures Enterprise Trust

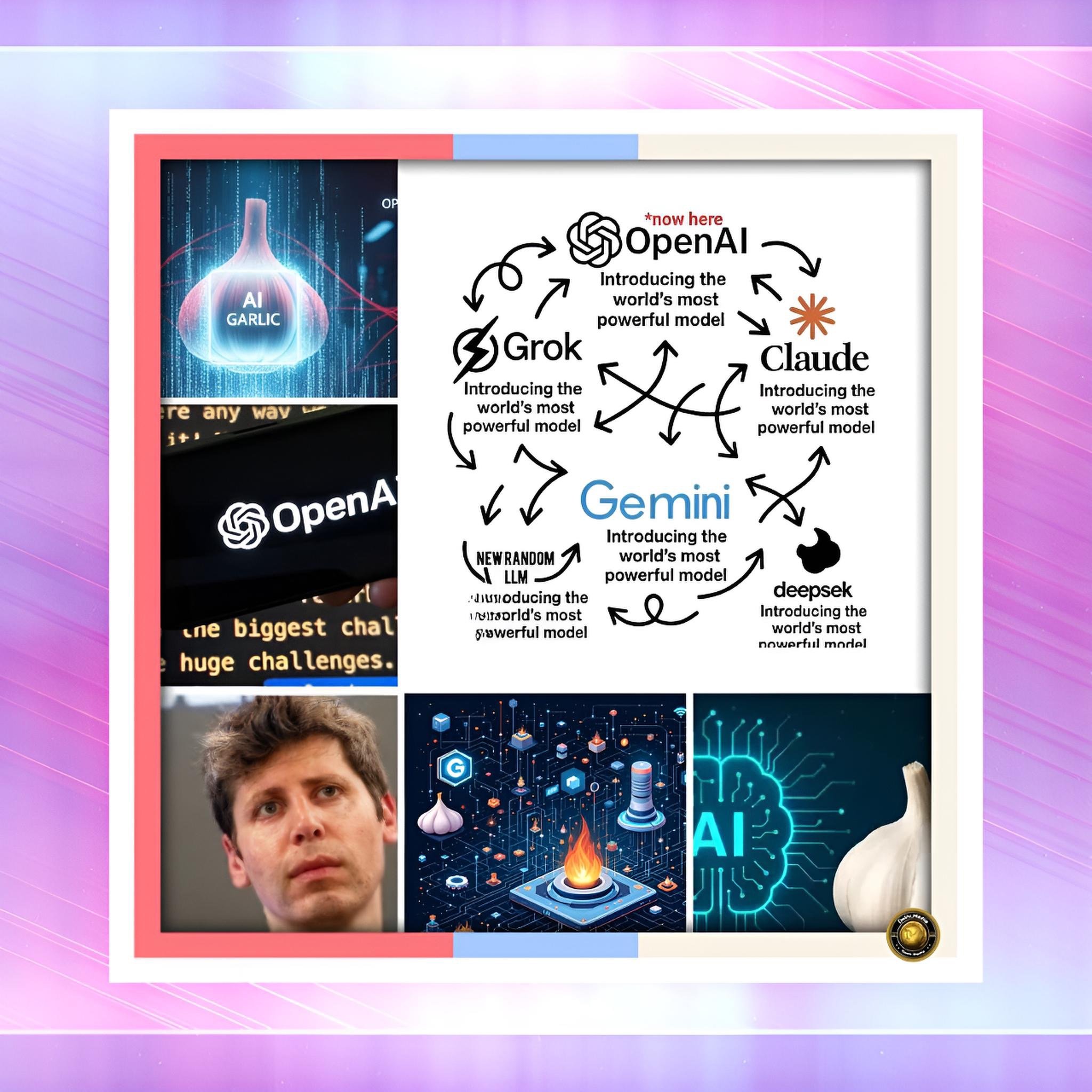

By January 2026, the competitive positioning of both organisations reveals market segmentation along unexpected dimensions.

ChatGPT, despite a decline in market share from 87.2 percent in January 2025 to 68 percent by January 2026, remains dominant in consumer artificial intelligence adoption. The platform processes over 1 billion searches weekly, its API handles 6 billion tokens per minute, and it has 800 million weekly active users. This user base consists predominantly of consumers, content creators, startups, and general-purpose users seeking help with writing, coding, brainstorming, and analysis.
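Because market share is itself a percentage, ChatGPT's decline can be read two ways: as a fall in percentage points or as a relative fall. A short calculation on the figures above makes the distinction explicit.

```python
from typing import Tuple


def share_change(before_pct: float, after_pct: float) -> Tuple[float, float]:
    """Return (change in percentage points, relative change in percent)."""
    points = after_pct - before_pct
    relative = points / before_pct * 100
    return points, relative


points, relative = share_change(87.2, 68.0)
print(f"{points:.1f} points, {relative:.1f}% relative")  # -19.2 points, -22.0% relative
```

In other words, ChatGPT lost roughly a fifth of its share over the year while remaining the clear market leader.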

OpenAI's annual recurring revenue reached $10 billion by June 2025, with projections targeting $125 billion in annual revenue by 2029.

Claude's market positioning, by contrast, reflects fundamentally different strategic priorities. With approximately 30 million monthly active users and 2 to 3.5 percent market share, Claude exhibits substantially lower consumer penetration than ChatGPT. However, this positioning obscures the profound revenue concentration and customer depth within the enterprise segment.

Anthropic's revenue structure reveals 80 percent derivation from approximately 300,000 business customers, compared to OpenAI's reliance upon consumer subscriptions for 60 percent of revenue.

Anthropic's annual recurring revenue reached approximately $2.2 billion in 2025, with projections for $9 billion by year-end 2025 and $20 to $26 billion by 2026.

Most significantly, Claude Code, an agentic coding tool released as a research preview in February 2025 and made generally available in May 2025, achieved $400 million in annualised run-rate revenue by July 2025, scaling to $500 million by December 2025, with usage growing more than tenfold in three months.

The customer base composition further illuminates this segmentation.

Seventy percent of Fortune 100 companies use Claude in some capacity, including organisations such as Novo Nordisk, Bridgewater Associates, Stripe, and Slack. For these organisations, reliability, interpretability, and compliance alignment appear to outweigh any shortfall in raw performance relative to ChatGPT. Approximately 84,000 developers have built applications on Claude, with 3,200 software-as-a-service products and internal tools powered by it.

The platform processes 25 billion API calls monthly, with 45 percent originating from enterprise customers—a concentration of enterprise utilisation substantially exceeding industry norms.

Risk Tolerance Differentiation and Governance Implications

The Safety Tax That Pays Dividends—Why Regulated Industries Choose Claude Despite Lower Capability

The market segmentation reflects fundamentally distinct risk tolerance profiles. Consumer applications and startup environments can accommodate greater error rates, hallucination frequencies, and compliance ambiguity provided that capabilities exceed competitive offerings and user experience remains engaging.

Conversely, regulated industries including financial services, healthcare, and public administration require substantial confidence in interpretability, compliance adherence, and freedom from outputs violating regulatory frameworks.

ChatGPT presents substantial compliance risks within regulated environments. The platform has historically processed content on United States infrastructure (OpenAI introduced European data residency for ChatGPT Enterprise, Edu, and API customers only in 2025), which complicates compliance with the General Data Protection Regulation's rules on international data transfers for organisations processing European residents' personal data through ChatGPT.

The Samsung incident of 2023, wherein semiconductor division engineers inadvertently disclosed confidential source code through ChatGPT, catalysed widespread organisational apprehension regarding intellectual property protection and trade secret exposure. Contemporary research by EY and regulatory bodies identifies hallucination—the production of plausible-sounding but fabricated information—as particularly problematic within low-fault-tolerance industries including legal services, financial advisory, and medical diagnosis.

Anthropic's governance structure and safety architecture address these concerns through systematic design. Constitutional AI makes the principles governing model behaviour explicit during training, rather than leaving them implicit in human preference labels or bolting them on as a post-hoc compliance layer. The published constitution documents those principles, which facilitates compliance demonstrations to regulators and review by governance committees. Enterprise customers receive detailed explanations of model limitations, constitutional principles, and safety mechanisms, a level of transparency that supports informed deployment decisions.

The Public Benefit Corporation structure legally requires directors to balance shareholders' financial interests against the company's stated public benefit, which is intended to prevent safety considerations from being subordinated to profit maximisation through conventional shareholder pressure.

Specific manifestations of this differentiation emerged throughout 2025. OpenAI faced regulatory scrutiny from the European Commission, the UK Information Commissioner's Office, and the Swiss Federal Data Protection and Information Commissioner regarding data privacy practices, insufficient transparency concerning training data, and inadequate compliance mechanisms.

Anthropic, conversely, engaged constructively with regulatory initiatives, participated in voluntary industry safety commitments, and collaborated with academic researchers on alignment and interpretability research.

This divergence reflects not merely different corporate public relations strategies but rather fundamentally distinct organisational structures creating different incentive alignments concerning regulatory engagement.

Cause-and-Effect Analysis: Strategic Divergence and Commercial Outcomes

How Philosophical Choices Became Commercial Destiny—The Mechanics Behind Anthropic's Surprising Revenue Acceleration

The philosophical divergence between rapid innovation and safety prioritisation has produced cascading commercial consequences extending across multiple dimensions. OpenAI's rapid release cadence, from GPT-3 (2020) through GPT-3.5 and ChatGPT (2022), GPT-4 and GPT-4 Turbo (2023), and GPT-4o (2024), created substantial first-mover advantages in consumer mindshare.

The explosive adoption of ChatGPT established network effects and habitual usage patterns among 800 million weekly users, creating switching costs and competitive defensibility. This velocity, however, produced secondary consequences including regulatory backlash, compliance concerns, and enterprise apprehension regarding reliability and interpretability.

Anthropic's more deliberate pacing, from Claude 1 and 2 (2023) through the Claude 3 family and Claude 3.5 Sonnet (2024) to Claude 3.7 Sonnet, the Claude 4 family, and Claude Code (2025), incorporated longer intervals between major releases, permitting rigorous safety validation and enterprise feature development.

This pacing produced slower consumer adoption but facilitated deeper enterprise relationships, higher revenue per user, and, this analysis contends, stronger customer retention and lifetime value. The market data are consistent with this dynamic: Anthropic's revenue, which has reportedly grown roughly tenfold annually for three consecutive years, has grown far faster in percentage terms than ChatGPT's user base, despite Claude's much lower absolute user count.
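Sustained tenfold annual growth compounds quickly, which is why growth rates and absolute scale should be read separately. The sketch below takes the tenfold-for-three-years figure at face value; `implied_annual_factor` is a helper introduced here that inverts the calculation for any start and end values.

```python
def compound_multiple(annual_factor: float, years: int) -> float:
    """Total growth multiple after `years` of constant annual growth."""
    return annual_factor ** years


def implied_annual_factor(start: float, end: float, years: int) -> float:
    """Constant annual growth factor that turns `start` into `end`."""
    return (end / start) ** (1 / years)


print(compound_multiple(10, 3))                         # 1000
print(round(implied_annual_factor(1.0, 1000.0, 3), 6))  # 10.0
```

Tenfold growth for three years is a thousandfold increase overall, so a company starting from a small base can post far higher growth rates than a market leader while remaining much smaller in absolute terms.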

The causal mechanism underlying this outcome reflects customer segmentation and organisational incentive alignment. Consumer customers optimise for capability, novelty, and user experience engagement, dimensions favouring OpenAI's rapid iteration approach. Enterprise customers optimise for reliability, compliance, interpretability, and long-term partnership stability, dimensions favouring Anthropic's deliberate approach.

OpenAI's organisational structure creates incentives to maximise addressable market size and user acquisition velocity, naturally producing a consumer orientation. Anthropic's Public Benefit Corporation structure creates incentives to maximise customer value and mission alignment, naturally producing an enterprise orientation.

These structural differences produce strategic outcomes that appear inevitable in retrospect rather than contingent upon particular leadership decisions.

Future Trajectory and Emerging Strategic Tensions

The Regulatory Reckoning—Why OpenAI's Speed Model Faces Increasing Headwinds From Governance Requirements

Both organisations project substantial growth through the end of the decade. OpenAI targets $125 billion in annual revenue by 2029, to be achieved through consumer subscription conversion (targeting 220 million paid users, against a current base of 800 million weekly users), API monetisation, and advertising within search functionality.
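The subscription target implies a demanding conversion rate. Dividing 220 million paid users by the current 800 million weekly users gives the naive required rate if the user base stays flat; the 1.5 billion figure in the second call is a hypothetical larger base, included only to show how user growth would lower the required rate.

```python
def required_conversion(paid_users: float, user_base: float) -> float:
    """Share of the user base that must pay to reach a paid-user target."""
    return paid_users / user_base


print(f"{required_conversion(220e6, 800e6):.1%}")  # 27.5%
print(f"{required_conversion(220e6, 1.5e9):.1%}")  # 14.7%
```

The projection therefore rests either on converting an unusually large share of today's users or on substantial further growth in the user base.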

This projection assumes sustained consumer preference for ChatGPT despite competitive pressure from Google Gemini (currently 18.2 percent market share, growing at 237 percent annually) and emerging alternatives including DeepSeek and Perplexity.

Anthropic projects $70 billion in annual revenue by 2028, to be achieved through enterprise API expansion, vertical solution development (e.g., Claude for Financial Services), and deeper integration into enterprise workflow platforms.

This projection assumes continued enterprise preference for models emphasising reliability and compliance—a reasonable assumption given regulatory trends strengthening data protection and AI governance requirements globally.

Emerging strategic tensions, however, present challenges for both organisations. For OpenAI, the principal tension involves sustaining growth as consumer adoption begins to plateau and as regulatory pressure intensifies over data privacy, copyright compliance, and misinformation.

The advertising monetisation strategy, whilst theoretically capable of producing $200 billion in annual revenue, faces substantial technical and regulatory challenges and must compete against Google's entrenched advertising infrastructure.

Additionally, regulatory requirements in the European Union and California, together with proposals under discussion in the United Kingdom and other jurisdictions, increasingly mandate capability documentation, impact assessment, and governance mechanisms that resemble Anthropic's built-in approach more closely than OpenAI's deployment-time approach.

For Anthropic, the principal tensions involve scaling compute capacity for model training whilst maintaining safety discipline, navigating regulatory scrutiny of the Amazon and Google investments and the potential conflicts of interest they create, and sustaining competitive differentiation as safety-conscious approaches become standard industry practice.

The company's ability to reach its $70 billion revenue projection depends critically on converting Claude's enterprise reliability advantage into dominance in vertical-specific solutions before competitors incorporate constitution-inspired safety mechanisms into their own platforms.

Conclusion

Two Paths, One Destination—Why Both OpenAI and Anthropic May Be Right About Artificial Intelligence's Future

The contemporary artificial intelligence landscape reflects not merely competitive differentiation between OpenAI and Anthropic but rather the emergence of two viable models for developing transformative artificial intelligence systems.

OpenAI's model emphasises rapid capability advancement, mass-market accessibility, and iterative safety integration within deployment environments. Anthropic's model emphasises deliberate capability advancement, enterprise reliability, and proactive safety embedding within training architectures.

Both models have demonstrated commercial viability, though within distinct market segments reflecting different customer risk tolerance profiles.

The implications of this divergence extend substantially beyond corporate competition. The regulatory environment increasingly favours Anthropic's approach, with requirements for interpretability, compliance documentation, and governance transparency emerging across multiple jurisdictions.

Conversely, the consumer market, which offers a vastly larger addressable base despite lower revenue per user, continues to reward OpenAI's capability-prioritising approach. Neither model demonstrates intrinsic superiority across all dimensions; rather, each model proves optimised for distinct customer populations and risk tolerance profiles.

The fundamental question animating both organisations remains unresolved: whether advanced artificial intelligence systems are most safely developed through rapid iteration in production environments or through deliberate engineering emphasising pre-deployment safety validation.

The commercial success of both approaches to date provides insufficient empirical basis for determining which methodology better ensures long-term safety and beneficial outcomes. The resolution of this question will substantially influence artificial intelligence governance, regulatory framework design, and the eventual distribution of benefits and risks arising from transformative AI systems.

The trajectory through 2026 and beyond will test these competing models rigorously. Should regulatory requirements continue strengthening toward the Anthropic model, OpenAI may confront substantial pressure to restructure governance and safety mechanisms. Should consumer preferences remain predominantly oriented toward capability maximisation, Anthropic may encounter competitive pressure requiring accelerated release schedules.

The outcome will determine not merely the market positioning of these organisations but the broader trajectory of artificial intelligence development and governance globally.