The Trillion-Dollar Gamble: Why AI's Infrastructure Crisis Will Define Technology's Next Decade - Part II

Executive Summary

The Artificial Intelligence Infrastructure Imperative: How OpenAI, Google, and Anthropic Are Reshaping the Global Data Center Landscape

The artificial intelligence revolution, whilst transformative in its technological implications, has precipitated an unprecedented infrastructure crisis that threatens to reshape global energy systems and financial markets. OpenAI, Google, and Anthropic have collectively committed over $625 billion towards constructing massive data centre facilities designed to power next-generation foundation models.

This FAF analysis examines how these three companies have diverged markedly in their infrastructure strategies, their adoption of custom silicon, and their paths to profitability.

The analysis reveals a fundamental tension between the exponential capital requirements of AI infrastructure and the nascent revenue models designed to justify such investments.

Whilst these organisations have achieved remarkable computational efficiency gains and demonstrated compelling enterprise adoption metrics, they face formidable constraints emanating from power grid limitations, rapid hardware obsolescence cycles, and the profound mismatch between multi-year financing obligations and biennial chip replacement cycles.

The pathway to sustainable profitability requires not merely continued investment escalation, but rather a fundamental recalibration of infrastructure philosophy, energy procurement strategies, and customer monetisation frameworks.

Introduction

The Architecture of Artificial Intelligence Infrastructure: Understanding the Foundation

The infrastructure underpinning modern artificial intelligence systems constitutes far more than mere computational aggregation. Rather, it represents an entirely new category of industrial facility, merging characteristics of traditional power generation facilities, semiconductor manufacturing complexes, and high-performance computing centres into unified, hyperscale ecosystems.

These installations operate at previously unattainable densities of computational power, consuming as much electricity as small cities whilst generating thermal loads that strain the capacity of conventional cooling methods.

The emergence of these facilities signals a fundamental inflection point in technological capital allocation, wherein cloud-native software companies have necessarily transformed into vertically-integrated infrastructure proprietors.

The three principal companies in this domain—OpenAI, Google, and Anthropic—have adopted divergent yet complementary strategies.

OpenAI, founded in 2015 as a non-profit and restructured around a capped-profit entity in 2019, has pursued an aggressive vertical integration approach, retaining primary operational control of massive proprietary data centre complexes whilst leveraging partnerships with established infrastructure operators and component manufacturers.

Google, operating as a subsidiary of Alphabet, possesses substantial data centre assets accumulated over two decades of search and cloud infrastructure development, enabling it to deploy incremental capacity with greater financial flexibility.

Anthropic, the youngest of the three entities, has strategically positioned itself as a hybrid operator, maintaining partnerships with established cloud providers whilst simultaneously committing to proprietary infrastructure development.

These divergent trajectories, whilst superficially appearing contradictory, in fact represent nuanced responses to differing market positions, competitive pressures, and technological capabilities.

Historical Development and Contemporary Status: From Cloud Computing to AI Factories

Data centre infrastructure for artificial intelligence has evolved through identifiable phases, each representing distinct technological and commercial imperatives.

During the initial period spanning 2012 to 2020, deep learning workloads were predominantly executed on cloud provider infrastructure, specifically utilising NVIDIA graphics processing units procured through Amazon Web Services and Microsoft Azure.

This arrangement offered simplicity and capital efficiency, permitting AI development companies to externalise infrastructure complexity and focus exclusively on algorithmic advancement.

However, the emergence of transformer-based large language models, commencing with the 2017 introduction of the transformer architecture and accelerating dramatically following the November 2022 public release of ChatGPT, fundamentally altered this equilibrium.

The computational demands of training and serving contemporary foundation models exceeded the capacity of existing infrastructure. At the same time, the per-unit costs of cloud-based computing, when amortised across the massive scale required for competitive AI development, proved economically unsustainable for companies seeking to maintain proprietary model capabilities.

OpenAI's trajectory exemplifies this transition. Following substantial investment from Microsoft beginning in 2019, the company initially operated through Azure infrastructure, securing access to critical computational capacity whilst avoiding direct infrastructure ownership.

Nevertheless, by 2025, the magnitude of required computational resources, coupled with the need to achieve unit-level profitability at massive scale, demanded an unprecedented commitment.

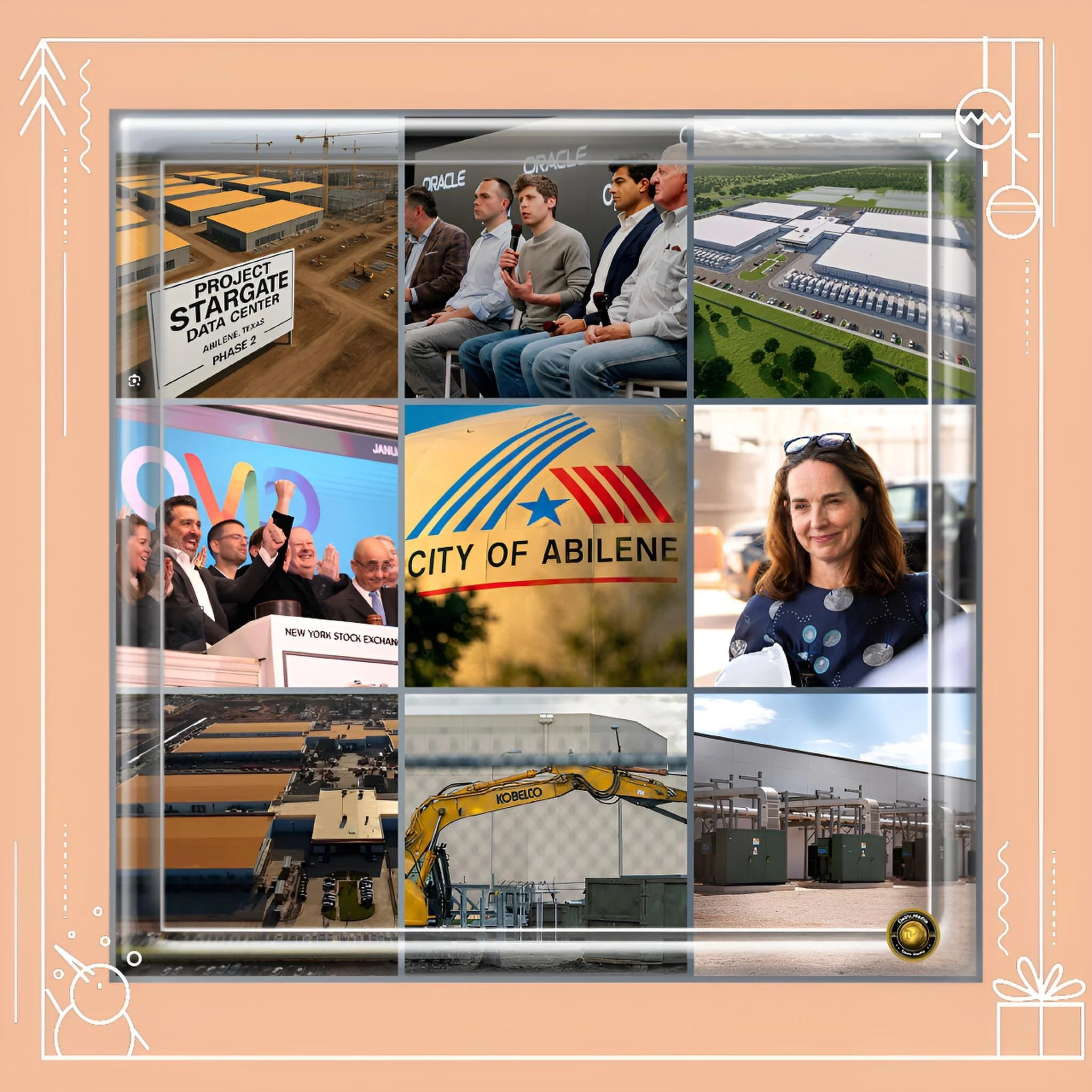

In January 2025, OpenAI, in conjunction with SoftBank and Oracle, announced the Stargate project, committing a nominal $500 billion over four years to develop ten gigawatts of computational capacity, predominantly in the United States.

Google's trajectory diverged substantially. With extensive data centre infrastructure already developed for search, advertising, and cloud services, the company had the capital deployment mechanisms and real estate portfolio needed to expand incrementally.

In 2025, Google announced a $75 billion capital expenditure commitment for artificial intelligence infrastructure, representing a 43% year-over-year increase, coupled with strategic acquisitions of renewable energy assets including the $4.75 billion acquisition of Intersect Power, indicating a fundamental recalibration towards energy infrastructure ownership.

Anthropic was founded as an independent entity in 2021 following the departure of Dario Amodei and colleagues from OpenAI. Positioned explicitly as an alternative to OpenAI emphasising constitutional artificial intelligence and safety research, the company initially operated through partnerships with established cloud providers.

However, demonstrating remarkable enterprise adoption velocity, Anthropic accumulated over 300,000 business customers within a compressed timeframe.

The November 2025 announcement of a $50 billion partnership with Fluidstack to construct custom data centres in Texas and New York reflected the company's determination to achieve infrastructure parity with competitors whilst maintaining operational independence.

Key Developments and Strategic Divergences: The Infrastructure Arms Race

The contemporary landscape of artificial intelligence infrastructure exhibits three distinct but interrelated strategic orientations, each reflecting different competitive philosophies and organisational capabilities.

OpenAI's Stargate Initiative and Custom Silicon Strategy

OpenAI's infrastructure commitment extends considerably beyond data centre construction. The company announced, in October 2025, a transformative partnership with Broadcom for the development and deployment of 10 gigawatts of custom artificial intelligence accelerators, estimated at $350 billion in aggregate value.

This development represents a fundamental departure from exclusive reliance upon NVIDIA's graphics processing units, which have historically dominated artificial intelligence computational infrastructure.

The custom silicon initiative is expected to reduce OpenAI's per-unit computational costs by an estimated 30 to 40 percent relative to general-purpose GPU acquisition, whilst also reducing dependency upon a single vendor whose capacity constraints have repeatedly emerged as competitive bottlenecks.
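Taking both reported estimates at face value, a back-of-envelope calculation shows what a 30 to 40 percent per-unit saving would mean at the scale of the roughly $350 billion Broadcom programme. This is a sketch under a simplifying assumption: the saving is treated as applying uniformly to the entire programme, and the function name is illustrative rather than drawn from any source.

```python
def equivalent_gpu_spend(custom_spend_bn: float, saving: float) -> float:
    """Spend needed to buy the same capacity with general-purpose GPUs,
    assuming custom silicon costs (1 - saving) times as much per unit."""
    if not 0.0 <= saving < 1.0:
        raise ValueError("saving must lie in [0, 1)")
    return custom_spend_bn / (1.0 - saving)


CUSTOM_PROGRAMME_BN = 350.0  # reported aggregate estimate for the programme

for saving in (0.30, 0.40):
    gpu_bn = equivalent_gpu_spend(CUSTOM_PROGRAMME_BN, saving)
    avoided_bn = gpu_bn - CUSTOM_PROGRAMME_BN
    print(f"{saving:.0%} saving: equivalent GPU spend ~${gpu_bn:,.0f}bn, "
          f"avoided cost ~${avoided_bn:,.0f}bn")
```

Under these assumptions, the same capacity bought as general-purpose GPUs would cost roughly $500 billion to $583 billion, which is why even a modest per-unit saving matters at this scale.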

The Stargate build-out has accelerated markedly, with multiple facilities already operational in late 2025. The Abilene, Texas campus, designated as the flagship facility, employed over 6,400 construction workers by mid-2025 and already hosts NVIDIA GB200 accelerators running early-stage model training.

The subsequent announcement of five additional sites (three developed by Oracle in Texas, New Mexico, and the Midwest, and two developed by SoftBank in Ohio and Texas) brought the initiative's planned capacity to nearly seven gigawatts, putting it ahead of the schedule needed to secure the full ten-gigawatt commitment by end-2025.

Google's Efficiency-Oriented Custom Silicon and Energy Integration

Google's infrastructure philosophy diverges markedly from OpenAI's emphasis upon newly built proprietary capacity. Rather than pursuing full vertical integration from scratch, Google has strategically emphasised efficiency optimisation through its long-established custom tensor processing unit ecosystem, energy integration partnerships, and hybrid deployment models.

The company's most recent innovation, the seventh-generation tensor processing unit designated Ironwood, announced in April 2025 and made generally available in late 2025, delivers power efficiency roughly 30 times that of Google's first Cloud TPU, introduced in 2018.

This trajectory of steady, generation-over-generation improvement, whilst exceeding the performance gains of competing general-purpose processors, reflects Google's preference for incremental refinement rather than revolutionary architectural redesign.
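To make "incremental" concrete, the roughly 30-fold efficiency gain can be converted into an equivalent constant annual rate. The sketch assumes, for simplicity, that the gain accrued evenly over the seven years from 2018 to 2025; in reality TPU generations arrived in discrete steps.

```python
import math


def annualised_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_factor` over `years`."""
    return total_factor ** (1.0 / years)


yearly = annualised_factor(30.0, 2025 - 2018)
print(f"~{yearly:.2f}x per year (~{yearly - 1:.0%} annual efficiency gain)")

# Compounding the yearly multiplier for seven years recovers the ~30x total.
assert math.isclose(yearly ** 7, 30.0)
```

A sustained gain of roughly 60 percent a year is substantial, but it arrives as steady compounding rather than as a single architectural leap.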

Google's energy strategy, articulated through partnerships with NextEra Energy and the direct acquisition of renewable generation assets, demonstrates explicit recognition that energy procurement represents the binding constraint upon infrastructure expansion.

The $25 billion commitment to infrastructure in Pennsylvania, coupled with investments in hydropower facilities, reflects an understanding that serving capacity (the ability to respond to user requests once models have been trained) increasingly determines competitive positioning, and that serving capacity is ultimately bounded by the power available to run it.

As Amin Vahdat, the senior vice president responsible for Google's artificial intelligence infrastructure, articulated to employees in November 2025, Google must double its serving capacity every six months to accommodate demand growth, with an aspirational target of one-thousandfold scaling within four to five years.
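The two targets in Vahdat's remarks can be checked against each other with a few lines of arithmetic. The sketch below, whose function names are illustrative, computes the capacity multiple implied by doubling every six months and, conversely, the doubling period needed to reach 1,000-fold growth.

```python
import math


def growth_multiple(years: float, doubling_period_years: float = 0.5) -> float:
    """Capacity multiple after `years` if capacity doubles every period."""
    return 2.0 ** (years / doubling_period_years)


def doubling_period_for(target_multiple: float, years: float) -> float:
    """Doubling period (in years) required to reach `target_multiple` in `years`."""
    return years * math.log(2.0) / math.log(target_multiple)


for years in (4, 5):
    months = 12 * doubling_period_for(1_000, years)
    print(f"{years} years: doubling every 6 months gives {growth_multiple(years):,.0f}x; "
          f"1,000x needs a doubling every {months:.1f} months")
```

Doubling every six months delivers roughly 1,000-fold growth only at the five-year end of the range; reaching the target in four years would require a doubling roughly every five months.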

Anthropic's Diversified Multi-Cloud Strategy

Anthropic has pursued a consciously differentiated approach emphasising diversification across multiple computational platforms and cloud providers rather than exclusive reliance upon proprietary infrastructure.

The company's infrastructure strategy encompasses three distinct computational pathways: Google Cloud tensor processing units (with commitments for over one million units anticipated in 2026), Amazon Web Services proprietary Trainium chips deployed through Project Rainier, and NVIDIA graphics processing units.

This deliberate portfolio approach optimises for cost, performance, and resilience across distinct computational tasks, particularly distinguishing between the fundamentally different requirements of model training, model inference, and research-oriented workloads.
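The portfolio logic can be illustrated with a deliberately simplified placement routine: each workload type goes to the cheapest platform that can run it, spilling over to the next-cheapest when capacity runs out. Everything here is hypothetical, including the platform labels, relative costs, and capacities; it is a toy model of the cost-and-resilience trade-off, not a description of how Anthropic actually schedules work.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Platform:
    name: str
    relative_cost: dict[str, float]  # cost per unit of work, keyed by workload type
    capacity: float                  # units of work available this period


def place(demand: dict[str, float], platforms: list[Platform]) -> dict[str, dict[str, float]]:
    """Greedy placement: cheapest eligible platform first, overflow to the next."""
    spare = {p.name: p.capacity for p in platforms}
    plan: dict[str, dict[str, float]] = {w: {} for w in demand}
    for workload, units in demand.items():
        eligible = sorted(
            (p for p in platforms if workload in p.relative_cost),
            key=lambda p: p.relative_cost[workload],
        )
        for p in eligible:
            take = min(units, spare[p.name])
            if take > 0:
                plan[workload][p.name] = take
                spare[p.name] -= take
                units -= take
        if units > 0:
            raise RuntimeError(f"not enough capacity for {workload!r}")
    return plan


# Hypothetical numbers chosen only to exercise the overflow path.
platforms = [
    Platform("tpu", {"training": 1.0, "inference": 0.8}, capacity=100),
    Platform("trainium", {"training": 0.9, "inference": 1.0}, capacity=60),
    Platform("gpu", {"training": 1.2, "inference": 1.1, "research": 1.0}, capacity=80),
]
print(place({"training": 120, "inference": 70, "research": 20}, platforms))
```

Because the greedy pass handles workload types in order, it is not globally cost-optimal; a linear program would be the natural refinement. What it does illustrate is resilience: when the cheapest platform is saturated, demand overflows to another supplier instead of going unserved.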

The Fluidstack partnership announced in November 2025 commits Anthropic to $50 billion in infrastructure investment, with custom-built facilities in Texas and New York and additional sites under development.

Critically, this commitment does not represent a pivot away from cloud partnerships; rather, it constitutes an additional layer within a diversified infrastructure ecosystem.

Anthropic's multi-platform strategy, whilst potentially appearing duplicative relative to OpenAI's more vertically integrated approach, provides strategic advantages including reduced vendor dependency, enhanced negotiating leverage, and optimisation of cost structures across heterogeneous workloads.

Contemporary Status and Latest Developments: The Profitability Paradox

The contemporary infrastructure landscape reveals a fundamental and largely unacknowledged tension between the unprecedented capital deployment occurring throughout 2025 and the nascent capacity of these companies to generate sustainable profitability justifying such investments. OpenAI's financial position exemplifies this duality.

The company projected approximately $12.7 billion in revenue for 2025, up from approximately $3.7 billion in 2024, a roughly 3.4-fold increase (about 243 percent growth) in a single year. This extraordinary growth trajectory, with few precedents in the modern history of enterprise software, masks underlying unit economic challenges.

The company's compute margin, broadly the share of revenue remaining after paying for the computing infrastructure, electricity, and related overhead needed to serve customers, reached approximately 70 percent by October 2025, up substantially from 52 percent at the close of 2024.

This metric represents genuinely impressive operational efficiency, approaching the gross margins typical of established software providers and suggesting that the core service of running models for paying customers is already profitable.

However, this operational profitability does not extend to the business as a whole: once capital expenditures are included, the company faces negative free cash flow projected at approximately $9 billion for 2025, reflecting its strategic decision to prioritise infrastructure investment over near-term profitability.
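The gap between a 70 percent compute margin and a $9 billion cash shortfall can be bridged with rough arithmetic. The sketch below combines the three reported figures; because they differ in definition and timing, the residual it computes (labelled `other_outflows_bn`, a name introduced here) is only an order-of-magnitude indication of the spending that sits outside the compute margin, such as model training, capital expenditure, and staff.

```python
REVENUE_BN = 12.7      # projected 2025 revenue
COMPUTE_MARGIN = 0.70  # reported compute margin, October 2025
CASH_BURN_BN = 9.0     # projected 2025 cash shortfall including capex

serving_compute_bn = REVENUE_BN * (1.0 - COMPUTE_MARGIN)
left_after_serving_bn = REVENUE_BN - serving_compute_bn

# Whatever is left after serving costs, plus the shortfall, must be consumed
# by everything the compute margin leaves out.
other_outflows_bn = left_after_serving_bn + CASH_BURN_BN

print(f"serving compute ≈ ${serving_compute_bn:.1f}bn; "
      f"left after serving ≈ ${left_after_serving_bn:.1f}bn; "
      f"implied other outflows ≈ ${other_outflows_bn:.1f}bn")
```

On these rough numbers, spending outside the compute margin is on the order of $18 billion, well above total revenue, which is how a business with a healthy serving margin can still burn cash.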

Google's financial position, whilst substantially stronger in absolute terms given Alphabet's dominant market position and historical profitability, similarly confronts profound questions regarding infrastructure capital allocation.

The company's announcement that it must double artificial intelligence serving capacity every six months to accommodate demand, coupled with a $75 billion annual infrastructure commitment, represents a structural shift in capital allocation priorities.

Whilst such commitments remain financially sustainable given Google's enormous revenue base and historically robust margins, the velocity of infrastructure spending far exceeds historical precedent and demands correspondingly accelerated revenue growth to justify continued expansion.

The financing landscape surrounding artificial intelligence infrastructure was transformed during 2025.

Aggregate data centre financing, incorporating both debt and equity instruments, reached approximately $125 billion in 2025, a more than eightfold increase from the $15 billion in financing observed during the comparable period in 2024.

This explosive growth in debt-financed infrastructure reflects conscious decisions by large technology companies to offload infrastructure-related liabilities through sophisticated financial instruments, reducing the accounting impact upon their consolidated balance sheets.

Companies including Meta, Oracle, and xAI have established special purpose vehicles and tapped private credit markets to finance infrastructure expansion, a financial innovation that permits rapid deployment whilst minimising the accounting visibility of associated liabilities.

Causal Mechanisms and Consequences: Why Infrastructure Expansion Accelerates Despite Economic Uncertainty

The apparent paradox of accelerating infrastructure investment in the face of substantive questions regarding profitability reflects several interconnected causal mechanisms, each rendering continued expansion seemingly inevitable despite considerable financial risk.

The first and most fundamental mechanism reflects the competitive nature of artificial intelligence development itself. The generation of novel capabilities in contemporary foundation models demonstrates clear correlation with computational resource magnitude.

OpenAI's GPT-5 model, released in 2025, exhibited substantially superior performance relative to its predecessor, improvements that materialised alongside the deployment of dramatically augmented computational capacity.

This empirical observation, whether it reflects a true causal relationship or mere correlation, nonetheless creates a competitive imperative: any company unable to match competitors' computational resources risks technological obsolescence.

From this perspective, the Stargate investment, irrespective of its financial rationality under traditional capital budgeting, reflects competitive necessity. Failure to invest at comparable scale risks permanent competitive disadvantage, a prospect that company leaders understandably regard as intolerable.

The second causal mechanism reflects the exponential growth in enterprise demand for artificial intelligence services.

Anthropic reported that large enterprise accounts, defined as customers generating over $100,000 in annualised revenue, grew nearly sevenfold within the preceding year, genuinely exceptional customer acquisition dynamics.

OpenAI disclosed that Fortune 500 company adoption reached 92 percent, an extraordinary penetration rate that presages continued demand growth as artificial intelligence capabilities diffuse throughout corporate operations.

From the perspective of service providers, infrastructure investment responds to genuine customer demand rather than speculative excess; companies are fundamentally constrained in their capacity to serve customers by available computational resources.

The third causal mechanism reflects confidence in the technological trajectory, particularly regarding the continued validity of the transformer architecture and of neural scaling laws, first articulated in OpenAI's 2020 research and refined by DeepMind's 2022 Chinchilla study.

If the fundamental assumptions underlying these scaling laws remain valid—that model capability correlates reliably with computational scale—then current infrastructure investment represents not excessive speculation but rather rational response to legitimate demand for increasingly capable systems.
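The kind of scaling law this argument relies on can be sketched with the parametric loss fit from the Chinchilla study, L(N, D) = E + A/N^α + B/D^β, where N is parameter count and D is training tokens. The constants below are the commonly cited fitted values; the compute rule C ≈ 6ND and the split of roughly 20 tokens per parameter are standard approximations. Treat the output as illustrating the shape of the curve, not as a forecast for any particular model.

```python
import math

# Commonly cited Chinchilla fit (Hoffmann et al., 2022); illustrative only.
E, A, B = 1.69, 406.4, 410.7
ALPHA, BETA = 0.34, 0.28


def predicted_loss(n_params: float, n_tokens: float) -> float:
    """Parametric pre-training loss as a function of model and data size."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def compute_optimal(flops: float, tokens_per_param: float = 20.0) -> tuple[float, float]:
    """Split a compute budget using C ≈ 6·N·D with D = k·N, so N = sqrt(C / 6k)."""
    n = math.sqrt(flops / (6.0 * tokens_per_param))
    return n, tokens_per_param * n


losses = []
for exponent in range(22, 27):
    n, d = compute_optimal(10.0**exponent)
    losses.append(predicted_loss(n, d))
    print(f"1e{exponent} FLOPs: N ≈ {n:.1e}, D ≈ {d:.1e}, loss ≈ {losses[-1]:.3f}")
```

Each tenfold increase in compute lowers predicted loss, but by a shrinking amount: that pattern makes capability gains predictable enough to justify investment while demanding exponentially more infrastructure for each further step.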

However, a fourth mechanism reflects the financial structures and incentive systems through which infrastructure expansion is financed. The emergence of sophisticated financial instruments, special purpose vehicles, and private credit markets has, paradoxically, made infrastructure investment increasingly attractive from a corporate finance perspective.

Rather than consuming balance sheet capacity and reducing reported profitability, carefully structured infrastructure financing permits rapid expansion whilst minimising accounting visibility. This structural evolution, whilst legitimate from a financial engineering perspective, introduces profound moral hazard whereby infrastructure expansion decisions may become disconnected from underlying economic rationality.

Limitations and Overcoming Constraints: The Path Forward

The artificial intelligence infrastructure boom confronts multiple binding constraints, each potentially limiting the pace and magnitude of continued expansion. These limitations and the mechanisms through which they are being overcome provide critical insight into the future trajectory of infrastructure development.

Power and Energy Constraints

The most acute and universally acknowledged limitation reflects grid capacity and energy availability. Data centre facilities utilising state-of-the-art artificial intelligence accelerators consume electricity measured in hundreds of megawatts per facility, comparable to the power consumption of small cities.
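A quick conversion shows why a facility drawing hundreds of megawatts is compared with a small city. The sketch assumes continuous operation (a load factor of 1.0, an upper bound) and an illustrative 10,500 kWh of annual electricity use per household; both are assumptions to be replaced with local figures.

```python
HOURS_PER_YEAR = 8_760
KWH_PER_HOUSEHOLD = 10_500  # assumed annual household consumption (illustrative)


def annual_energy_gwh(power_mw: float, load_factor: float = 1.0) -> float:
    """Energy drawn in a year by a load of `power_mw` at the given load factor."""
    return power_mw * HOURS_PER_YEAR * load_factor / 1_000


def household_equivalent(power_mw: float, load_factor: float = 1.0) -> float:
    """Number of households with the same annual consumption."""
    kwh = annual_energy_gwh(power_mw, load_factor) * 1_000_000
    return kwh / KWH_PER_HOUSEHOLD


for mw in (100, 300, 500):
    print(f"{mw} MW ≈ {annual_energy_gwh(mw):,.0f} GWh/yr ≈ "
          f"{household_equivalent(mw):,.0f} households")
```

Even at 100 megawatts the annual draw matches tens of thousands of homes; at 300 to 500 megawatts it reaches the hundreds of thousands.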

These demands arrive in geographies where transmission infrastructure, designed decades prior, fundamentally lacks capacity for such load growth. The United States energy system, despite decades of technological advancement, exhibits transmission expansion rates that dramatically lag load growth.

Interconnection queues for new generation and storage projects, administered by regional grid operators under Federal Energy Regulatory Commission rules, now stretch across multi-year timelines, rendering traditional utility expansion pathways incapable of supporting AI infrastructure demands on the required schedule.

The mechanism through which this constraint is being overcome represents a fundamental recalibration of infrastructure development philosophy. Rather than awaiting utility expansion, artificial intelligence companies increasingly secure dedicated or on-site power generation, including advanced natural gas turbines, proprietary fuel cell technologies, and agreements for small modular nuclear reactors.

Microsoft's 20-year power purchase agreement with Constellation Energy, which is restarting a reactor at the Three Mile Island nuclear facility to provide 819 megawatts of power, exemplifies this strategy.

Elon Musk's xAI relied on gas turbine power supplied through VoltaGrid at its Memphis data centre whilst awaiting utility expansion.

These solutions, whilst introducing operational complexity and marginal cost increases, effectively circumvent the binding constraint imposed by grid capacity limitations.

Google's strategic acquisition of renewable energy assets and its energy partnerships with NextEra Energy reflect a more sophisticated approach, wherein energy generation is integrated with data centre development rather than treated as a secondary consideration.

By directly controlling energy supply, cloud providers transform energy from a commodity procurement challenge into a controlled variable within infrastructure optimisation.

Thermal Management and Cooling

The second critical constraint reflects thermal management requirements within high-density computational facilities. Traditional air-based cooling, adequate for conventional data centre operations, proves insufficient for artificial intelligence workloads wherein individual accelerators consume kilowatts of power within compact spatial arrangements.

Cooling alone accounts for 30 to 40 percent of electricity use in traditional data centres and becomes an even more critical constraint within advanced facilities. Direct-to-chip liquid cooling can reduce cooling-associated energy consumption by up to 30 percent relative to conventional air-based systems, partially offsetting the thermal challenges introduced by ever-increasing computational density.
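The two percentages combine into a facility-level saving, which can also be expressed through power usage effectiveness (PUE), the ratio of total facility energy to the energy delivered to IT equipment. The sketch simplifies by assuming that all non-cooling electricity reaches IT equipment (ignoring distribution losses and lighting), and it applies the upper-bound 30 percent reduction.

```python
def cooling_scenario(cooling_share: float, cooling_reduction: float) -> dict[str, float]:
    """Facility-level effect of cutting cooling energy by `cooling_reduction`.

    Simplification: every kWh that is not cooling is treated as IT load.
    """
    it_share = 1.0 - cooling_share
    cooling_after = cooling_share * (1.0 - cooling_reduction)
    return {
        "pue_before": 1.0 / it_share,
        "pue_after": (it_share + cooling_after) / it_share,
        "facility_saving": cooling_share * cooling_reduction,
    }


for share in (0.30, 0.40):
    s = cooling_scenario(share, 0.30)
    print(f"cooling {share:.0%}: PUE {s['pue_before']:.2f} -> {s['pue_after']:.2f}, "
          f"total electricity down {s['facility_saving']:.0%}")
```

Under these assumptions, a 30 percent cut in cooling energy trims total facility electricity by roughly 9 to 12 percent.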

The trajectory of cooling technology adoption, incorporating immersion cooling, closed-loop liquid cooling, and innovative heat recovery approaches, indicates that thermal management, whilst remaining a constraint, is being progressively mitigated through technological innovation and design optimisation.

Chip Obsolescence and Financing Mismatch

Perhaps the most subtle yet potentially most consequential limitation reflects the profound mismatch between chip economic lifecycles and infrastructure financing structures. Artificial intelligence accelerators, whether NVIDIA graphics processing units or custom silicon alternatives, achieve economically productive lifecycles of approximately 18 to 36 months before technological obsolescence renders them suboptimal relative to subsequent generations.

NVIDIA's progression from the Hopper generation (2022) to Blackwell (2024), the interim Blackwell Ultra refresh (2025), and the anticipated Rubin generation (2026) illustrates its shift to an annual product cadence, with each generation reportedly delivering up to roughly 10-fold performance improvements on selected workloads.

This velocity of technological change renders accelerators deployed in 2024 potentially near-obsolete by 2028, yet infrastructure financing, encompassing real estate leases, power contracts, and equipment financing, frequently extends across 10 to 30 year horizons.
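The mismatch can be made concrete with a crude model in which the newest hardware improves by a constant factor each generation on a fixed cadence, smoothed into continuous decay. The roughly 10-fold gain and two-year spacing are taken from the figures above as an upper-end case; the 3-fold case is an assumed, more conservative alternative, and the helper names are illustrative.

```python
import math


def relative_performance(age_years: float, cadence_years: float,
                         gain_per_generation: float) -> float:
    """Deployed hardware's performance as a fraction of the newest generation."""
    return gain_per_generation ** (-age_years / cadence_years)


def hardware_generations(financing_years: float, economic_life_years: float) -> int:
    """Hardware generations a facility cycles through over its financing term."""
    return math.ceil(financing_years / economic_life_years)


for gain in (10.0, 3.0):
    share = relative_performance(4, 2, gain)
    print(f"{gain:.0f}x per generation: 2024 hardware in 2028 ≈ {share:.0%} of the frontier")

for term in (10, 30):
    print(f"{term}-year commitment ≈ {hardware_generations(term, 3.0)} hardware generations "
          f"at the upper-end 36-month economic life")
```

Even in the conservative case, a facility financed over a decade or more must host several successive hardware generations, each of which needs fresh capital.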

This lifecycle mismatch introduces profound financial fragility. Should computational demands evolve differently than anticipated, or should technological trajectories diverge from current assumptions, companies may find themselves committed to long-term lease and power obligations whilst possessing hardware increasingly inadequate for competitive operation.

The solution to this constraint, still in nascent stages of implementation, involves designing data centre facilities explicitly for rapid hardware churn, establishing financing structures aligned with technological replacement cycles rather than physical real estate timespans, and developing equipment refresh policies that explicitly anticipate two-year technological lifecycles rather than treating extended equipment lifecycles as financially positive developments.
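One way to see why refresh policy matters is to compare an accounting life with an economic life. The sketch assumes straight-line depreciation over a six-year book life (an assumption chosen for illustration) against the 18-to-36-month economic lifecycle described above, and reports how much of the original cost would still sit on the books when the hardware stops being competitive.

```python
def book_value(cost: float, book_life_years: float, age_years: float) -> float:
    """Remaining straight-line book value after `age_years`."""
    return cost * max(0.0, 1.0 - age_years / book_life_years)


COST = 100.0            # normalised purchase cost
BOOK_LIFE_YEARS = 6.0   # assumed accounting life (illustrative)

for economic_life in (1.5, 3.0):
    stranded = book_value(COST, BOOK_LIFE_YEARS, economic_life)
    print(f"obsolete after {economic_life} years: {stranded:.0f}% of cost still on the books")
```

Under these assumptions, between half and three-quarters of the purchase cost would remain undepreciated at the point of obsolescence, a potential write-down that financing aligned with shorter replacement cycles would avoid.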

Water Availability and Environmental Constraints

Cooling infrastructure, whether liquid-based or air-based, can require substantial freshwater resources. Current projections anticipate artificial intelligence data centre water demand reaching as much as 1.7 trillion gallons annually by 2027, introducing serious constraints in water-scarce geographies.

Geographic dispersion of infrastructure development, strategic site selection emphasising water availability, closed-loop cooling systems minimising freshwater consumption, and wastewater treatment innovation represent mechanisms through which this constraint is being addressed.

Future Trajectory and Strategic Implications: The Next Phase of Infrastructure Competition

The artificial intelligence infrastructure landscape will undergo substantial evolution throughout 2026 and beyond, driven by competitive pressures, technological innovation, and financial realities.

OpenAI's projection of $29.4 billion in revenue for 2026, more than double the 2025 figure, implies a compound annual growth rate of approximately 182 percent across the two years from 2024 and suggests continued rapid growth in customer adoption and utilisation. Anthropic, possessing perhaps the strongest enterprise positioning, will likely continue expanding its multi-cloud infrastructure portfolio.
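The growth figures can be checked directly from the three revenue numbers cited in this piece: $3.7 billion for 2024 and the projections of $12.7 billion for 2025 and $29.4 billion for 2026. The sketch computes year-over-year growth and the two-year compound annual growth rate.

```python
revenue_bn = {2024: 3.7, 2025: 12.7, 2026: 29.4}  # 2025 and 2026 are projections


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1.0 / years) - 1.0


yoy = {year: revenue_bn[year] / revenue_bn[year - 1] - 1.0 for year in (2025, 2026)}
two_year = cagr(revenue_bn[2024], revenue_bn[2026], 2)

for year, growth in yoy.items():
    print(f"{year}: {growth:.0%} year-over-year")
print(f"2024-2026 CAGR: {two_year:.0%}")
```

Growth is projected to remain exceptional in absolute terms while slowing in percentage terms, from roughly 243 percent to roughly 131 percent year over year.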

Google, leveraging its historical data centre expertise and energy procurement capabilities, will progressively emphasise serving capacity and custom silicon optimisation over raw compute expansion.

The trajectory of custom silicon adoption will prove consequential. OpenAI's Broadcom partnership for custom accelerators, Google's Ironwood tensor processing unit trajectory, Amazon's Trainium development, and Meta's reported negotiations for Google tensor processing unit rentals collectively indicate a fundamental strategic pivot away from exclusive reliance upon NVIDIA's general-purpose accelerators. This shift, coupled with the estimated cost savings of custom silicon (30 to 40 percent relative to equivalent NVIDIA capacity), carries profound implications for semiconductor industry dynamics and the sustainability of NVIDIA's historical margin structures.

Financial sustainability will emerge as the critical focal point. By one estimate, the industry requires approximately $400 billion in annual revenue, assuming 25 percent gross margins, merely to cover the depreciation associated with 2025 infrastructure investments plus a modest 15 percent return on capital. Current revenue, estimated at between $20 and $40 billion, falls dramatically short of this requirement.

Either revenue must scale roughly 10- to 20-fold from contemporary levels, infrastructure investment must decelerate substantially, or the fundamental economics of infrastructure utilisation must be recalibrated through efficiency improvements and pricing optimisation.
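The $400 billion requirement can be read as a simple identity: gross profit (revenue times gross margin) must cover annual depreciation plus the target return on invested capital. The piece does not state the capital base or depreciation period behind the figure; the inputs below, $400 billion of capital depreciated straight-line over ten years, are hypothetical values that happen to reproduce it, and the shorter-life case shows how sensitive the result is to hardware obsolescence.

```python
def required_revenue_bn(capex_bn: float, useful_life_years: float,
                        required_return: float, gross_margin: float) -> float:
    """Revenue whose gross profit covers depreciation plus a return on capital."""
    depreciation_bn = capex_bn / useful_life_years
    target_return_bn = capex_bn * required_return
    return (depreciation_bn + target_return_bn) / gross_margin


CAPEX_BN = 400.0  # hypothetical capital base (not stated in the source)

for life in (10.0, 5.0):
    need = required_revenue_bn(CAPEX_BN, life, 0.15, 0.25)
    low, high = need / 40.0, need / 20.0  # vs. current revenue of $20bn-$40bn
    print(f"{life:.0f}-year life: need ≈ ${need:,.0f}bn/yr, "
          f"i.e. {low:.0f}x to {high:.0f}x current revenue")
```

With a ten-year life the gap is 10- to 20-fold; if the same capital had to be recovered over five years, consistent with faster chip obsolescence, the requirement would rise to roughly $560 billion.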

Conclusion: Infrastructure as the Defining Competitive Frontier

The contemporary artificial intelligence landscape represents a fundamental departure from the historical pattern whereby intellectual capital and algorithmic innovation constituted the primary competitive differentiation. Instead, infrastructure, the ability to accumulate and operationalise massive computational resources, has emerged as the defining competitive frontier. OpenAI's $500 billion Stargate commitment, combined with Google's $75 billion annual expenditure and Anthropic's $50 billion partnership, amounts to roughly $625 billion and arguably represents the largest coordinated technology infrastructure deployment in history.

These three companies have adopted fundamentally divergent approaches reflecting their distinct competitive positions, technological philosophies, and financial circumstances.

OpenAI's vertical integration strategy, emphasising proprietary facilities and custom silicon, reflects the company's need for complete control over the computational resources upon which its competitive positioning depends.

Google's efficiency-oriented approach, leveraging custom silicon and energy integration, reflects the company's historical strength in infrastructure development and its capacity to optimise incrementally rather than redesign fundamentally.

Anthropic's diversified multi-cloud strategy reflects the company's recognition that complete infrastructure ownership, whilst strategically desirable, imposes financial burdens that multi-cloud diversification can mitigate.

The pathway from current circumstances to sustainable profitability remains unclear, dependent upon continued acceleration of enterprise adoption, realisation of pricing power sufficient to sustain premium margins despite competitive pressure, and technological trajectories that continue validating historical scaling laws.

The profound questions regarding chip obsolescence, energy availability, and capital return requirements will determine whether contemporary infrastructure investment ultimately proves visionary or speculative.

Nonetheless, the strategic imperative driving infrastructure expansion remains compelling. The competitive nature of artificial intelligence development, the exponential growth in legitimate customer demand, and the apparent correlation between computational scale and capability advancement collectively render continued infrastructure investment seemingly inevitable, irrespective of underlying financial uncertainty.

The artificial intelligence infrastructure boom, for better or worse, appears destined to continue unabated throughout 2026 and beyond, reshaping global energy systems, financial markets, and technological competition in ways whose full implications remain subject to profound uncertainty.