The Paradox of Power: AI-Generated Imagery and Policy Shifts in the Trump Administration

Introduction

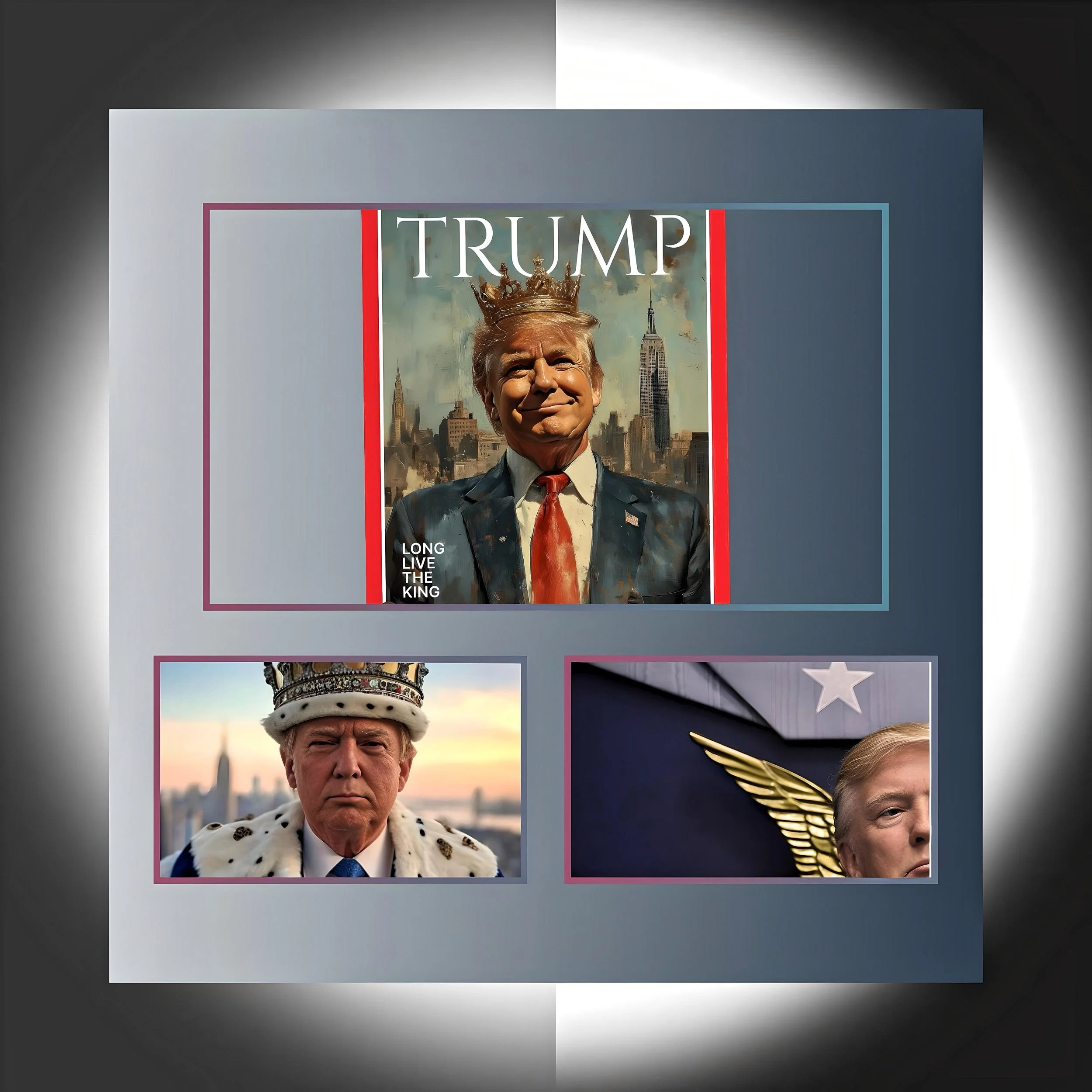

The White House’s release of an AI-generated image depicting President Donald Trump as a monarch, captioned “Long Live the King,” has ignited a firestorm over the ethical use of artificial intelligence in governance and political campaigning.

This incident, on February 19, 2025, coincided with Trump’s celebration of his administration’s decision to rescind federal approval for New York City’s congestion pricing program, a move framed as a populist victory but criticized as an overreach of federal authority.

The controversy underscores a broader tension within the Trump administration: while Trump publicly warns of AI’s dangers, his administration and political allies have weaponized the technology for political gain, even as federal AI safety initiatives are being systematically dismantled.

The “Long Live the King” Controversy: AI as a Tool of Political Theater

Genesis of the AI-Generated Monarch Imagery

On February 19, 2025, the White House’s official social media accounts shared a fabricated Time magazine cover featuring Trump adorned with a crown and the caption “LONG LIVE THE KING”.

This image, later confirmed to be AI-generated, accompanied Trump’s Truth Social post declaring victory over New York’s congestion pricing initiative: “CONGESTION PRICING IS DEAD. Manhattan, and all of New York, is SAVED. LONG LIVE THE KING!”

The visual rhetoric drew immediate comparisons to autocratic regimes, with New York Governor Kathy Hochul retorting, “We are a nation of laws, not ruled by a king”.

Public and Institutional Backlash

The imagery sparked bipartisan condemnation.

Critics highlighted the incongruity of a democratically elected leader invoking monarchical symbolism, particularly through AI-manipulated content disseminated via official channels.

On social media, users mocked the post as “embarrassing and disrespectful to our country,” while journalists noted the irony of deploying AI—a technology Trump has labeled “dangerous”—to amplify authoritarian aesthetics.

Governor Hochul’s administration filed a lawsuit challenging the congestion pricing reversal, framing it as an unconstitutional federal intrusion into state affairs.

AI’s Role in Redefining Presidential Messaging

This incident reflects a strategic shift in political communication during Trump’s second term.

By leveraging AI to craft visually arresting, emotionally charged content, the administration bypasses traditional media filters, directly shaping narratives for its base.

However, the lack of transparency around AI-generated posts blurs the line between official statements and partisan propaganda, raising concerns about the erosion of institutional trust.

Trump’s Paradoxical Stance on AI: Warnings Versus Weaponization

Public Warnings About AI Risks

Trump has repeatedly framed AI as a grave threat.

In a 2024 Fox Business interview, he called it “maybe the most dangerous thing out there,” citing risks like deepfake-driven election interference and financial system manipulation.

These concerns echoed bipartisan anxieties about AI-generated disinformation, exemplified by viral fake images of Trump’s arrest in 2023 and a 2024 robocall mimicking President Biden’s voice.

Trump even accused Kamala Harris’s campaign of using AI to inflate the crowd at one of her rallies, a claim debunked by photographs, video, and eyewitness accounts.

Campaign Adoption of AI Tactics

Despite these warnings, Trump and his supporters have aggressively exploited AI. Examples include:

Deepfakes targeting Black voters

Supporters circulated AI-generated images of Trump alongside African Americans, falsely implying widespread Black support for his 2024 candidacy.

Meme warfare

Trump’s Truth Social account shared AI-created visuals of Taylor Swift endorsing him and of Kamala Harris addressing a Soviet-style rally, content designed to spread conspiratorial narratives virally.

Satirical manipulation

A Michigan-based Trump supporter’s AI image of the former president meeting Black voters on a porch garnered 1.3 million views on X, and many viewers mistook it for a genuine photograph.

This duality—decrying AI’s dangers while deploying it opportunistically—highlights a calculated approach: framing adversaries’ AI use as malicious while normalizing its role in conservative messaging.

Policy Shifts: Dismantling AI Safeguards for “Innovation”

Revocation of Biden-Era AI Regulations

On January 20, 2025, Trump signed an executive order revoking President Biden’s 2023 AI safety framework, which had mandated risk assessments for federal AI systems and equity considerations in procurement.

The 2024 Republican platform, on which Trump campaigned, had denounced that framework as imposing “radical leftwing ideas” that stifle innovation, promising instead “AI development rooted in free speech and human flourishing.”

Accompanying directives ordered a review of all AI-related policies enacted under Biden, focusing on eliminating “ideological bias”.

Targeting the AI Safety Infrastructure

In February 2025, reported plans to cut probationary staff at the U.S. AI Safety Institute (AISI), a Biden-era initiative housed within the National Institute of Standards and Technology, signaled a broader retreat from safety-focused governance.

Vice President JD Vance crystallized this shift at the Paris AI Action Summit in February 2025, declaring, “The AI future is not going to be won by hand-wringing about safety.”

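Concurrently, the U.K. renamed its AI Safety Institute the AI Security Institute, dropping “safety” from its name. Because the change came under a Labour government, it suggests the retreat from safety framing is a transnational trend rather than a purely conservative one.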

Implications for National Security and Democracy

Critics argue that defanging AI oversight accelerates risks in several areas:

Election integrity

Reduced safeguards against deepfakes could intensify disinformation in the 2026 midterms.

Cybersecurity gaps

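The 2024 Iranian hack of Trump’s campaign was carried out through spear-phishing rather than AI. It nonetheless underscores how exposed campaigns are to foreign intrusion, a vulnerability that AI tools could magnify.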

Market instability

Unchecked AI in financial algorithms may amplify systemic shocks, a concern Trump previously highlighted.

Yet the administration counters that deregulation spurs private-sector innovation, citing U.S. tech firms’ lead in generative AI tools like ChatGPT.

The Normalization of AI in Political Campaigns

Case Study: The 2024 Election

Trump’s 2024 campaign and its supporters made extensive use of AI-driven outreach:

Microtargeting

AI analyzed voter data to personalize ads, exploiting psychological profiles for maximal engagement.

Synthetic media

Supporters like conservative radio host Mark Kaye created AI images of Trump with Black voters, later admitting, “I’m not claiming it’s accurate”.

Counterfactual narratives

AI-generated visuals depicted “scenes” from alternative realities, such as Trump attending a “Stop the Steal” rally he never physically visited.

These tactics, while legally permissible under lax disclosure laws, erode shared factual frameworks, enabling parallel information ecosystems.

Global Authoritarian Playbooks

Trump’s AI strategy mirrors tactics employed by China and Russia, where state-aligned actors use synthetic media to glorify leaders and smear dissidents. The “Long Live the King” imagery, for instance, evokes North Korea’s deification of the Kim dynasty—a comparison critics seized upon.

Legal and Ethical Crossroads

First Amendment Challenges

The Supreme Court may soon grapple with when AI-generated political content is protected speech and when it crosses into unprotected categories such as defamation.

Legal scholars note that existing precedents, like New York Times v. Sullivan, inadequately address synthetic media’s unique capacity to deceive.

Proposed Legislative Remedies

Bipartisan bills, such as the AI Transparency Act, would mandate watermarks for AI content.

However, these bills have stalled in Congress, and the administration’s deregulatory agenda dims their prospects.

Ethical Dilemmas for Platforms

Meta and X face mounting pressure to label AI posts, yet their policies remain inconsistent.

Trump’s Truth Social, which permits unmarked AI content, exemplifies this regulatory void.

Conclusion: Navigating the AI-Political Complex

The “Long Live the King” saga epitomizes a transformative era where AI reshapes political power dynamics.

While Trump’s administration harnesses AI to consolidate messaging control, its erosion of safety protocols risks unleashing destabilizing forces—from election meddling to financial fraud.

The path forward demands a recalibration: fostering innovation without sacrificing democratic accountability, perhaps through independent AI ethics boards or international accords.

As synthetic media becomes ubiquitous, the resilience of democratic institutions hinges on transparent norms that distinguish governance from spectacle, and truth from algorithmically engineered persuasion.